An Overview of Apache Spark Training

The Apache Spark Course in Coimbatore is designed to provide learners with complete knowledge of distributed data processing and real-time analytics. Through Apache Spark training in Coimbatore, participants gain in-depth expertise in working with RDDs, DataFrames and Spark SQL while exploring structured and unstructured data. The Apache Spark Certification Course in Coimbatore combines theoretical learning with practical exposure, ensuring learners understand core concepts through projects and hands-on sessions guided by industry professionals. This Apache Spark course offers flexible learning options, including self-paced study and instructor-led classes, allowing participants to learn as per their convenience. By enrolling in Apache Spark training, learners not only boost their technical proficiency but also enhance their job readiness, making them highly competitive in the job market. Strong abilities in data streaming, machine learning integration and dashboard development are developed through the Apache Spark training course, preparing people for extremely fulfilling positions in a variety of sectors that depend on data-driven solutions.

Additional Info

Future Trends for Apache Spark Course

- Real-Time Data Processing:

Real-time analytics is central to Apache Spark's future. Businesses in highly competitive markets increasingly need immediate information so they can respond quickly. Spark's streaming capabilities make it well suited to processing real-time data from financial systems, social media and the Internet of Things. Training will therefore place growing emphasis on real-time workflows so that professionals can process continuous data accurately and quickly. Learners with real-time skills will be better equipped to handle urgent business problems. More businesses are investing in fast insights rather than relying only on traditional batch analysis, and streaming-focused Apache Spark training prepares professionals for these demands.

- Integration with Cloud Platforms:

Apache Spark is becoming a mainstream tool in the AWS, Azure and Google Cloud ecosystems as cloud adoption increases. Training courses will demonstrate how Spark integrates with cloud-native compute and storage services. Students will get hands-on experience setting up scalable cloud clusters, which gives them flexibility in handling dynamic workloads. Cloud-powered Spark skills are in high demand. Cloud integration can also reduce infrastructure costs for businesses. Professionals trained in cloud-Spark workflows are more versatile and employable. Spark training is expected to keep pace with cloud innovation.

- Machine Learning Expansion:

Apache Spark is used extensively in machine learning applications. Training will concentrate on AI-driven solutions and predictive modeling with MLlib, along with integration with libraries such as TensorFlow. Professionals will learn how to prepare and analyze large datasets for model training. This trend reflects the growth of AI-driven decision-making. Spark's role in automating insights is expected to grow stronger. Machine learning skills will make Spark professionals stand out in analytics-driven industries. Training will emphasize both algorithm building and deployment. The combination of Spark and AI opens up a wide range of career possibilities.

- Graph Analytics Advancements:

Graph analytics is becoming increasingly important for network analysis, recommendation engines and fraud detection. Spark GraphX is designed to handle highly complex relationships between data points. Through training, students will investigate real-world situations where graph models enhance business intelligence. This field becomes more important as networks grow more complex, and graph analytics is expected to be one of the main trends in upcoming Spark use cases. Experts in graph analysis can give industry fresh perspectives. Businesses seek professionals who can uncover hidden connections in vast amounts of data, and Spark's graph analytics training helps keep this skill set relevant.

- Enhanced Data Security:

With rising concerns about data breaches, Spark training will emphasize security measures in data pipelines. Encryption, authentication and access control are becoming non-negotiable. Learners will gain knowledge of protecting sensitive data in distributed environments. Companies demand professionals who can implement secure big data solutions. Future Spark skills will always involve a strong security focus. Security-focused Spark professionals will see demand in finance and healthcare. Training in compliance-ready Spark solutions will grow in importance. Cybersecurity and data analytics will continue to converge in Spark careers.

- IoT and Edge Computing Integration:

The Internet of Things generates huge amounts of sensor data, and Apache Spark is playing a central role in processing it at scale. Training will increasingly cover Spark's connection with edge devices and IoT ecosystems so that students understand how to handle fast, device-driven data. Spark professionals will have many opportunities in this field as IoT adoption increases. Future projects will combine IoT data with real-time decision-making. Training will highlight streaming pipelines for smart devices. Spark's IoT focus opens strong career paths in automation-driven industries.

- Data Visualization Enhancements:

Spark is great for processing, but visualization completes the cycle of insights. Future courses will cover Spark integration with Tableau, Power BI and open-source tools, helping students create insightful dashboards from raw data. Employers prefer professionals with strong data analysis and presentation skills, so combined Spark analytics and visualization abilities are increasingly essential. Clear visual storytelling improves business decision-making. In analytics teams, Spark experts who are adept at visualization stand out. This trend means future Spark training will treat data processing and presentation together.

- Low-Code and Automation Features:

Automation in data processing is changing how Spark is used. Training will focus on using Spark with automated pipelines and low-code platforms. Thanks to this trend, big data solutions are becoming accessible to non-technical users. Professionals will learn how to build faster workflows with less coding, which improves productivity while preserving analytical flexibility. Low-code integration will increase Spark's adoption across industries. Training will focus on automation-first design and streamlined workflows. Automation-savvy Spark specialists will be essential to digital transformation.

- Scalability and Performance Tuning:

Improving Spark's performance becomes essential as businesses grow. To increase speed, training will concentrate on memory tuning, caching and partitioning. Students will learn how to scale applications to millions of records. Future projects will require high-performance Spark specialists, which makes scalability a key trend in Spark skill development. Employers are looking for professionals who can combine efficiency and speed. Training in tuning techniques ensures real-world readiness. Spark's emphasis on scalability gives students technical skills that stay relevant.
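
To make the partitioning idea above more concrete, here is a minimal plain-Python sketch of hash partitioning, the general technique of assigning records to partitions by a hash of their key so that records sharing a key end up together. It does not use the Spark API; the function name `hash_partition` and the sample records are hypothetical and exist only for illustration.

```python
import zlib
from collections import defaultdict


def hash_partition(records, num_partitions):
    """Assign (key, value) records to partitions using a stable hash of the key.

    Illustrative only: this mimics the idea of key-based partitioning,
    not Spark's actual implementation.
    """
    partitions = defaultdict(list)
    for key, value in records:
        # crc32 is stable across runs, unlike Python's built-in hash() for strings.
        index = zlib.crc32(key.encode("utf-8")) % num_partitions
        partitions[index].append((key, value))
    return dict(partitions)


# Hypothetical sample data: two records share the key "user_a".
records = [("user_a", 1), ("user_b", 2), ("user_a", 3), ("user_c", 4)]
parts = hash_partition(records, num_partitions=3)

for index, recs in sorted(parts.items()):
    print(index, recs)
```

Because every record with the same key lands in the same partition, a per-key aggregation can run inside each partition without moving data between workers, which is one reason partitioning choices affect performance.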

- Cross-Industry Applications:

Apache Spark is no longer limited to IT companies. Sectors such as manufacturing, finance, healthcare and retail use it to address their own specific problems. Training programs will incorporate sector-specific use cases as they develop, so that students can apply their Spark skills across a variety of sectors. Future Spark roles will require cross-domain skills to solve real-world problems. This variety of industries gives Spark professionals career stability. Training on real-world industry use cases makes skills more applicable. Spark's ability to adapt to many industries enhances its long-term career value.

Tools and Technologies for Apache Spark Course

- Spark SQL:

Spark SQL is one of the most powerful tools in Apache Spark training, helping learners query structured data using SQL-like commands. It bridges the gap between relational data and big data, offering smooth integration with Hive, JDBC and BI tools. Learners can analyze data without complex coding and use familiar query languages for real-time insights. For experts handling enterprise-scale datasets, Spark SQL is therefore crucial. You acquire the ability to effortlessly execute complex analytics and optimize queries as a result.

- Spark Streaming:

Spark Streaming is a vital tool for processing real-time data streams. During Apache Spark training, students learn how to handle live data sources such as Twitter feeds, Kafka and log files. Spark Streaming splits incoming data into micro-batches and processes each batch efficiently, enabling faster decision-making. Organizations rely on Spark Streaming for fraud detection, monitoring and IoT applications. Training with this tool builds expertise in real-time analytics, making you job-ready for data-driven industries.
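
The micro-batch idea can be sketched in a few lines of plain Python. This is a conceptual illustration only, not the Spark Streaming API: the function `micro_batches`, the one-second interval and the sample events are all assumptions made for the example.

```python
from collections import defaultdict


def micro_batches(events, interval_seconds):
    """Group (timestamp, payload) events into consecutive fixed-width time windows."""
    batches = defaultdict(list)
    for timestamp, payload in events:
        # Events whose timestamps fall in the same interval share a batch index.
        batch_index = int(timestamp // interval_seconds)
        batches[batch_index].append(payload)
    # Return the non-empty batches in time order.
    return [batches[i] for i in sorted(batches)]


# Hypothetical event stream: (seconds since start, event name).
events = [(0.2, "login"), (0.9, "click"), (1.1, "click"), (2.5, "purchase")]
batches = micro_batches(events, interval_seconds=1)
counts = [len(batch) for batch in batches]
print(counts)  # [2, 1, 1]
```

Each small batch can then be processed with ordinary batch logic, such as counting events per interval, which is how a stream is handled as a sequence of short batch jobs.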

- MLlib:

MLlib is Spark’s machine learning library, designed to make predictive analytics simpler and faster. Learners gain hands-on exposure to algorithms like classification, regression, clustering and collaborative filtering. Apache Spark training helps you understand how to implement scalable ML models without writing everything from scratch. The tool also supports feature extraction, selection and dimensionality reduction for optimized workflows. With MLlib, students prepare for advanced careers in AI and machine learning using big data.

- GraphX:

GraphX allows learners to work with graph-parallel computation for large-scale data. In Apache Spark training, students explore graph algorithms like PageRank, connected components and shortest paths. This tool is particularly useful in social network analysis, recommendation engines and fraud detection. It combines ETL and iterative graph computation in a single system, ensuring efficiency. Learning GraphX equips you with specialized skills for analyzing interconnected data patterns.
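
As a rough illustration of one of the algorithms named above, the following plain-Python sketch computes PageRank by repeated iteration on a tiny graph. It is not GraphX code and does not follow GraphX's exact formulation; the function `pagerank`, the damping factor of 0.85, the iteration count and the three-node graph are assumptions chosen for the example.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Iterative PageRank over a dict mapping each node to its outgoing links."""
    nodes = set(links) | {t for targets in links.values() for t in targets}
    n = len(nodes)
    ranks = {node: 1.0 / n for node in nodes}

    for _ in range(iterations):
        # Every node starts each round with the "random jump" share.
        new_ranks = {node: (1.0 - damping) / n for node in nodes}
        for node in nodes:
            targets = links.get(node, [])
            if targets:
                # Split this node's rank evenly among the pages it links to.
                share = damping * ranks[node] / len(targets)
                for target in targets:
                    new_ranks[target] += share
            else:
                # A node with no outgoing links spreads its rank over all nodes.
                share = damping * ranks[node] / n
                for target in nodes:
                    new_ranks[target] += share
        ranks = new_ranks
    return ranks


# Hypothetical graph: a links to b and c, b links to c, c links back to a.
links = {"a": ["b", "c"], "b": ["c"], "c": ["a"]}
ranks = pagerank(links)

for node in sorted(ranks, key=ranks.get, reverse=True):
    print(node, round(ranks[node], 3))
```

In this small graph, node c receives links from both a and b, so it ends up with the highest rank; b, which is linked only from a, ends up lowest.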

- Hadoop Integration:

Hadoop compatibility is a crucial topic in Apache Spark training courses. Students learn how Spark integrates with the Hadoop Distributed File System (HDFS) to provide dependable data storage. By combining Spark with Hadoop, businesses can handle both batch and real-time processing. The combination scales to petabytes of structured and unstructured data. Training on this integration helps you manage hybrid big data environments efficiently.

- Apache Kafka:

Apache Kafka is an essential tool in Spark training because it is frequently used to build data pipelines and streaming applications. Students learn how to process high-velocity event data by integrating Spark Streaming with Kafka. The integration improves reliability when handling social media feeds, transactions and logs. Businesses that depend on a constant flow of data rely on this combination. By becoming proficient with Spark and Kafka, learners can move into advanced real-time analytics and data engineering roles.

- PySpark:

PySpark is the Python API for Spark, which simplifies development and makes big data processing more accessible. Training sessions focus on teaching learners how to code Spark applications using Python. It supports tasks like data cleaning, transformation and analysis with fewer complexities. PySpark is highly preferred by data scientists for building prototypes quickly. By learning PySpark, you open career doors in analytics, data science and AI-driven industries.

- SparkR:

SparkR is an R package that brings Spark capabilities to R users, particularly those in statistical and analytical domains. This tool is introduced in Apache Spark training to support statistical modeling and data visualization. Students can use familiar R functions while working with large datasets. SparkR lets data analysts take part in big data work without leaving the environment they already know. It bridges large-scale analytics and statistical computing.

- Hive on Spark:

Hive on Spark combines the capabilities of Spark's execution engine with SQL-based querying. For students with a background in data warehousing, this tool is essential. Students learn how Hive queries execute more quickly on Spark during Apache Spark training. It facilitates large-scale analytics across industries and enhances query performance. Professionals improve their knowledge of contemporary data warehouse ecosystems with Hive on Spark.

- TensorFlow with Spark:

Integrating TensorFlow with Spark introduces learners to the world of deep learning at scale. Training includes hands-on exposure to building distributed deep learning models using Spark clusters. This tool supports real-time predictions and advanced analytics in AI projects. It is especially valuable in fields like image recognition, NLP and IoT applications. By learning TensorFlow with Spark, you gain an edge in blending big data with artificial intelligence.

Roles and Responsibilities of Apache Spark Professionals

- Apache Spark Developer:

An Apache Spark Developer is responsible for designing, developing and optimizing Spark applications that handle large datasets. They work on real-time data pipelines, batch processing and distributed systems to deliver reliable solutions. Their role includes writing clean and efficient code in languages like Scala, Java or Python. Developers are responsible for the performance and scalability of Spark-based systems. They also work with data engineers and architects to connect Spark with existing infrastructure. This position requires strong problem-solving skills to transform raw data into actionable insights.

- Data Engineer:

In Apache Spark training, Data Engineers learn how to design, build and manage reliable data pipelines. They focus on ensuring data flows smoothly between multiple sources and destinations with Spark as the core engine. Their work involves integrating Spark with Hadoop, Kafka and databases for scalable data management. Data Engineers also take responsibility for handling data quality, validation and transformation. They enable organizations to use clean and structured data for analysis and reporting. In Spark projects, they are the backbone of the entire data processing workflow.

- Data Analyst:

A Data Analyst uses Apache Spark to analyze, interpret and visualize data for informed business decisions. They leverage Spark SQL and SparkR to query massive datasets efficiently. Analysts focus on uncovering hidden patterns, generating reports and sharing insights with stakeholders. Their work supports forecasting, performance evaluation and strategy building in organizations. Spark training helps analysts move beyond spreadsheets and work with enterprise-level datasets. By mastering Spark tools, analysts improve decision-making speed and accuracy.

- Big Data Consultant:

A Big Data Consultant offers expert advice on how to use Apache Spark solutions across a range of businesses. They evaluate business problems, propose Spark-based solutions and supervise implementation. Consultants help businesses select the most suitable cluster management systems, tools and frameworks. They also train teams to use Spark for real-time data processing. They serve as a link between business goals and technical implementation. Consultants with Spark experience become trusted advisors on digital transformation projects.

- Machine Learning Engineer:

Machine Learning Engineers use Spark MLlib to build and deploy predictive models at scale. They concentrate on developing algorithms for recommendation, classification and clustering systems. During Apache Spark training, students work on real-world machine learning tasks that run on distributed computing. ML engineers aim to keep their models accurate when handling terabytes of data. For more complex AI solutions, they frequently combine Spark ML with frameworks like PyTorch or TensorFlow. This role is essential in sectors that depend on automation and sophisticated analytics.

- Business Intelligence Specialist:

A Business Intelligence (BI) Specialist uses Spark to turn data into insightful dashboards and reports. To improve reporting capabilities, they combine Spark with visualization tools such as Tableau or Power BI. Their primary responsibility is giving executives relevant insights based on real-time data. BI specialists enable decision-makers to monitor performance and react quickly to trends. Spark training lets them handle large datasets beyond the capabilities of conventional BI systems. With these skills, BI specialists use analytics to deliver greater business value.

- Cloud Data Architect:

Cloud Data Architects design cloud-native solutions that integrate Spark for large-scale data processing. They focus on deploying Spark clusters on platforms like AWS, Azure or Google Cloud. Their responsibilities include ensuring scalability, security and cost-efficiency of Spark environments. They also work on hybrid solutions, combining on-premises systems with cloud services. In training, learners gain exposure to Spark on cloud platforms for enterprise-grade solutions. This role is critical for businesses moving towards digital-first strategies.

- Data Scientist:

A Data Scientist applies statistical techniques and advanced algorithms to Spark datasets for actionable insights. They use Spark MLlib and PySpark to conduct large-scale experiments and predictions. Their responsibilities include feature engineering, data cleaning and developing AI-driven solutions. Data Scientists trained in Spark can analyze data far beyond the limits of traditional tools. They often work with interdisciplinary teams to translate data into business growth. Spark training equips them with the ability to scale their models to enterprise needs.

- ETL Developer:

ETL (Extract, Transform, Load) Developers specialize in data migration and preparing data for analysis. They automate data ingestion, transformation and integration processes with Spark. Their job is to make sure that raw data becomes usable for reporting and analytics. ETL developers frequently use tools like Hive, Kafka and Hadoop to optimize workflows. Spark training gives them the know-how to manage complex pipelines effectively. They are essential in making sure business systems run on accurate and up-to-date information.

- Apache Spark Administrator:

An Apache Spark Administrator is responsible for managing and monitoring Spark clusters. Their responsibilities include node configuration, resource allocation and performance maintenance. They take care of Spark environment security, upgrades and troubleshooting. Administrators also monitor workloads to maximize efficiency across distributed systems. Training helps them maintain high availability in Spark clusters. This role is essential in companies that rely heavily on continuous data processing.

Companies Hiring Apache Spark Professionals

- Accenture:

Accenture actively hires Apache Spark professionals to deliver advanced analytics and digital transformation solutions. The company uses Spark for processing massive datasets across financial, healthcare and retail clients. Their focus is on real-time analytics, AI integration and cloud-based data solutions. Employees trained in Spark gain opportunities to work on global projects with diverse industries. This makes Accenture a leading destination for Apache Spark experts.

- Infosys:

Infosys hires Spark experts to strengthen its big data and AI-driven initiatives. The company uses Spark to obtain real-time information across logistics, banking and telecommunications. Skilled professionals can work with scalable data systems and cutting-edge tools. Infosys offers Spark engineers opportunities for ongoing education and development. Working here provides exposure to significant enterprise-level analytics initiatives.

- Tata Consultancy Services (TCS):

TCS employs Apache Spark professionals to support clients in digital transformation initiatives. Spark is used extensively in their projects for fraud detection, customer analytics and smart data pipelines. The company values professionals who can handle both batch and streaming data processing. With its global presence, TCS offers diverse opportunities for Spark engineers. Employees gain long-term career growth and stability in big data solutions.

- Wipro:

Wipro is well known for hiring Spark specialists to improve its cloud and analytics offerings. The company incorporates Spark into AI-driven decision-making systems across industries. Spark professionals contribute to building robust, high-performance data platforms. Wipro values individuals with practical training and real-world Spark project experience. This creates exciting roles for professionals looking to innovate in data analytics.

- IBM:

IBM is a global technology leader that uses Apache Spark to power AI, IoT and enterprise data platforms. Spark is integrated into IBM’s advanced solutions like Watson for large-scale analytics. Professionals trained in Spark work on projects involving machine learning and predictive insights. IBM looks for Spark experts who can design innovative solutions for business clients. Joining IBM gives exposure to cutting-edge technologies in the data analytics space.

- Capgemini:

Capgemini strengthens its digital and consulting offerings by hiring experts in Apache Spark. The business uses Spark for tasks that require scalable and quick data analysis. Capgemini's Spark specialists manage AI solutions, predictive modeling and client data platforms. In Spark deployments, the organization places a strong emphasis on problem-solving abilities and practical knowledge. Here, professionals have the opportunity to work on global big data initiatives.

- Cognizant:

Cognizant actively seeks Apache Spark professionals to deliver innovative analytics solutions for its clients. Spark is central to their projects involving real-time monitoring, personalization and customer engagement. Employees get hands-on exposure to Spark with cloud technologies like AWS and Azure. Cognizant values professionals who bring creativity along with technical expertise. This makes it an ideal workplace for Spark-trained candidates.

- Deloitte:

Deloitte employs Spark experts to help businesses use big data to make better decisions. Spark is important to its consulting offerings, particularly in healthcare analytics and financial risk management. Teams work with Spark experts to create secure, scalable and impactful solutions. Through Deloitte, Spark professionals gain access to high-profile clients and sectors. This creates strong opportunities for creativity and professional growth.

- Tech Mahindra:

Tech Mahindra is known for hiring Spark professionals to support telecom and IT clients. The company uses Spark to manage customer experience analytics and real-time network data. Spark experts here collaborate on significant international initiatives. Candidates with Spark training are in high demand for Tech Mahindra's big data positions. Employees benefit from a dynamic workplace that prioritizes innovation and scalability.

- HCL Technologies:

HCL Technologies hires Apache Spark professionals to enhance its data engineering and AI capabilities. The company implements Spark for large-scale analytics, predictive maintenance and cloud-based projects. Spark-trained employees contribute to building scalable solutions across industries. HCL emphasizes technical expertise and practical project exposure in Spark. Working here provides long-term growth in the evolving field of big data.

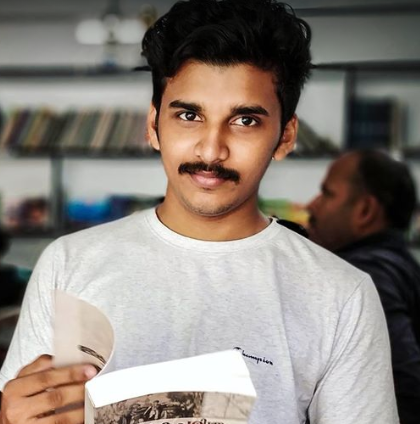

Regular 1:1 Mentorship From Industry Experts
