Service Validation and Testing Tutorial

Last updated on 29th Sep 2020, Blog, Tutorials

Service Validation and Testing defines the testing of services during the Service Transition phase. This will ensure that new or changed services are fit for purpose (this is known as utility) and fit for use (this is known as warranty).

The goal of Service Validation and Testing is to make sure that the delivery of activities adds the value that is agreed and expected. If testing has not been carried out properly, additional Incidents and Problems will arise.

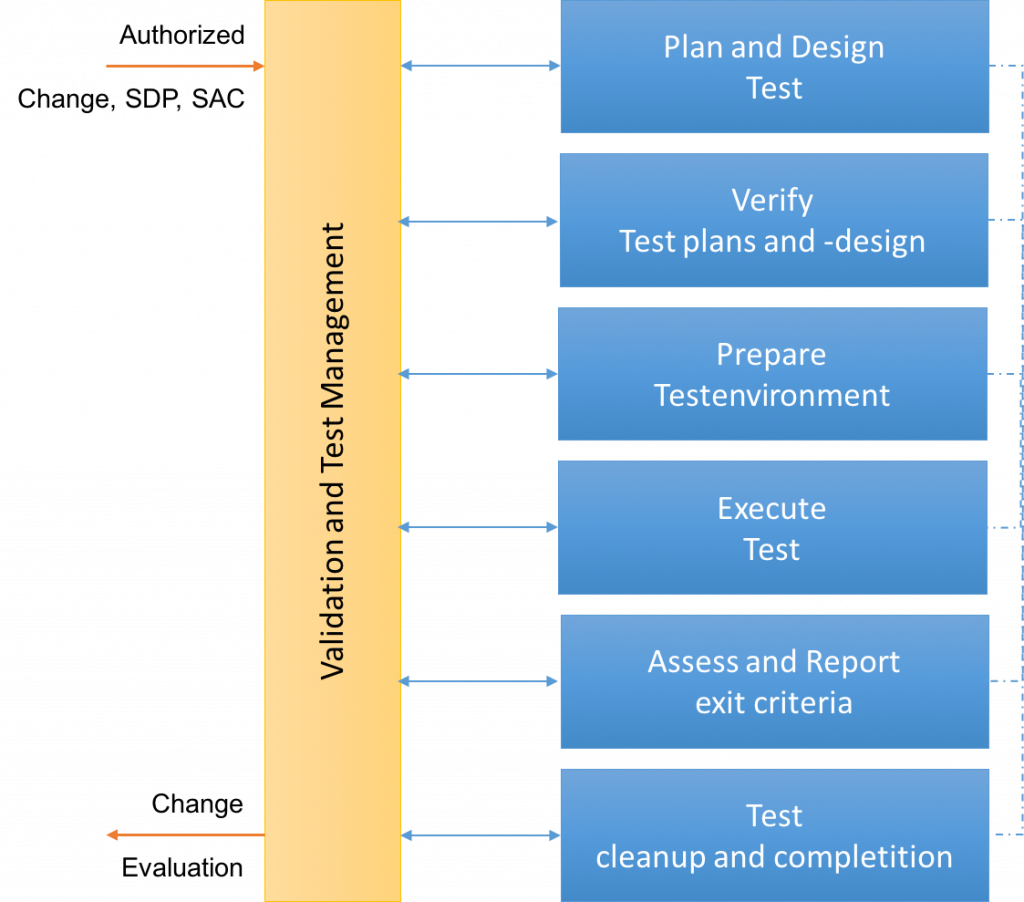

Service Validation and Testing comprises a number of activities, including:

- Validation and Test management – This consists of planning and managing/controlling and then reporting on the activities that have taken place during all phases to ensure they are fit for purpose/use.

- Planning and Design – Test planning and design activities take place in the early stages of the Service Lifecycle. These correlate to resources, supporting services, scheduling milestones for delivery and acceptance.

- Verification of Test Plan and Design – Test plans and designs are validated to ensure all activities are complete (this also includes test scripts). Test models are also verified to minimise the risks to the service.

- Preparation of the Test environment – Prepare and make a baseline of the test environment.

- Testing – Tests are carried out using manual or automated testing techniques and procedures. All results are registered.

- Evaluate Exit Criteria and Report – Actual results are compared with projected results.

- Clean up and Closure – Ensure the test environment is cleaned. Learn from previous experiences and identify areas for improvement.

What is Validation Testing?

Validation testing is the process of ensuring that the developed and tested software satisfies the needs of the client or user. The business requirement logic and scenarios have to be tested in detail, and all the critical functionalities of an application must be covered.

As a tester, it is always important to know how to verify the business logic or scenarios that are given to you. One method that helps in the detailed evaluation of functionalities is the validation process.

Performing a validation test carries great responsibility, because you need to test all the critical business requirements based on the user needs. Not a single requirement asked for by the user should be missed. Hence a sound knowledge of validation testing is important.

Purpose of Service Validation and Testing Process (SVT)

The purpose of the Service Validation and Testing process is to:

- Ensure that a new or changed IT service matches its design specification and will meet the needs of the business.

Objectives of Service Validation and Testing

- Plan and implement a structured validation and test process that provides objective evidence that the new or changed service will support the customer’s business and stakeholder requirements, including the agreed service levels

- Quality assure a release, its constituent service components, the resultant service and service capability delivered by a release

- Identify, assess and address issues, errors, and risks throughout Service Transition.

- Provide confidence that a release will create a new or changed service or service offerings that deliver the expected outcomes and value for the customers within the projected costs, capacity and constraints

- Validate that the service is ‘fit for purpose’ – it will deliver the required performance with desired constraints removed

- Assure a service is ‘fit for use’ – it meets certain specifications under the specified terms and conditions of use

- Confirm that the customer and stakeholder requirements for the new or changed service are correctly defined and remedy any errors or variances early in the service lifecycle as this is considerably cheaper than fixing errors in production.

Principles of Service Validation and Testing

The typical policy statements of service validation and testing include the following.

- All the tests for service validation must be designed and carried out by the people who haven’t been involved in the design and development activities for the service.

- The criteria for passing/failing of the test should be documented in an SDP in advance before the start of any testing.

- Each test environment should be restored to an earlier known state before starting the test.

- Service validation and testing need to create, catalog and maintain a library of test models, test cases, test data and test scripts which can be reused.

- A risk-based testing approach should be adopted to reduce the risk to the service and customer’s business.
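As a rough illustration of the risk-based approach in the last policy statement, the sketch below orders candidate tests by a simple risk score. The `CandidateTest` class, the 1 to 5 scales and the likelihood × impact formula are assumptions made for this example, not something ITIL prescribes.

```python
from dataclasses import dataclass


@dataclass
class CandidateTest:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)

    @property
    def risk(self) -> int:
        # Illustrative score only: likelihood multiplied by impact.
        return self.likelihood * self.impact


def prioritise(cases):
    """Return the cases with the highest risk first (ties keep their order)."""
    return sorted(cases, key=lambda case: case.risk, reverse=True)


cases = [
    CandidateTest("update profile picture", likelihood=2, impact=1),
    CandidateTest("process payment", likelihood=3, impact=5),
    CandidateTest("user login", likelihood=4, impact=4),
]
ordered = [case.name for case in prioritise(cases)]
print(ordered)
```

With limited time or budget, the tests at the front of `ordered` would be executed first, so the riskiest parts of the service are covered even if testing is cut short.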

Important Terminologies & Definitions:

Service Level Package (SLP):

- A Service Level Package (SLP) is a defined level of Utility and Warranty for a particular Service Package.

- Each SLP is designed to meet the needs of a particular Pattern of Business Activity (PBA).

- SLPs are associated with a set of service levels, pricing policies, and a core service package.

Service Design Package (SDP):

- The Service Design Package (SDP) contains the core documentation of a service and is attached to its entry in the ITIL Service Portfolio.

- It is defined upon the Service Level Requirements and specifies the requirements from the viewpoint of the client.

- It also defines how these requirements would actually be fulfilled from a technical and organizational point of view.

- Service Design Package (SDP) is an important input for ITIL Service Validation and Testing.

Development/ Installation QA Documentation:

- This is a documentation of tests and quality assurance measures applied during the development or installation of services, systems and service components (e.g. component tests, code walk-through etc).

- Complete Development/Installation Quality Assurance (QA) Documentation ensures that the required QA measures were applied before a release component is handed over to Release Management.

Test Model:

- A Test Model is created during the Release planning phase to specify the testing approach to be used before deploying the Release into the live environment.

- It is an important input for the Project Plan. Most importantly, this document defines the required test scripts and the quality assurance checkpoints a service needs to pass during the Release deployment.

Process Activities of Service Validation and Testing

The process activities do not take place in a sequence, and several activities can take place in parallel. The activities in this process are:

- Planning and designing of tests

- Verifying the test plans and test designs

- Preparing the test environment

- Performing the tests

- Evaluating the exit criteria and report

- Test clean up and closure

Scope of the Service Validation and Testing Process

The service provider takes responsibility for delivering, operating and/or maintaining customer or service assets at specified levels of warranty, under a service agreement.

The scope of the Service Validation and Testing process is as follows:

- Service Validation and Testing can be applied throughout the service lifecycle to assure any aspect of the service and the service providers’ capability, resources and capacity to deliver a service and/or service release successfully.

- In order to validate and test an end-to-end service the interfaces to suppliers, customers and partners are important. Service provider interface definitions define the boundaries of the service to be tested, e.g., process interfaces and organizational interfaces.

- Testing is equally applicable to in-house or developed services, hardware, software or knowledge-based services. It includes the testing of new or changed services or service components and examines the behavior of these in the target business unit, service unit, deployment group or environment. This environment could have aspects outside the control of the service provider, e.g., public networks, user skill levels or customer assets.

- Testing directly supports the release and deployment process by ensuring that appropriate levels of testing are performed during the release, build and deployment activities.

- It evaluates the detailed service models to ensure that they are fit for purpose and fit for use before being authorized to enter Service Operations, through the service catalog. The output from testing is used by the evaluation process to provide the information on whether the service is independently judged to be delivering the service performance with an acceptable risk profile.

Value to Business of Service Validation and Testing Process

Service failures can harm the service provider’s business and the customer’s assets and result in outcomes such as loss of reputation, loss of money, loss of time, injury and death.

The key value to the business and customers from Service Testing and Validation is in terms of the established degree of confidence that a new or changed service will deliver the value and outcomes required of it and understanding the risks.

Successful testing depends on all parties understanding that it cannot give, indeed should not give, any guarantees but provides a measured degree of confidence. The required degree of confidence varies depending on the customer’s business requirements and pressures of an organization.

Service Validation and Testing Policies

Policies that drive and support Service Validation and Testing include service quality policy, risk policy, Service Transition policy, release policy and Change Management policy. Let us discuss the policies below:

Service quality policy

Senior leadership will define the meaning of service quality. Service Strategy discusses the quality perspectives that a service provider needs to consider.

In addition to service level metrics, service quality takes into account the positive impact of the service (utility) and the certainty of impact (warranty).

The Service Strategy publication outlines four quality perspectives:

- Level of excellence

- Value for money

- Conformance to specification

- Meeting or exceeding expectations

One or more, if not all four, perspectives are usually required to guide the measurement and control of Service Management processes. The dominant perspective will influence how services are measured and controlled, which in turn will influence how services are designed and operated. Understanding the quality perspective will influence the Service Design and the approach to validation and testing.

Risk policy

Different customer segments, organizations, business units and service units have different attitudes to risk. Where an organization is an enthusiastic taker of business risk, testing will be looking to establish a lower degree of confidence than a safety critical or regulated organization might seek.

The risk policy will influence control required through Service Transition including the degree and level of validation and testing of service level requirements, utility and warranty, i.e., availability risks, security risks, continuity risks and capacity risks.

Service Transition policy

The Service Transition policy is defined, documented and approved by the management team, who ensure it is communicated across the organization and to relevant suppliers and partners.

Release policy

The type and frequency of releases will influence the testing approach. Frequent releases such as once-a-day drive requirements for re-usable test models and automated testing.

Change Management policy

The use of change windows can influence the testing that needs to be considered. For example, if there is a policy of ‘substituting’ a release package late in the change schedule or if the scheduled release package is delayed then additional testing may be required to test this combination if there are dependencies.

Service Validation and Testing Activities:

There are a number of activities that are performed under the ITIL Service Validation and Testing, these include:

- Validation and Test Management – This consists of planning, managing, controlling, and reporting on the activities that have been carried out to ensure the quality/fitness of the release.

- Planning and Design – The test planning & design activities take place early on within the Service Lifecycle. These activities are related to resources, supporting services, and scheduling milestones for delivery & acceptance.

- Verification of Test Plan and Design – Ensures that all test plans and designs (test models) are validated to ensure their completeness. Test models are also verified in order to minimize the risks to the service.

- Preparation of the Test Environment – This refers to preparing the test environment and establishing its baseline.

- Testing – Tests are carried out thoroughly using manual or automated testing techniques and procedures. All the results from the testing are documented in the test register.

- Evaluate Exit Criteria and Report – Here the documented records of the test register are compared with the projected/expected results.

- Clean up and Closure – This ensures that the test environments are reset to their default environment after completion of validation and testing procedures. It also suggests documenting all the knowledge learned throughout the process for future reference and improvement.
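The "Evaluate Exit Criteria and Report" activity can be pictured as a comparison between the test register and the expected results. The following is a minimal sketch under stated assumptions: the function name, the dictionary layout and the pass-rate threshold are hypothetical and only show the idea of the comparison.

```python
def evaluate_exit_criteria(register, expected, min_pass_rate=1.0):
    """Compare recorded results with expected results and report the outcome.

    register: test id -> actual result recorded during testing
    expected: test id -> projected result
    A test with no entry in the register is counted as a failure.
    """
    if not expected:
        raise ValueError("no expected results to compare against")
    failures = [test_id for test_id, wanted in expected.items()
                if register.get(test_id) != wanted]
    pass_rate = 1 - len(failures) / len(expected)
    return {
        "pass_rate": pass_rate,
        "failures": failures,
        "exit_met": pass_rate >= min_pass_rate,
    }


expected = {
    "TC-01": "login accepted",
    "TC-02": "login rejected",
    "TC-03": "account locked",
    "TC-04": "password reset mail sent",
}
register = {
    "TC-01": "login accepted",
    "TC-02": "login rejected",
    "TC-03": "login accepted",  # deviation from the projected result
    "TC-04": "password reset mail sent",
}
report = evaluate_exit_criteria(register, expected)
print(report)
```

Here one of four tests deviates, so the exit criterion (an assumed 100% pass rate) is not met and the deviation would be reported before closure.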

Challenges of Service Validation and Testing

The challenges faced are:

- A lack of respect and understanding for the testing role

- A lack of available funding for the testing process

Risks of Service Validation and Testing

The following risks are involved in service validation and testing:

- Objectives and expectations can be unclear at times

- There can be a lack of understanding of the risks involved, which results in testing that is not targeted at critical elements.

- Shortage of resources can introduce delays and have an impact on other service transitions.

Service Validation and testing thus delivers the expected outcomes and the optimum value for the customers. By validating that a process is ‘fit,’ it ensures that the necessary utilities are delivered. An additional benefit of service validation and testing is its ability to identify, analyze and solve the issues, problems, and risks which occur throughout the service transition process.

Service Validation and Testing Benefits:

- Ensures the quality of the deployed or developed Service meets the customer expectation.

- Reduces Service related incidents through thorough testing.

- Ensures that the Service Desk Team has adequate knowledge to understand the service related issue.

- Reduces the efforts of troubleshooting of Service related problems in the live environment.

- Indirectly reduces costs by reducing the bugs before service is implemented.

- Ensures that users can use the service easily, hence increasing the intrinsic value of services.

Difference Between Verification and Validation

Let us understand these with an example in a simple way.

Example:

Client Requirement:

The proposed injection should not measure more than 2 cm.

Verification Test:

- Check that the design specifies an injection measuring no more than 2 cm, using checklists, reviews, and design inspection.

Validation Test:

- Check that the actual injection does not measure more than 2 cm, using manual or automated testing.

- Check each possible scenario pertaining to the measurement of the injection by using any suitable method of testing (functional and non-functional methods).

- Check measurements below, at, and above 2 cm.
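The validation checks around the limit of 2 can be sketched as a small boundary value test. The function name `meets_requirement` and the chosen sample values are assumptions for illustration only.

```python
LIMIT = 2.0  # the limit stated in the client requirement


def meets_requirement(measurement: float) -> bool:
    """Return True when the measurement does not exceed the limit."""
    return measurement <= LIMIT


# Boundary values: just below, exactly at, and just above the limit.
checks = {1.9: True, 2.0: True, 2.1: False}

for value, expected in checks.items():
    outcome = "pass" if meets_requirement(value) == expected else "FAIL"
    print(f"measurement {value}: {outcome}")
```

Testing exactly at the limit matters because "not above 2" still allows a value of 2, which is where off-by-one style mistakes tend to hide.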

| Verification | Validation |
| --- | --- |
| The process just checks the design, code and program. | It should evaluate the entire product including the code. |
| Reviews, walkthroughs, inspections, and desk-checking are involved. | Functional and non-functional methods of testing are involved. In-depth check of the product is done. |
| It checks the software against the specification. | It checks if the software meets the user needs. |

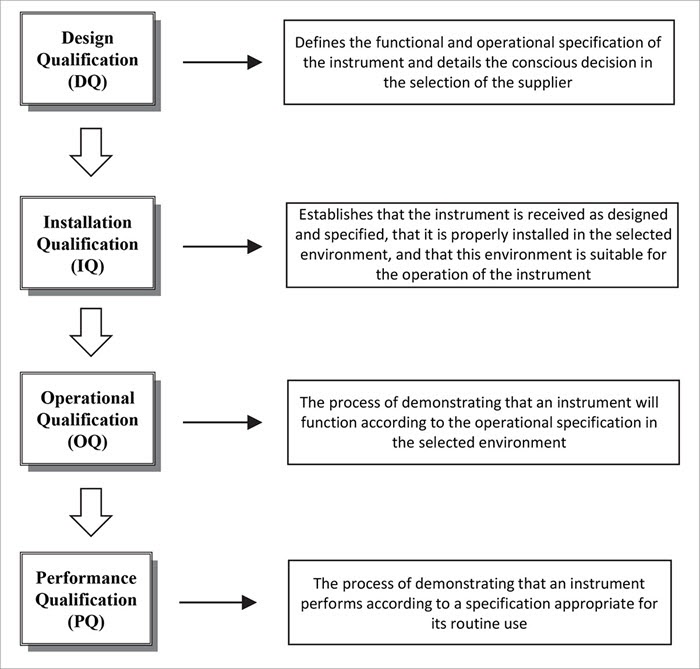

Stages Involved

- Design Qualification: This includes creating the test plan based on the business requirements. All the specifications need to be mentioned clearly.

- Installation Qualification: This includes software installation based on the requirements.

- Operational Qualification: This includes the testing phase based on the User Requirements Specification.

This may include Functionality testing:

- Unit Testing – Black box, White box, Gray box.

- Integration Testing – Top-down, Bottom-up, Big bang.

- System Testing – Sanity, Smoke, and Regression Testing.

- Performance Qualification: UAT(User Acceptance testing) – Alpha and Beta testing.

- Production

Design Qualification

Design qualification simply means that you have to prepare the design of the software in such a way so that it meets the user specifications. Primarily you need to get the User Requirements Specification (URS) document from the client to proceed with the design.

Test Strategy:

This document forms the base for preparing the test plan. It is usually prepared by the team lead or manager of the project. It describes how we are going to proceed to test and achieve the desired target.

To incorporate all the procedures, a proper plan should be designed and approved by the stakeholders. The components of the test plan are described below.

In a few projects, test plan and test strategy can be incorporated as a single document. Separate strategy documents are also prepared for a complex project (mostly in automation technique).

Components of the Validation Test Plan:

- Description of the project

- Understanding the requirements

- Scope of testing

- Testing levels and test schedule

- Run plan creation

- Hardware-software and staffing requirements

- Roles and responsibilities

- Assumption and dependencies

- Risks and mitigation

- Report and Metrics

Description of the Project:

Here you need to describe the application given to you for testing. The description should include all the functionalities of the app.

Understanding the Requirements:

Upon getting the URS, you need to state the requirements as you have understood them. You can also raise clarifications, if any. This serves as the base, or test criteria, for testing.

Scope of Testing:

The scope must include the modules in detail along with the features at a high level. You need to tell the client which requirements you will cover in your testing.

From a business perspective, you may be asked to perform validation testing only for the critical requirements of an application. The scope simply states what will be covered and what will not.

Testing Levels and Test Schedule:

You need to mention how many rounds of testing have to be conducted. The overall effort for the testing project is estimated using standard estimation techniques such as Test Case Point (TCP) estimation.

As the name implies, the test schedule describes when the testing will be carried out. It should also say how and when the approvals and reviews will be conducted.

Example:

Design of a webpage is the project considered.

Testing levels include:

- Level 1: Smoke testing

- Level 2: Unit Testing

- Level 3: Integration testing

- Level 4: System testing

- Level 5: Acceptance testing

Test Schedule:

- 1. Plan submission – Day 1

- 2. Design of Test Cases – Day 2

- 3. Dry run and bug fixing – Day 4

- 4. Review – Day 5

- 5. Formal Run – Day 6

- 6. Deliverables sent for approval – Day 8

- 7. Reports – Day 10

Run plan creation:

The run plan records the number of runs required for testing. Every run you perform offsite will be noted by the onsite team.

For example, when you use the HP Quick Test Professional tool for execution, the number of runs will be shown in the Runs tab of the test plan.

Hardware-software and staffing requirements:

- Hardware and software requirements cover the devices, browser versions, iOS, and testing tools required for the project.

- Staffing means appointing the people required for testing. You can mention the team size here.

- If you need extra testing members, you can request them from the onsite team, depending on the testing scope. Simply put, as the number of test cases increases, more team members are needed to execute them.

Roles and Responsibilities:

This means assigning tasks to the roles responsible for carrying out the various levels of testing.

For example, an app needs to be tested by a team of 4 members executing 4 validation protocols. You can delegate the responsibilities as follows:

- 1. Test Lead: Design of test plan

- 2. Team member 1: Design & execution of protocols 1, 2.

- 3. Team member 2: Design & execution of protocols 3, 4.

- 4. Team member 3: Preparation of reports, review, and metrics.

Assumption and Dependencies:

The assumptions made during design and the dependencies identified for testing are included here.

Risks and Mitigation:

Risks related to test planning, such as the availability of the desired environments and builds, are listed here along with mitigation and contingency plans.

Report and Metrics:

The factors (metrics) used for testing and the reports to be sent to the stakeholders have to be mentioned here.

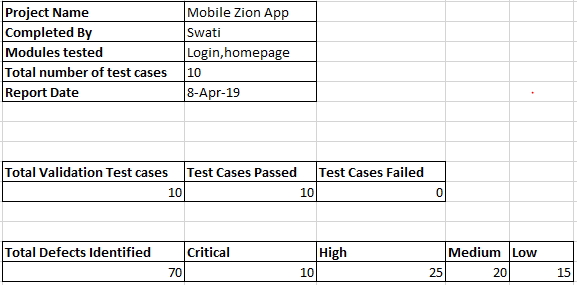

An Example of a mobile app is provided below:

Installation Qualification

- Installation qualification contains details like which and how many test environments would be used, what access level is required for the testers in each environment along with the test data required. It may include browser compatibility, tools required for execution, devices required for testing, etc. The system being developed should be installed in accordance with user requirements.

- Test data may be required for testing some applications, and it must be provided by the person responsible for it. It is a vital prerequisite.

- Some applications may require a database. We have to keep all data required for testing ready in a database to validate the specifications.

For Example,

A new app, say “abc”, has to be tested on mobile (Android 4.3.1) and in a browser (Chrome 54). In such a case, we have to keep track of the following:

- Check that proper authorization has been given to access the site of the app “abc”.

- See that the devices and browsers used for testing the app, such as mobile (Android/iOS), Chrome and Internet Explorer, are available in the required versions.

- Check that they are installed correctly with the specified versions (e.g. Chrome 54, Android version 4.3.1).

- Make sure that the app is accessible both in the browser and on mobile.
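The version checks in this example could be automated along the following lines. This is only a sketch: the `REQUIRED` table mirrors the versions named above, while `check_installation` and the hard-coded `environment` dictionary are hypothetical stand-ins for whatever inventory a real test lab provides.

```python
# Versions required by the example above.
REQUIRED = {"android": "4.3.1", "chrome": "54"}


def check_installation(installed):
    """Return a list of problems; an empty list means the environment qualifies."""
    problems = []
    for component, wanted in REQUIRED.items():
        found = installed.get(component)
        if found is None:
            problems.append(f"{component} is not installed")
        elif found != wanted:
            problems.append(f"{component} is {found}, expected {wanted}")
    return problems


# Hypothetical inventory of the test environment.
environment = {"android": "4.3.1", "chrome": "55"}
problems = check_installation(environment)
print(problems or "environment qualifies")
```

Any reported problem would be resolved before Operational Qualification starts, so that later failures can be attributed to the application rather than to the environment.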

Operational Qualification

Operational qualification ensures that every module and sub-module designed for the application under test functions properly as it is expected to in the desired environment.

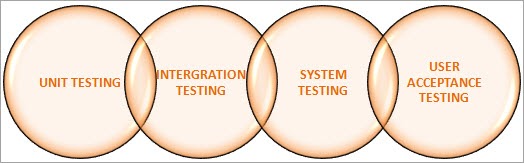

Validation testing, in general, is carried out in the following hierarchy.

Functional testing plays a major role in validation testing. It simply means that you have to validate the functionality of the application by each and every critical requirement mentioned. This paves the way to map the requirements mentioned in the Functional Specification document and ensures that the product meets all the requirements mentioned.

Functional Testing and its Types

As the name suggests, functional testing is testing the functions i.e. what the software has to do. The functionalities of the software will be defined in the requirement specification document.

Let’s take a quick look at its types.

1) Unit Testing:

Unit testing is testing the individual units/modules/components/methods of the given system. The field validation, layout control, design, etc., are tested with different inputs after coding. Each line of the code should be validated to the individual unit test cases.

Unit testing is done by the developers themselves. The cost of fixing bugs is less here when compared to the other levels of testing.

Example:

Evaluating a loop in the code of a function, say one that handles a gender choice, is an example of unit testing.
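A unit test for such a function might look like the sketch below. The function `parse_gender_choice` and its list of valid choices are invented for illustration; the point is that a single unit is exercised in isolation with both valid and invalid inputs.

```python
VALID_CHOICES = ("female", "male", "other")  # assumed set of options


def parse_gender_choice(raw: str) -> str:
    """Normalise a user's input and reject anything outside the valid choices."""
    cleaned = raw.strip().lower()
    if cleaned not in VALID_CHOICES:
        raise ValueError(f"unsupported choice: {raw!r}")
    return cleaned


def test_accepts_each_valid_choice():
    # Loop over every valid option, with extra whitespace and capitals.
    for choice in VALID_CHOICES:
        assert parse_gender_choice(f"  {choice.upper()} ") == choice


def test_rejects_unknown_choice():
    try:
        parse_gender_choice("unknown")
    except ValueError:
        return
    raise AssertionError("expected ValueError for an unknown choice")


if __name__ == "__main__":
    test_accepts_each_valid_choice()
    test_rejects_unknown_choice()
    print("all unit tests passed")
```

The test functions follow the `test_` naming convention, so a test runner such as pytest can discover them, and they can also be run directly as a script.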

2) Black Box Testing:

Testing the behavior of an application for the desired functionalities against the requirements, without focusing on the internal details of the system, is called black box testing. It is usually performed by an independent testing team or the end users of the application.

The application is tested with relevant inputs and is tested to validate if the system behaves as desired. This can be used to test both the functional as well as non-functional requirements.

3) White Box Testing:

White box testing is nothing but a detailed checking of the program code by code. The entire working of the application depends on the code written, hence it is necessary to test the code very carefully. You need to check every unit and its integration as a whole module in a step by step way.

A tester with programming knowledge is a must here. White box testing clearly reveals any deviation in the workflow of the application. It is useful for both developers and testers.

4) Gray Box Testing:

Gray box testing is a combination of both white box and black box testing. Partial knowledge about the structure or the code of the unit to be tested is known here.

Integration Testing and its Types

The individual components of the software that are already tested in unit testing are integrated and tested together to test their functionalities as a whole, in order to ensure data flow across the modules.

This is done by the developers themselves or by an independent testing team. This can be done after two or more units are tested.

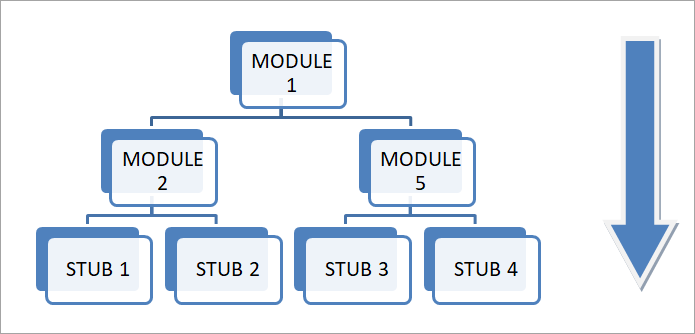

Top-down Approach:

In this approach, the top units are tested first, and then the lower level units are tested one by one, stepwise. Test stubs are used to simulate the lower level units that may not be available during the initial phases.
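A minimal sketch of the top-down idea follows, assuming a hypothetical checkout module that depends on a payment gateway which is not built yet. All class and method names are invented for the example.

```python
class PaymentGatewayStub:
    """Stands in for a lower level unit that is not available yet."""

    def charge(self, amount):
        # Canned answer: the stub contains no real payment logic.
        return {"status": "approved", "amount": amount}


class CheckoutService:
    """The top level unit under test."""

    def __init__(self, gateway):
        self.gateway = gateway

    def place_order(self, amount):
        if amount <= 0:
            raise ValueError("amount must be positive")
        receipt = self.gateway.charge(amount)
        return receipt["status"] == "approved"


checkout = CheckoutService(PaymentGatewayStub())
order_accepted = checkout.place_order(25)
print(order_accepted)
```

Once the real gateway exists, it replaces the stub in the constructor call and the same test is repeated one level further down.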

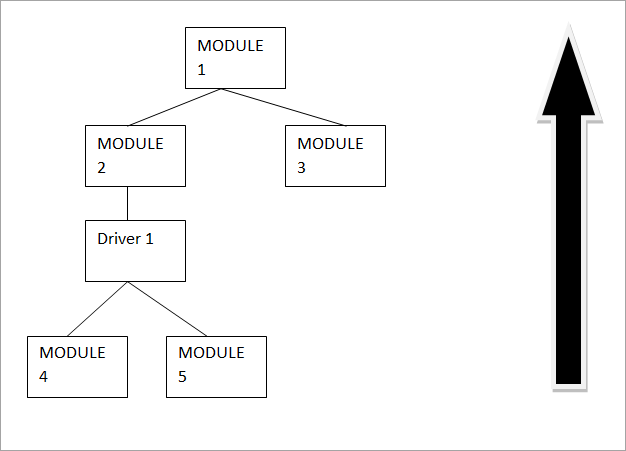

Bottom-up Approach:

In this approach, the bottom units are tested first and integrated, and then the higher level units are tested. Test drivers are used to simulate the higher level units that may not be available during the initial phases.
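For the bottom-up case, the stand-in plays the opposite role: it calls the finished low level unit in place of the missing higher level caller (such a stand-in is commonly called a test driver). The sketch below assumes a hypothetical `apply_discount` unit; the names and test values are illustrative only.

```python
def apply_discount(price, percent):
    """Low level unit that is already implemented and ready for testing."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (100 - percent) / 100, 2)


def driver():
    """Calls the low level unit the way the missing higher level unit would."""
    cases = [
        (200, 10, 180.0),
        (50, 0, 50.0),
        (80, 100, 0.0),
    ]
    return [
        (price, percent, apply_discount(price, percent) == expected)
        for price, percent, expected in cases
    ]


results = driver()
for price, percent, ok in results:
    print(f"price={price} percent={percent}: {'pass' if ok else 'FAIL'}")
```

When the real higher level unit is ready, the driver is discarded and integration continues one level up.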

System Testing and its Types

Testing the complete system/software is called system testing. The system is tested completely against the functional requirement specifications. System testing is done against both the functional and non-functional requirements. Black box testing is generally preferred for this type of testing.

1) Smoke Testing:

When the developers first hand over a build for testing, we check that its critical functions work before going any further. This is called smoke testing. We need to state whether the build is fit for further testing or not.

In order to perform validation, you need a proper build, so smoke testing is the first thing the testing team does. The workflow of the application can be checked either with or without test cases. A test case covering the entire flow is helpful for this testing.
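The accept-or-reject decision of a smoke test can be sketched as follows. The check names and the lambda functions are placeholders for real probes of a build, and the third check deliberately simulates a crash.

```python
def smoke_test(checks):
    """Run each critical check once; the build is accepted only if all succeed."""
    failed = []
    for name, check in checks.items():
        try:
            ok = bool(check())
        except Exception:
            ok = False  # a crashing check counts as a failure
        if not ok:
            failed.append(name)
    return {"build_accepted": not failed, "failed": failed}


# Placeholder checks; real ones would exercise the delivered build.
checks = {
    "application starts": lambda: True,
    "login page loads": lambda: True,
    "database reachable": lambda: 1 / 0,  # simulated crash
}
result = smoke_test(checks)
print(result)
```

A rejected build goes back to the development team, and detailed validation starts only after the smoke test passes.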

2) Sanity Testing:

In sanity testing, the main functionalities of the modules of the application under test are tested. For example, when testing a website such as IRCTC that has tabs for profile creation, education, login, etc., the main functionalities of all these tabs have to be checked without going very deep.

The menus, sub-menus and tabs have to be tested in all modules. It is a subset of regression testing, as only the main flow is tested and not in depth.

3) Regression Testing:

For every release of the project, the development team may introduce certain changes. Validating that the newly introduced changes have not affected the existing working flow of the system is called regression testing. Only the test cases pertaining to the areas affected by the changes have to be re-run here.
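Selecting which test cases to re-run for a release can be sketched as a lookup from changed modules to the tests that touch them. The `TEST_COVERAGE` mapping and the module names are hypothetical; in practice this information would come from a traceability matrix or a coverage tool.

```python
# Hypothetical mapping: test case -> modules it exercises.
TEST_COVERAGE = {
    "test_login": {"auth"},
    "test_profile_update": {"profile", "auth"},
    "test_search": {"catalog"},
}


def select_regression_tests(changed_modules, coverage=TEST_COVERAGE):
    """Return the names of tests that exercise at least one changed module."""
    changed = set(changed_modules)
    return sorted(name for name, modules in coverage.items() if modules & changed)


selected = select_regression_tests(["auth"])
print(selected)
```

A change to the `auth` module selects both tests that depend on it, while the unrelated search test is left out of the regression run.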

Performance Qualification

UAT (User Acceptance Testing):

This is the last phase of testing, done to ensure that the system behaves as required by the specified requirements. It is done by the client. Once the client certifies and clears the system, the product can go for deployment.

Alpha and Beta Testing:

Alpha testing is done by the developers on the application before release at the software development site. It involves black and white box testing. Beta testing is done at the customer side after the product is developed and deployed.

Sample Validation Test Cases or Protocol

Based on my experience, I have written this protocol for Gmail login.

An in-depth check of the login functionality is what validation actually is. Note, however, that the style of the columns and sentences used may differ completely depending on the client requirements.

Conclusion

Validation is all about analyzing the functionalities of a product in detail. As a validation tester, you must always remember to report deviations as soon as they are found in order to obtain optimum results in testing.

Every test case that is written should be sharp, concise and understandable even to a layperson. A validation tester should ensure that the right product is being developed against the specified requirements.

As a guide for validation testing, I have covered the process associated with validation: Design Qualification, which involves the validation plan; Installation Qualification, which covers the hardware and software installation; Operational Qualification, which involves the entire system testing; and Performance Qualification, which involves the user acceptance testing that provides the authorization for production.
