Perceptron Tutorial

Last updated on 25th Sep 2020, Blog, Tutorials

In machine learning, the perceptron is an algorithm for supervised learning of binary classifiers. It is a type of linear classifier, i.e. a classification algorithm that makes all of its predictions based on a linear predictor function combining a set of weights with the feature vector.

A linear classifier separates the training data into categories using a linear decision boundary (a straight line, plane, or hyperplane). If we classify into two categories, every training example must fall into one of those two categories.

A binary classifier is one that distinguishes between exactly two categories.

The basic perceptron algorithm is used for binary classification, so every training example must belong to one of the two categories. The perceptron is modeled on the neuron, the basic processing unit of the brain.

What is an Artificial Neuron?

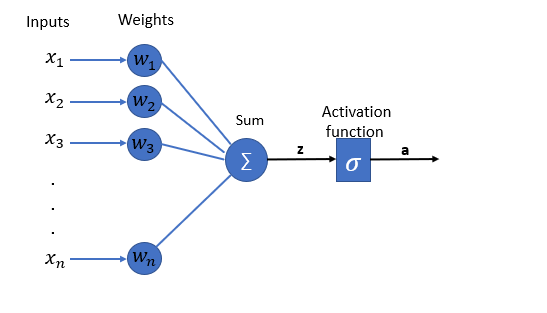

To solve the problems around us, we often look for solutions by studying how nature works. This approach is known as biomimicry. In the same way, to work like the human brain, people developed artificial neurons that behave similarly to the biological neurons in a human being. An artificial neuron is a mathematical function that takes inputs and weights, combines them, and passes the result through an activation function to produce an output.

Perceptron Learning Algorithm

The perceptron algorithm is used for classification in supervised machine learning. Classification can be linear or non-linear. If we can separate the classes in a data set by drawing a single straight line (a hyperplane in higher dimensions), the data set is linearly separable and a linear binary classifier can handle it. If no straight line can separate the classes, we need a non-linear classifier.
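To make the distinction concrete, here is a small Python sketch that tests whether one particular line, w1*x1 + w2*x2 + b = 0, separates a labeled two-dimensional data set. The function name `separates` and the AND/XOR example data are illustrative assumptions, not part of the original text. The line x1 + x2 - 1.5 = 0 separates the AND data. XOR is a standard example of data that no single line can separate; the code only shows that this particular line fails on it.

```python
# A minimal sketch: does the line w1*x1 + w2*x2 + b = 0 separate two classes?
# The AND/XOR data and the chosen line are hypothetical examples.

def separates(points, labels, w1, w2, b):
    """Return True if every point labeled 1 lies strictly on the positive side
    of the line and every point labeled 0 lies strictly on the negative side."""
    for (x1, x2), label in zip(points, labels):
        side = w1 * x1 + w2 * x2 + b
        if label == 1 and side <= 0:
            return False
        if label == 0 and side >= 0:
            return False
    return True

points = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_labels = [0, 0, 0, 1]  # logical AND
xor_labels = [0, 1, 1, 0]  # logical XOR

print(separates(points, and_labels, 1, 1, -1.5))
print(separates(points, xor_labels, 1, 1, -1.5))
```

Only the point (1, 1) lies on the positive side of x1 + x2 - 1.5 = 0, which matches AND but not XOR.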

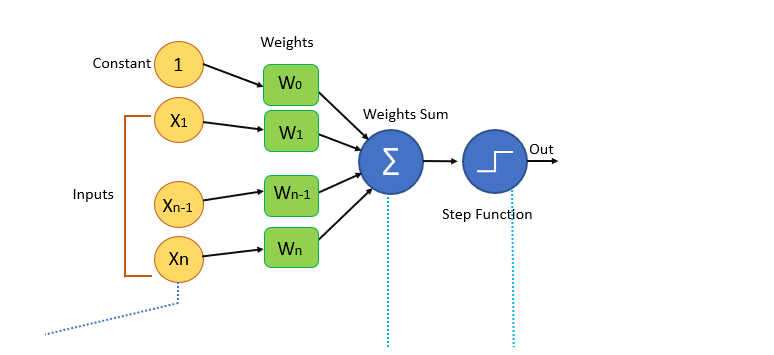

Perceptron Algorithm Block Diagram

Let us see the terminology of the above diagram.

1. Input: All the features of the data we want to train the neural network on are passed to it as input, as a set of features [X1, X2, X3, …, Xn], where n is the total number of features and each Xi is the value of one feature.

2. Weights: Initially, the weights are set to random values, and these values are updated automatically after each training error, so their final values are learned during training. Weights are also sometimes called weight coefficients.

3. Weighted Sum: Each input value is first multiplied by the weight assigned to it, and the sum of all these products is known as the weighted sum. It also includes the weight W0 multiplied by a constant input of 1:

$$\text{Weighted sum} = \sum_{i=1}^{n} W_i X_i + W_0 \cdot 1$$
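The weighted sum formula translates directly into code. This Python sketch uses plain lists; the function name `weighted_sum` and the example numbers are illustrative assumptions.

```python
def weighted_sum(x, w, w0):
    """Compute sum_{i=1}^{n} w[i] * x[i] + w0 * 1.

    x  -- feature values X1..Xn
    w  -- weights W1..Wn (same length as x)
    w0 -- weight W0, multiplied by the constant input 1
    """
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    return sum(wi * xi for wi, xi in zip(w, x)) + w0 * 1

# Three features: 0.5*2 + (-1.0)*1 + 0.25*4 + 0.1*1 = 1.0 - 1.0 + 1.0 + 0.1 = 1.1
z = weighted_sum([2.0, 1.0, 4.0], [0.5, -1.0, 0.25], 0.1)
print(z)
```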

Step or Activation Function

The activation function applies a step rule that converts the numerical value to 0 or 1, which makes it easy to assign each example to a class. Depending on the type of output we need, we can change the activation function. If we want values between 0 and 1, we can use the sigmoid function, which also has a smooth gradient.

If we want values of +1 and -1, we can use the sign function. The hyperbolic tangent (tanh) function outputs values between -1 and 1 and is zero-centered, which makes multilayer neural networks easier to train. The ReLU function, max(0, x), is cheap to compute, but it outputs 0 for every negative input, so a neuron whose inputs stay negative passes back no gradient and stops learning. For positive inputs, it simply passes the value through unchanged.
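The activation functions named above can each be written in a few lines of Python. This is a sketch using only the standard library; how `step` and `sign` treat an input of exactly 0 is a convention chosen here, not something the text specifies.

```python
import math

def step(z):
    """Step rule: 1 if z >= 0, else 0 (threshold at 0 by convention)."""
    return 1 if z >= 0 else 0

def sigmoid(z):
    """Smooth output between 0 and 1; two branches avoid overflow in exp."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def sign(z):
    """+1 for z >= 0, -1 otherwise (z = 0 mapped to +1 by convention)."""
    return 1 if z >= 0 else -1

def tanh(z):
    """Zero-centered output between -1 and 1."""
    return math.tanh(z)

def relu(z):
    """max(0, z): positive values pass through, negative values become 0."""
    return max(0.0, z)

for z in (-2.0, 0.0, 2.0):
    print(z, step(z), round(sigmoid(z), 3), sign(z), round(tanh(z), 3), relu(z))
```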


Bias

Notice that the weighted sum includes an extra input with the constant value 1, paired with the weight W0. W0 moves the decision boundary away from the origin, which shifts the activation function left or right. Since we want this shift to be independent of the input features, W0 is multiplied by the constant 1 rather than by a feature, so the features do not affect it. This term is known as the bias.
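A one-input sketch shows what the bias does. With output step(w*x + b), the output flips from 0 to 1 where w*x + b = 0, that is, at x = -b/w. Without a bias the flip point is stuck at x = 0, whatever the weight; changing b slides it left or right. The function names and numbers here are illustrative assumptions.

```python
def step(z):
    return 1 if z >= 0 else 0

def neuron(x, w, b):
    """Single-input perceptron: step(w * x + b)."""
    return step(w * x + b)

def switch_point(w, b):
    """Input where w * x + b crosses 0, i.e. x = -b / w (w must be nonzero)."""
    return -b / w

w = 2.0
for b in (-1.0, -3.0, 2.0):
    # Each bias moves the point where the output switches from 0 to 1.
    print(f"b={b}: output switches from 0 to 1 at x={switch_point(w, b)}")
```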

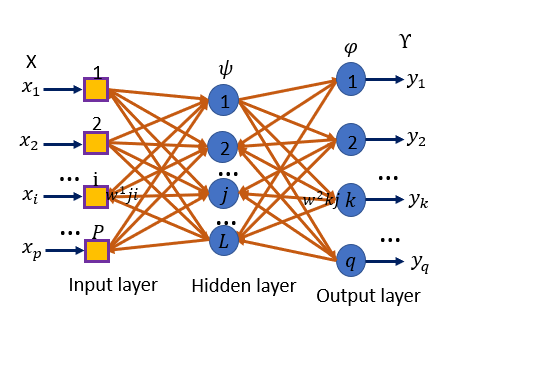

Perceptrons can be divided into two types: single-layer perceptrons and multilayer perceptrons. In a single-layer perceptron, the neurons are organized in one layer, whereas in a multilayer perceptron, groups of neurons are organized in multiple layers. Each neuron in the first layer takes the input signal and sends its response to the neurons in the second layer, and so on.

Single Layer Perceptron

Multi-Layer Perceptron

Perceptron Learning Steps

- 1. The features of the data we want to train on are passed as input to the perceptrons in the first layer.

- 2. Each input is multiplied by its weight (weight coefficient), and the products are added together.

- 3. The bias value is added to move the decision boundary away from the origin.

- 4. The computed value is fed to the activation function, chosen based on the requirement; for a simple perceptron, the activation function is the step function.

- 5. The value returned by the activation function is the output.
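The five steps can be traced in a short Python sketch. Here they are applied layer by layer in a tiny two-layer network whose weights were picked by hand (an illustrative assumption, not trained values) so that it computes XOR, a function that a single straight line cannot separate. The names `layer_forward` and `xor_network` are hypothetical.

```python
def step(z):
    return 1 if z >= 0 else 0

def layer_forward(inputs, weights, biases):
    """Apply steps 2-5 to every perceptron in one layer.

    weights[j] holds the weights of perceptron j; biases[j] is its bias.
    """
    outputs = []
    for w_j, b_j in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_j, inputs))  # step 2: weighted sum
        z += b_j                                      # step 3: add the bias
        outputs.append(step(z))                       # steps 4-5: activation gives the output
    return outputs

# Hand-picked weights for a 2-2-1 network computing XOR:
# hidden unit 1 behaves like OR, hidden unit 2 like AND,
# and the output fires when OR is true but AND is not.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [-0.5, -1.5]
OUTPUT_W = [[1.0, -1.0]]
OUTPUT_B = [-0.5]

def xor_network(x1, x2):
    hidden = layer_forward([x1, x2], HIDDEN_W, HIDDEN_B)  # step 1: features enter the first layer
    return layer_forward(hidden, OUTPUT_W, OUTPUT_B)[0]

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

The hidden layer's outputs become the inputs of the next layer, which is how responses flow from one layer to the next in a multilayer perceptron.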

Adding further features to the perceptron leads to deep neural networks, and backpropagation is the most important of these features.

Back Propagation

After the first forward pass (based on the input and the randomly initialized weights), the error is calculated, and the backpropagation algorithm performs iterative backward passes to find the weight values that minimize the error. To do this, backpropagation calculates the partial derivative of the error function with respect to each neuron's weights, working backward through the network with the chain rule. This traces the total error back to each specific weight and shows how much that weight contributes to the error.
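Backpropagation in a full network needs more bookkeeping, but its core loop (forward pass, error, partial derivatives via the chain rule, weight update) already appears for a single neuron. The sketch below is a simplified illustration, not a full backpropagation implementation: it assumes a squared-error function, the logical AND data set, zero initial weights, and a learning rate of 1.0, all chosen for illustration. It uses a sigmoid instead of the step function because the step function's derivative is zero almost everywhere and so provides no gradient.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical training data: logical AND.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 0.0), ((1.0, 0.0), 0.0), ((1.0, 1.0), 1.0)]

def total_error(w1, w2, b):
    """E = sum over examples of 0.5 * (y - t)^2."""
    return sum(0.5 * (sigmoid(w1 * x1 + w2 * x2 + b) - t) ** 2
               for (x1, x2), t in DATA)

def train(epochs=10000, lr=1.0):
    w1 = w2 = b = 0.0
    for _ in range(epochs):
        g1 = g2 = gb = 0.0
        for (x1, x2), t in DATA:
            y = sigmoid(w1 * x1 + w2 * x2 + b)      # forward pass
            # Chain rule: dE/dz = dE/dy * dy/dz = (y - t) * y * (1 - y)
            delta = (y - t) * y * (1.0 - y)
            g1 += delta * x1                          # dE/dw1 = delta * x1
            g2 += delta * x2                          # dE/dw2 = delta * x2
            gb += delta                               # dE/db  = delta * 1
        # Move each weight against its accumulated gradient.
        w1 -= lr * g1
        w2 -= lr * g2
        b -= lr * gb
    return w1, w2, b

w1, w2, b = train()
print("error before:", total_error(0.0, 0.0, 0.0))
print("error after: ", total_error(w1, w2, b))
```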

Basics of The Perceptron

The perceptron (or single-layer perceptron) is the simplest model of a neuron that illustrates how a neural network works. The perceptron is a machine learning algorithm developed in 1957 by Frank Rosenblatt and first implemented on an IBM 704.

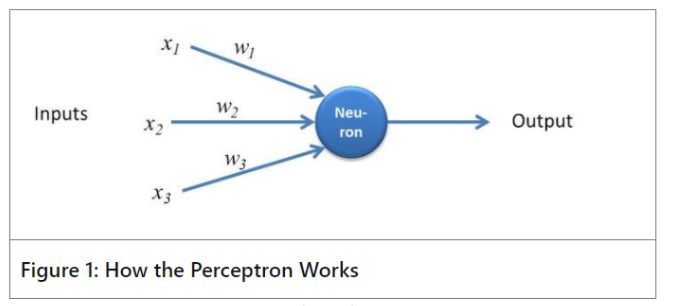

The perceptron is a network that takes a number of inputs, carries out some processing on those inputs, and produces an output, as shown in Figure 1.

How Does the Perceptron Work?

How the perceptron works is illustrated in Figure 1. In the example, the perceptron has three inputs x1, x2 and x3 and one output.

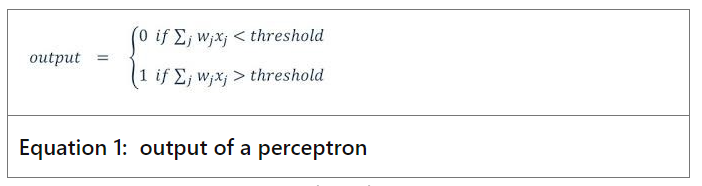

The importance of these inputs is determined by the corresponding weights w1, w2 and w3 assigned to them. The output can be 0 or 1, depending on the weighted sum of the inputs: it is 0 if the sum is below a certain threshold and 1 if it is above the threshold. The threshold is a real number and a parameter of the neuron. Since the output of the perceptron can only be 0 or 1, this perceptron is an example of a binary classifier.

This is shown below in Equation 1.

The Formula

Let’s write out the formula that combines the inputs and the weights to produce the output:

$$\text{Output} = w_1 x_1 + w_2 x_2 + w_3 x_3$$

This function is a simple one, and it remains the basic formula for the perceptron, but I want you to read the equation as

$$\text{Output depends on } w_1 x_1 + w_2 x_2 + w_3 x_3$$

The reason is that the output is not necessarily just the sum of these values: the sum is compared with a threshold, and a bias may also be added to the expression. In other words, we can think of a perceptron as a "judge who weighs up several pieces of evidence together with other rules and then makes a decision".
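The threshold rule and the bias are two ways of writing the same decision: w1x1 + w2x2 + w3x3 > threshold is equivalent to w1x1 + w2x2 + w3x3 + b > 0 with b = -threshold. This Python sketch checks that both forms agree; the weights and threshold are made-up numbers for the judge analogy, and the function names are hypothetical.

```python
def perceptron_threshold(x, w, threshold):
    """Output 1 if the weighted sum exceeds the threshold, else 0."""
    total = sum(wi * xi for wi, xi in zip(w, x))
    return 1 if total > threshold else 0

def perceptron_bias(x, w, b):
    """Same rule with the threshold moved to the left-hand side as a bias b = -threshold."""
    total = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if total > 0 else 0

# Hypothetical "judge" with three yes/no pieces of evidence x1, x2, x3;
# the weights say how much each piece of evidence matters.
w = [6.0, 2.0, 2.0]
threshold = 5.0

for x in ([1, 0, 0], [0, 1, 1], [1, 1, 0]):
    print(x, perceptron_threshold(x, w, threshold), perceptron_bias(x, w, -threshold))
```

With these numbers, the first piece of evidence alone is enough to decide "yes", while the other two together are not.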

Conclusion – Perceptron Learning Algorithm

When we talk about classification, a natural question is why not use k-nearest neighbors (KNN) or another simple classification algorithm. As data sets get more complicated, as in image recognition, general classification techniques become difficult to train, and in such cases perceptron-based networks are a good fit. Single-layer perceptrons can learn only linearly separable data sets; to train on more complex data sets, we need multilayer perceptrons.
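The limit of single-layer perceptrons can be checked with the classic perceptron learning rule associated with Rosenblatt's perceptron: whenever the prediction y differs from the target t, each weight wi moves by lr * (t - y) * xi and the bias by lr * (t - y). This Python sketch assumes zero initial weights, a learning rate of 1 and 20 epochs; the function name `train_perceptron` is hypothetical. On AND the number of mistakes drops to zero, while on XOR at least one example is misclassified in every epoch, because no single line separates XOR.

```python
def step(z):
    return 1 if z >= 0 else 0

def train_perceptron(data, epochs=20, lr=1.0):
    """Perceptron learning rule for two inputs:
    w_i <- w_i + lr * (t - y) * x_i and b <- b + lr * (t - y).
    Returns the weights, the bias, and the number of mistakes in the last epoch.
    """
    w = [0.0, 0.0]
    b = 0.0
    errors = 0
    for _ in range(epochs):
        errors = 0
        for x, t in data:
            y = step(w[0] * x[0] + w[1] * x[1] + b)
            if y != t:  # update only after a training error
                errors += 1
                w[0] += lr * (t - y) * x[0]
                w[1] += lr * (t - y) * x[1]
                b += lr * (t - y)
    return w, b, errors

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

print("AND mistakes in last epoch:", train_perceptron(AND)[2])
print("XOR mistakes in last epoch:", train_perceptron(XOR)[2])
```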

The activation function plays a major role in the perceptron. If learning is slow, or the gradients passed back differ hugely in size, we can try different activation functions.
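One reason the activation function affects learning speed is the size of its derivative, which backpropagation multiplies into every gradient. This Python sketch compares the sigmoid and ReLU derivatives; treating the ReLU derivative at exactly 0 as 0 is a convention chosen here.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    """Derivative of the sigmoid: s(z) * (1 - s(z)); never larger than 0.25."""
    s = sigmoid(z)
    return s * (1.0 - s)

def relu_grad(z):
    """Derivative of ReLU: 1 for z > 0, 0 otherwise (0 at z = 0 by convention)."""
    return 1.0 if z > 0 else 0.0

for z in (0.0, 2.0, 10.0):
    print(f"z={z:5}: sigmoid'={sigmoid_grad(z):.6f}  relu'={relu_grad(z)}")
```

The sigmoid derivative is at most 0.25 and becomes tiny for large inputs, so multiplying many of these factors across layers shrinks the gradient. ReLU's derivative stays at 1 for positive inputs, which is one common reason to try it when learning is slow.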
