TensorFlow Tutorial

Last updated on 25th Sep 2020, Blog, Machine Learning, Tutorials

Today, in this TensorFlow tutorial for beginners, we will discuss the core concepts of TensorFlow. We will start with the history and meaning of TensorFlow. Then we will learn about tensors and the uses of TensorFlow, along with TensorFlow examples, features, advantages, and limitations. Finally, we will look at TensorBoard in TensorFlow.

So, let's start the TensorFlow tutorial.

TensorFlow

TensorFlow is an open-source machine learning framework for all developers. It is used to implement machine learning and deep learning applications. The Google team created TensorFlow to develop and research new ideas in artificial intelligence. TensorFlow's main programming interface is in Python (its core runtime is written largely in C++), which is one reason it is considered an easy-to-understand framework.

TensorFlow is a software library, or framework, designed by the Google team to implement machine learning and deep learning concepts as simply as possible. It combines computational algebra with optimization techniques to make many mathematical expressions easy to compute.

TensorFlow History

TensorFlow's predecessor was DistBelief, a proprietary system based on deep learning neural networks that Google built in 2011. The DistBelief source code was reworked into a more robust, application-oriented library, which in 2015 came to be known as TensorFlow.

Why is TensorFlow So Popular?

TensorFlow is well documented and includes plenty of machine learning libraries. It offers many important functions and methods for building models.

TensorFlow is a Google product. It includes a variety of machine learning and deep learning algorithms. TensorFlow can train and run deep neural networks for handwritten digit classification, image recognition, word embeddings, and various sequence models.

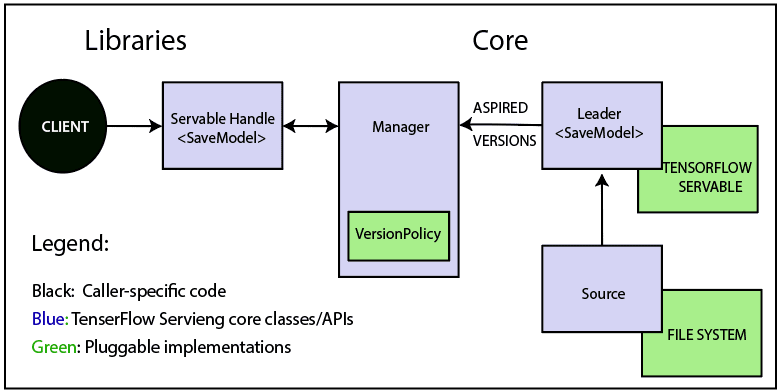

Architecture of TensorFlow

The TensorFlow runtime is a cross-platform library, and its system architecture is what makes it flexible at scale. This section assumes basic familiarity with TensorFlow programming concepts such as the computation graph, operations, and sessions.

A few terms need to be understood first. They come from TensorFlow Serving, the part of the TensorFlow ecosystem that deploys trained models: Servables, Servable Versions, Servable Streams, Models, Loaders, Sources, Managers, and Core. Each term and its role in the architecture is described below.

Understanding this architecture helps when you want to read and modify the core TensorFlow code.

1. TensorFlow Servable

Servables are the central abstraction in TensorFlow Serving. They are the objects that clients use to perform computation.

The size and granularity of a servable are flexible. A single servable may consist of anything from a lookup table to a single model to a tuple of inference models. Servables can be of any type and interface, which enables flexibility and future improvements such as:

- Streaming results

- Asynchronous modes of operation

- Experimental APIs

2. Servable Versions

TensorFlow Serving can handle one or more versions of a servable over the lifetime of a single server instance. This allows new algorithm configurations, weights, and other data to be loaded over time. Versions also allow more than one version of a servable to be loaded concurrently, supporting gradual roll-out and experimentation.

3. Servable Streams

A servable stream is the sequence of versions of a servable, sorted by increasing version number.
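Here is a minimal pure-Python sketch of a servable stream. It is not the TensorFlow Serving API, which is written in C++. The names `ServableStream`, `add`, and `latest` are hypothetical. They only model the idea that the versions of one servable are kept ordered by increasing version number.

```python
import bisect
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(order=True)
class ServableVersion:
    # Only the version number takes part in ordering comparisons.
    version: int
    payload: str = field(compare=False)


class ServableStream:
    """Hypothetical model of a servable stream: all versions of one servable."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._versions: List[ServableVersion] = []

    def add(self, version: int, payload: str) -> None:
        # insort keeps the list sorted by increasing version number.
        bisect.insort(self._versions, ServableVersion(version, payload))

    def version_numbers(self) -> List[int]:
        return [v.version for v in self._versions]

    def latest(self) -> Optional[ServableVersion]:
        return self._versions[-1] if self._versions else None


stream = ServableStream("my_model")
stream.add(3, "weights-v3")
stream.add(1, "weights-v1")
stream.add(2, "weights-v2")
print(stream.version_numbers())  # [1, 2, 3]
print(stream.latest().payload)   # weights-v3
```

Versions can arrive in any order, but the stream always exposes them sorted.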

4. TensorFlow Models

TensorFlow Serving represents a model as one or more servables. A machine-learned model includes one or more algorithms (including learned weights) and lookup or embedding tables. A servable can also serve a fraction of a model; for example, a large lookup table can be served as many servable instances.

5. TensorFlow Loaders

Loaders manage a servable’s life cycle. The loader API enables common infrastructure which is independent of the specific learning algorithm, data, or product use-cases involved.

6. Sources in TensorFlow Architecture

In simple terms, sources are modules that find and provide servables. Each source provides zero or more servable streams. For each servable stream, a source supplies one loader instance for each version it makes available to be loaded.

7. TensorFlow Managers

TensorFlow managers handle the full lifecycle of servables, including:

- Loading Servables

- Serving Servables

- Unloading Servables

Managers listen to sources and track all versions. A manager tries to fulfill the sources' requests, but it can refuse to load an aspired version (for example, if the required resources are not available).

Managers may also postpone an "unload". For example, a manager can wait to unload an old version until a newer version finishes loading, based on a policy that guarantees at least one version is loaded at all times.

Managers expose a simple, narrow interface, GetServableHandle(), that clients use to access the loaded servable instances.


8. TensorFlow Core

The core manages the following aspects of servables:

- Lifecycle

- Metrics

TensorFlow Serving Core treats servables and loaders as opaque objects.

9. Life of a Servable

The life of a servable in the TensorFlow Serving architecture proceeds as follows:

- Sources create loaders for servable versions; the loaders are then sent as aspired versions to the manager, which loads them and serves them to client requests.

- The loader contains whatever metadata it needs to load the servable.

- The source uses a callback to notify the manager of the aspired version.

- The manager applies the configured version policy to determine the next action to take. For example, a dynamic manager applies the version policy and decides to load the newer version.

- If the manager determines that it is safe, it gives the loader the required resources; for example, the dynamic manager tells the loader that there is enough memory, and the loader loads the new version.

- Clients ask the manager for the servable, either specifying a version explicitly or requesting the latest version, and the manager returns a handle for the servable. For example, a client requests a handle for the latest version of the model, and the dynamic manager returns a handle to the new version of the servable.
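The steps above can be traced in a short pure-Python sketch. Everything in it is hypothetical: `Loader`, `Manager`, `set_aspired_versions`, `get_servable_handle`, `poll_source`, and the `/models/my_model/<version>` paths only mirror the roles described in this section. The real TensorFlow Serving classes are C++ and have richer interfaces. The manager uses an availability-preserving policy: it loads the newest aspired version before unloading the old one, so some version is always being served.

```python
from typing import Callable, Dict, List, Optional


class Loader:
    """Holds the metadata needed to load one servable version (hypothetical)."""

    def __init__(self, version: int, path: str) -> None:
        self.version = version
        self.path = path

    def load(self) -> str:
        # Stand-in for reading model weights from self.path.
        return f"model@{self.path}"


class Manager:
    """Loads aspired versions and hands out handles to clients (hypothetical)."""

    def __init__(self) -> None:
        self.loaded: Dict[int, str] = {}
        self.events: List[str] = []

    def set_aspired_versions(self, loaders: List[Loader]) -> None:
        # Version policy for this sketch: serve only the newest aspired version.
        newest = max(loaders, key=lambda loader: loader.version)
        if newest.version not in self.loaded:
            self.loaded[newest.version] = newest.load()
            self.events.append(f"load {newest.version}")
        # Availability preserving: older versions are unloaded only after
        # the new one has loaded, so some version is always being served.
        for version in sorted(v for v in self.loaded if v != newest.version):
            del self.loaded[version]
            self.events.append(f"unload {version}")

    def get_servable_handle(self, version: Optional[int] = None) -> str:
        if version is None:
            version = max(self.loaded)  # latest loaded version
        return self.loaded[version]


def poll_source(versions_on_disk: List[int], callback: Callable[[List[Loader]], None]) -> None:
    """A source finds versions and notifies the manager through a callback."""
    loaders = [Loader(v, f"/models/my_model/{v}") for v in versions_on_disk]
    callback(loaders)


manager = Manager()
poll_source([1], manager.set_aspired_versions)
print(manager.get_servable_handle())  # model@/models/my_model/1
poll_source([1, 2], manager.set_aspired_versions)
print(manager.get_servable_handle())  # model@/models/my_model/2
print(manager.events)                 # ['load 1', 'load 2', 'unload 1']
```

The event log shows the ordering that the availability-preserving policy guarantees: "load 2" happens before "unload 1".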

10. TensorFlow Loaders

Loaders are the extension point for adding algorithm and data backends, and TensorFlow is one such algorithm backend. For example, we would implement a new loader to load, provide access to, and unload an instance of a new type of servable machine learning model.

11. Batcher in TensorFlow Architecture

Batching multiple requests into a single request can significantly reduce the cost of performing inference, especially in the presence of hardware accelerators such as GPUs. TensorFlow Serving includes a request-batching component that lets clients batch their type-specific inferences across requests into batch requests, which algorithm systems can process more efficiently.
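Here is a minimal pure-Python sketch of the batching idea. It assumes a hypothetical `model` function that accepts a list of inputs. Requests are grouped into batches of at most `max_batch_size`, and the model is called once per batch instead of once per request. TensorFlow Serving's real batcher also uses timeouts and queues, which this sketch leaves out.

```python
from typing import Callable, List, Sequence


def run_batched(
    requests: Sequence[float],
    model: Callable[[List[float]], List[float]],
    max_batch_size: int,
) -> List[float]:
    """Group requests into batches and call the model once per batch (hypothetical helper)."""
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    results: List[float] = []
    for start in range(0, len(requests), max_batch_size):
        batch = list(requests[start:start + max_batch_size])
        results.extend(model(batch))  # one model call serves the whole batch
    return results


calls: List[int] = []


def double_model(batch: List[float]) -> List[float]:
    """Stand-in model that records each batch size and doubles every input."""
    calls.append(len(batch))
    return [2 * x for x in batch]


outputs = run_batched([1.0, 2.0, 3.0, 4.0, 5.0], double_model, max_batch_size=2)
print(outputs)  # [2.0, 4.0, 6.0, 8.0, 10.0]
print(calls)    # [2, 2, 1]
```

Five requests cost three model calls instead of five. On an accelerator, each call has a fixed overhead, and that overhead is where batching saves time.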

TensorFlow Basics

TensorFlow is a machine learning framework developed by the Google Brain team. Its name derives from its core data structure, the tensor. In TensorFlow, all computations involve tensors. A tensor is a vector or matrix generalized to n dimensions, and it can represent all types of data. All values in a tensor share the same data type and have a known (or partially known) shape. The shape of the data is the dimensionality of the matrix or array.

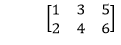

Representation of a Tensor

In TensorFlow, a tensor is a collection of feature vectors (that is, an array) of n dimensions. For instance, a 2×3 matrix with the values 1 to 6 is written:

$$\begin{pmatrix} 1 & 3 & 5 \\ 2 & 4 & 6 \end{pmatrix}$$

TensorFlow represents this matrix as:

```
[[1, 3, 5],
 [2, 4, 6]]
```

If we create a three-dimensional matrix with the values 1 to 8, TensorFlow represents it as:

```
[[[1, 2],
  [3, 4]],
 [[5, 6],
  [7, 8]]]
```

Note: A tensor can also be a scalar (zero dimensions) or have more than three dimensions; higher dimensions are simply harder to visualize.
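The link between nesting depth and shape can be checked with a small pure-Python helper. `infer_shape` is a hypothetical function written for this tutorial and is not part of TensorFlow. It returns the shape of a rectangular nested list, and the length of that shape is the tensor's rank.

```python
from typing import Any, Tuple


def infer_shape(value: Any) -> Tuple[int, ...]:
    """Return the shape of a rectangular nested list, the way a tensor shape is read."""
    if not isinstance(value, list):
        return ()  # a scalar has rank 0 and an empty shape
    if not value:
        return (0,)
    inner = infer_shape(value[0])
    for item in value[1:]:
        if infer_shape(item) != inner:
            raise ValueError("ragged nested list: not a valid dense tensor")
    return (len(value),) + inner


print(infer_shape(7))                                      # ()
print(infer_shape([[1, 3, 5], [2, 4, 6]]))                 # (2, 3)
print(infer_shape([[[1, 2], [3, 4]], [[5, 6], [7, 8]]]))   # (2, 2, 2)
```

Each extra level of brackets adds one dimension. Rows of different lengths are rejected because a dense tensor must be rectangular.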

Types of Tensor

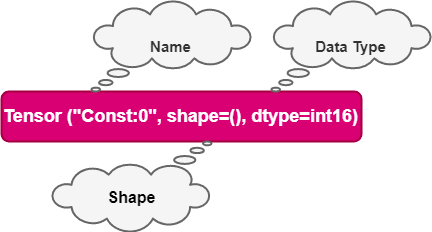

All computations in TensorFlow pass through one or more tensors. A tensor is an object with three properties:

- A unique label (name)

- A dimension (shape)

- A data type (dtype)

Every operation we do with TensorFlow involves the manipulation of a tensor. There are four main types of tensor we can create:

- tf.Variable

- tf.constant

- tf.placeholder

- tf.SparseTensor

In this tutorial, we will learn how to create a tf.constant and a tf.Variable.

Make sure to activate the conda environment in which TensorFlow is installed. Here, the environment is named hello-tf.

For Windows users:

- activate hello-tf

For macOS users:

- source activate hello-tf

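After that, we are ready to import TensorFlow. The examples in this tutorial use the TensorFlow 1.x API (tf.Session, tf.placeholder, tf.get_variable); in TensorFlow 2.x, these calls are available under tf.compat.v1 after calling tf.compat.v1.disable_eager_execution().

```python
# Import tf
import tensorflow as tf
```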

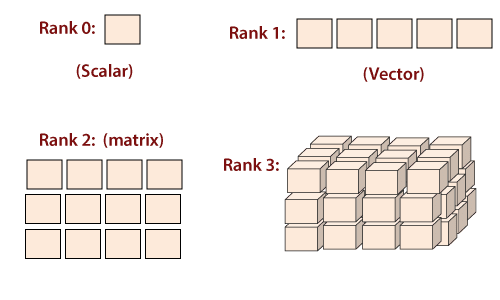

Create a tensor of n-dimension

We begin with the creation of a tensor with zero dimensions, namely a scalar.

To create a tensor, we can use tf.constant():

```python
tf.constant(value, dtype=None, name="Const")
```

The arguments are:

- `value`: The value of n dimensions used to define the tensor. Required.

- `dtype`: The type of data. Optional. For example:
  - `tf.string`: String variable
  - `tf.float32`: Float variable
  - `tf.int16`: Integer variable

- `name`: The name of the tensor. Optional. By default, it is "Const", so the tensors are named `Const:0`, `Const_1:0`, and so on.

To create a tensor of dimension 0, run the code below:

```python
## Rank 0
## Default name
r1 = tf.constant(1, tf.int16)
print(r1)
```

Output:

```
Tensor("Const:0", shape=(), dtype=int16)
```

```python
# Named my_scalar
r2 = tf.constant(1, tf.int16, name="my_scalar")
print(r2)
```

Output:

```
Tensor("my_scalar:0", shape=(), dtype=int16)
```

Each tensor is displayed by its name. Each tensor object is defined with a unique label (name), a dimension (shape), and a data type (dtype).

We can define a tensor with decimal values or with a string to change the type of the data.

```python
# Decimal data
q1_decimal = tf.constant(1.12345, tf.float32)
print(q1_decimal)
# String data
q1_string = tf.constant("JavaTpoint", tf.string)
print(q1_string)
```

Output:

```
Tensor("Const_1:0", shape=(), dtype=float32)
Tensor("Const_2:0", shape=(), dtype=string)
```

A tensor of 1 dimension can be created as follows:

```python
## Rank 1
q1_vector = tf.constant([1, 3, 6], tf.int16)
print(q1_vector)
q2_boolean = tf.constant([True, True, False], tf.bool)
print(q2_boolean)
```

Output:

```
Tensor("Const_3:0", shape=(3,), dtype=int16)
Tensor("Const_4:0", shape=(3,), dtype=bool)
```

Notice that the shape has only one dimension.

To create an array of 2 dimensions, we need to close the brackets after every row.

Example:

```python
## Rank 2
q2_matrix = tf.constant([[1, 2],
                         [3, 4]], tf.int16)
print(q2_matrix)
```

Output:

```
Tensor("Const_5:0", shape=(2, 2), dtype=int16)
```

The matrix has 2 rows and 2 columns, filled with the values 1, 2, 3, and 4.

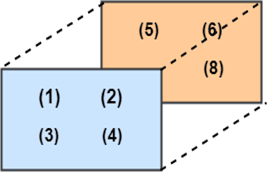

A tensor with 3 dimensions is constructed by adding another level of brackets.

```python
# Rank 3
q3_matrix = tf.constant([[[1, 2], [3, 4], [5, 6]]], tf.int16)
print(q3_matrix)
```

Output:

```
Tensor("Const_6:0", shape=(1, 3, 2), dtype=int16)
```

The matrix looks like the picture below.

Shape of tensor

When we print a tensor, TensorFlow infers its shape. We can also read it directly through the shape property.

Below, we construct a matrix filled with the numbers 10 to 15 and check the shape of m_shape:

```python
# Shape of a tensor
m_shape = tf.constant([[11, 10], [13, 12], [15, 14]])
print(m_shape.shape)
```

Output:

```
TensorShape([Dimension(3), Dimension(2)])
```

The matrix has 3 rows and 2 columns.

TensorFlow has some useful commands to create a vector or a matrix filled with 0 or 1. For instance, to create a 1-D tensor with a shape of 10, filled with 0, we run the code below:

```python
# Create a vector of 0
print(tf.zeros(10))
```

Output:

```
Tensor("zeros:0", shape=(10,), dtype=float32)
```

The same works for matrices. Here, we create a 10×10 matrix filled with 1.

```python
# Create a matrix of 1
print(tf.ones([10, 10]))
```

Output:

```
Tensor("ones:0", shape=(10, 10), dtype=float32)
```

We can also use the shape of a given matrix to build a vector of ones. The matrix m_shape has 3×2 dimensions, so we can create a vector of 3 ones (one per row) with the following code:

```python
# Create a vector with the same number of rows as m_shape
print(tf.ones(m_shape.shape[0]))
```

Output:

```
Tensor("ones_1:0", shape=(3,), dtype=float32)
```

If we pass the index 1 in the brackets, we get a vector of ones whose length equals the number of columns of m_shape.

```python
# Create a vector of ones with the same number of columns as m_shape
print(tf.ones(m_shape.shape[1]))
```

Output:

```
Tensor("ones_2:0", shape=(2,), dtype=float32)
```

Finally, we create a 3×2 matrix of ones.

```python
print(tf.ones(m_shape.shape))
```

Output:

```
Tensor("ones_3:0", shape=(3, 2), dtype=float32)
```
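What tf.zeros and tf.ones produce for a given shape can be imitated in plain Python. `filled` is a hypothetical helper, not a TensorFlow function. It builds a nested list with one nesting level per dimension. The `m_shape` list below copies the values of the tensor above, so its shape (3, 2) gives 3 rows of 2 ones.

```python
from typing import Any, Sequence


def filled(shape: Sequence[int], value: Any) -> Any:
    """Build a nested list of the given shape where every element equals value."""
    if len(shape) == 0:
        return value  # rank 0: just the scalar
    return [filled(shape[1:], value) for _ in range(shape[0])]


m_shape = [[11, 10], [13, 12], [15, 14]]  # same values as the tensor m_shape
rows, cols = len(m_shape), len(m_shape[0])

print(filled((10,), 0.0))         # ten zeros, like tf.zeros(10)
print(filled((rows,), 1.0))       # [1.0, 1.0, 1.0], like tf.ones(m_shape.shape[0])
print(filled((cols,), 1.0))       # [1.0, 1.0], like tf.ones(m_shape.shape[1])
print(filled((rows, cols), 1.0))  # 3x2 matrix of ones, like tf.ones(m_shape.shape)
```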

Types of data

Another property of a tensor is its data type. A tensor can have only one data type at a time. We can return the type with the dtype property.

```python
print(m_shape.dtype)
```

Output:

```
<dtype: 'int32'>
```

Sometimes we want to change the data type. In TensorFlow, this is possible with the tf.cast method.

Example

Below, a float tensor is converted into an integer tensor with tf.cast.

```python
# Change type of data
type_float = tf.constant(3.123456788, tf.float32)
type_int = tf.cast(type_float, dtype=tf.int32)
print(type_int.dtype)
print(type_float.dtype)
```

Output:

```
<dtype: 'int32'>
<dtype: 'float32'>
```

When the data type is not specified during the creation of the tensor, TensorFlow chooses it by inferring the most likely type. For instance, if we pass text, it infers a string (tf.string); Python integers become tf.int32, and Python floats become tf.float32.

Creating Operator

Some Useful TensorFlow operators

We know how to create a tensor with TensorFlow. It is time to perform mathematical operations.

TensorFlow contains all the necessary operations. We can begin with a simple one: we will use a TensorFlow method to compute the square root of a number. This operation is straightforward because only one argument is required.

The square root of a number is computed with the function tf.sqrt(x), where x is a floating-point number.

```python
x = tf.constant([2.0], dtype=tf.float32)
print(tf.sqrt(x))
```

Output:

```
Tensor("Sqrt:0", shape=(1,), dtype=float32)
```

Note: The output is a tensor object, not the square root of 2. In this example, we print the definition of the tensor, not the actual evaluation of the operation. In the next section, we will learn how TensorFlow executes operations.

Below is a list of commonly used operations. The idea is the same for all of them: each operation requires one or more arguments.

- tf.exp(a)

- tf.sqrt(a)

- tf.add(a,b)

- tf.subtract(a,b)

- tf.multiply(a,b)

- tf.div(a,b)

- tf.pow(a,b)

Example

```python
# Add
tensor_a = tf.constant([[1, 2]], dtype=tf.int32)
tensor_b = tf.constant([[3, 4]], dtype=tf.int32)
tensor_add = tf.add(tensor_a, tensor_b)
print(tensor_add)
```

Output:

```
Tensor("Add:0", shape=(1, 2), dtype=int32)
```

Explanation of code

Create two tensors:

- One tensor with 1 and 2

- A second tensor with 3 and 4

We add both tensors.

Notice that both tensors have the same shape. (TensorFlow can also broadcast tensors of compatible shapes, but matching shapes is the simplest case.) We can also multiply two tensors element-wise.

```python
# Multiply
tensor_multiply = tf.multiply(tensor_a, tensor_b)
print(tensor_multiply)
```

Output:

```
Tensor("Mul:0", shape=(1, 2), dtype=int32)
```

Variable

So far, we have only created constant tensors. In practice, values such as model weights change over time, so we use the Variable class. A variable represents a node whose value can change.

To create a variable, we use the tf.get_variable() method:

```python
tf.get_variable(name, shape=None, dtype=None, initializer=None)
```

The arguments are:

- `name`: Name of the variable

- `shape`: Dimension of the tensor

- `dtype`: Type of data. Optional

- `initializer`: How to initialize the tensor. Optional

If the initializer is a tensor, there is no need to include `shape`, as the shape of the initializer is used.

For instance, the code below creates a two-dimensional variable with two random values. By default, TensorFlow initializes the values randomly (with a Glorot uniform initializer). We name the variable "var".

```python
# Create a Variable
## Create 2 randomized values
var = tf.get_variable("var", [1, 2])
print(var.shape)
```

Output:

```
(1, 2)
```

In the second example, we create a variable with one row and two columns, again using [1, 2] as its shape.

This time, the initial values of the tensor are zero. When we train a model, we need initial values for the weights of the features; here we set them to zero.

```python
var_init_1 = tf.get_variable("var_init_1", [1, 2], dtype=tf.int32, initializer=tf.zeros_initializer())
print(var_init_1.shape)
```

Output:

```
(1, 2)
```

We can pass the value of a constant tensor in the variable. We create a constant tensor with the method tf.constant(). We use this tensor to initialize the variable.

The initial values of the variable are 10, 20, 30, and 40, and the new tensor has a shape of 2×2.

```python
# Create a 2x2 matrix
tensor_const = tf.constant([[10, 20], [30, 40]])
# Initialize the first value of the variable with tensor_const
var_init_2 = tf.get_variable("var_init_2", dtype=tf.int32, initializer=tensor_const)
print(var_init_2.shape)
```

Output:

```
(2, 2)
```

Placeholder

A placeholder is used to feed data into a tensor when the graph runs. To supply a placeholder with values, we pass them through the feed_dict argument. A placeholder can be fed only within a session.

In the next example, we create a placeholder with the tf.placeholder method. In the Session section, we will learn to feed a placeholder with an actual value.

The syntax is:

```python
tf.placeholder(dtype, shape=None, name=None)
```

The arguments are:

- `dtype`: Type of data

- `shape`: The dimension of the placeholder. Optional. If it is not specified, a tensor of any shape can be fed.

- `name`: Name of the placeholder. Optional

```python
data_placeholder_a = tf.placeholder(tf.float32, name="data_placeholder_a")
print(data_placeholder_a)
```

Output:

```
Tensor("data_placeholder_a:0", dtype=float32)
```

Components of TensorFlow

TensorFlow works with 3 main components:

- Graph

- Tensor

- Session

| Component | Description |
|---|---|
| Graph | The graph is essential in TensorFlow. All the mathematical operations (ops) are performed inside the graph. We can imagine a graph as a project plan in which every operation is laid out. The nodes represent these ops, and they can consume or create tensors. |
| Tensor | A tensor represents the data that flows between operations. We saw previously how to initialize a tensor. The difference between a constant and a variable is that the value of a variable can change. |
| Session | A session executes the operations of the graph. To fill the graph with the values of the tensors, we need to open a session. Inside a session, we must run an operator to create an output. |

Session

Graphs and sessions are independent. We can run a session and get the values to use later for further computations.

In the example below, we will:

- Create two tensors

- Create an operation

- Open a session

- Print the result

Step 1) We create two tensors, x and y:

```python
## Create, run, and evaluate a session
x = tf.constant([2])
y = tf.constant([4])
```

Step 2) We create the operator by multiplying x and y:

```python
## Create operator
multiply = tf.multiply(x, y)
```

Step 3) We open a session. All the computations happen within the session. When we are done, we need to close the session.

```python
## Create a session to run the given code
sess = tf.Session()
result_1 = sess.run(multiply)
print(result_1)
sess.close()
```

Output:

```
[8]
```

Explanation of Code

- tf.Session(): Open a session. All the operations will flow within the session

- run(multiply): Execute the operation created in step 2

- print(result_1): Finally, we print the result

- close(): Close the session

The result, 8, is the product of x (2) and y (4).

Another way to create a session is inside a with block. The advantage is that the session is closed automatically at the end of the block.

```python
with tf.Session() as sess:
    result_2 = multiply.eval()
    print(result_2)
```

Output:

```
[8]
```

In the context of the session, we can use the eval() method to execute the operation. It is equivalent to calling sess.run(), and it makes the code more readable.
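A small pure-Python stand-in shows why the with block is safer. `FakeSession` is hypothetical and does not run a graph. It only implements Python's context-manager protocol (`__enter__`/`__exit__`), so `close()` is called when the block ends, even if an exception is raised inside it.

```python
class FakeSession:
    """Hypothetical stand-in for a session, used only to show how a with block closes it."""

    def __init__(self) -> None:
        self.closed = False

    def run(self, fetch):
        # In this sketch a "fetch" is simply a zero-argument callable.
        if self.closed:
            raise RuntimeError("Attempted to use a closed session.")
        return fetch()

    def close(self) -> None:
        self.closed = True

    def __enter__(self) -> "FakeSession":
        return self

    def __exit__(self, exc_type, exc_value, traceback) -> bool:
        self.close()  # runs on normal exit and when an exception leaves the block
        return False  # False means exceptions are not swallowed


with FakeSession() as sess:
    result = sess.run(lambda: 2 * 4)
print(result, sess.closed)  # 8 True

failing = FakeSession()
try:
    with failing:
        raise ValueError("error inside the block")
except ValueError:
    pass
print(failing.closed)  # True
```

With the manual `sess.close()` pattern, an exception raised before `close()` would leave the session open. The with block removes that risk.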

We can create a session and see the values inside the tensors we created so far.

```python
## Check the tensors created before
sess = tf.Session()
print(sess.run(r1))
print(sess.run(q2_matrix))
print(sess.run(q3_matrix))
```

Output:

```
1
[[1 2]
 [3 4]]
[[[1 2]
  [3 4]
  [5 6]]]
```

Variables are not initialized by default, even after we create them, so we need to initialize a variable before we can use it. Calling tf.global_variables_initializer() returns an operation that, when run, initializes all the variables. This is needed before we train a model.

We can check the values of the variables we created before. Note that we need to use run to evaluate the tensor.

```python
sess.run(tf.global_variables_initializer())
print(sess.run(var))
print(sess.run(var_init_1))
print(sess.run(var_init_2))
```

Output:

```
[[-0.05356491 0.75867283]]
[[0 0]]
[[10 20]
 [30 40]]
```

We can use the placeholder we created and feed it with an actual value. We pass the data through the feed_dict argument.

For example, we will raise the placeholder data_placeholder_a to the power of 3.

```python
import numpy as np

power_a = tf.pow(data_placeholder_a, 3)
with tf.Session() as sess:
    data = np.random.rand(1, 10)
    print(sess.run(power_a, feed_dict={data_placeholder_a: data}))
```

Explanation of Code

- import numpy as np: Import the numpy library to create data

- tf.pow(data_placeholder_a, 3): Create the op

- np.random.rand(1, 10): Create a random array of data

- feed_dict={data_placeholder_a: data}: Feed the data into the placeholder

Output:

```
[[0.05478135 0.27213147 0.8803037 0.0398424 0.21172127 0.01445725 0.02584014 0.3763949 0.66122706 0.7565559]]
```
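The split between defining an operation and running it, and the role of feed_dict, can be imitated in a few lines of pure Python. `Placeholder`, `Pow`, and `run` are hypothetical names for this sketch, not TensorFlow classes. Printing a node shows only its definition. Only `run` computes a value, and it looks up the placeholder's data in `feed_dict`.

```python
from typing import Dict, List, Optional


class Placeholder:
    """Hypothetical graph node whose value must be supplied at run time."""

    def __init__(self, name: str) -> None:
        self.name = name

    def __repr__(self) -> str:
        return f"Placeholder({self.name!r})"

    def evaluate(self, feed_dict: Dict["Placeholder", List[float]]) -> List[float]:
        if self not in feed_dict:
            raise KeyError(f"You must feed a value for placeholder {self.name!r}")
        return feed_dict[self]


class Pow:
    """Hypothetical node that raises every input value to a fixed exponent."""

    def __init__(self, base: Placeholder, exponent: int) -> None:
        self.base = base
        self.exponent = exponent

    def __repr__(self) -> str:
        return f"Pow({self.base!r}, {self.exponent})"

    def evaluate(self, feed_dict: Dict[Placeholder, List[float]]) -> List[float]:
        # Nothing is computed until evaluate() is called with concrete data.
        return [value ** self.exponent for value in self.base.evaluate(feed_dict)]


def run(node: Pow, feed_dict: Optional[Dict[Placeholder, List[float]]] = None) -> List[float]:
    """Evaluate a node, taking placeholder values from feed_dict."""
    return node.evaluate(feed_dict or {})


data_placeholder_a = Placeholder("data_placeholder_a")
power_a = Pow(data_placeholder_a, 3)
print(power_a)  # Pow(Placeholder('data_placeholder_a'), 3): only a definition
print(run(power_a, feed_dict={data_placeholder_a: [1.0, 2.0, 0.5]}))  # [1.0, 8.0, 0.125]
```

Calling `run(power_a)` without a feed raises an error. This mirrors how an unfed placeholder cannot be evaluated.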

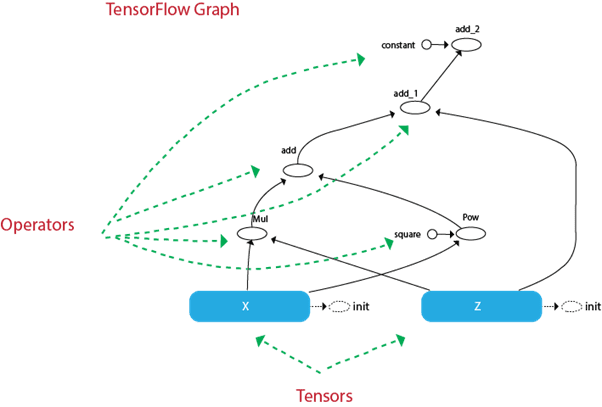

Graph

A graph is made of nodes and edges. A node represents an operation, i.e., a unit of computation. An edge is a tensor: an operation can produce a new tensor or consume one as input. The edges follow the dependencies between individual operations.

TensorFlow relies on a clever approach to represent operations: all computations are represented with a dataflow graph. The dataflow graph captures the data dependencies between individual operations. Mathematical formulas or algorithms are made of a number of successive operations, and a graph is a convenient way to visualize how these computations are coordinated.

The structure of the graph connects the operations (i.e., the nodes) and shows how those operations are fed. Note that the graph does not display the output of the operations; it only helps to visualize the connections between individual operations.

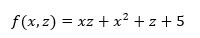

Example:

Imagine we want to evaluate the following function:

$$f(x, z) = xz + x^2 + z + 5$$

TensorFlow creates a graph to execute the function. The graph looks like this:

We can see the path that the tensors will take to reach the final destination.

For instance, we can see that the addition cannot be done before $xz$ and $x^2$ are computed. The graph explains that TensorFlow will:

- 1) Compute $xz$ and $x^2$

- 2) Add the results of 1) together

- 3) Add $z$ to the result of 2)

- 4) Add the constant 5 to the result of 3)

```python
x = tf.get_variable("x", dtype=tf.int32, initializer=tf.constant([5]))
z = tf.get_variable("z", dtype=tf.int32, initializer=tf.constant([6]))
c = tf.constant([5], name="constant")
square = tf.constant([2], name="square")
f = tf.multiply(x, z) + tf.pow(x, square) + z + c
```

Explanation of Code

- x: Initialize a variable named x with the constant value 5

- z: Initialize a variable named z with the constant value 6

- c: Initialize a constant tensor called c with the constant value 5

- square: Initialize a constant tensor called square with the constant value 2

- f: Construct the operator

In this example, we keep the values of the variables fixed. We also create a constant tensor called c, which is the constant parameter of the function f. It takes the fixed value 5. In the graph, we can see this parameter in the tensor called constant.

We can also construct a constant tensor for the exponent in the operator tf.pow(). It is not necessary; we did it so that we can see the name of the tensor in the graph. It is the circle called square.

From the graph, we can understand what happens to the tensors and how the function returns an output of 66.
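The evaluation order that the graph encodes can be reproduced with a topological sort. This pure-Python sketch assumes the graph is given as a dictionary from node name to (operation, input names). The node names mirror the example (x, z, constant, square), but the dictionary layout and the `topological_order` and `evaluate_graph` helpers are hypothetical. TensorFlow does not store graphs this way. With x = 5 and z = 6, the sketch computes f = 5·6 + 5² + 6 + 5 = 66.

```python
import operator
from typing import Callable, Dict, List, Tuple

# node name -> (operation, names of input nodes); leaf nodes take no inputs
Graph = Dict[str, Tuple[Callable[..., int], List[str]]]

graph: Graph = {
    "x": (lambda: 5, []),
    "z": (lambda: 6, []),
    "constant": (lambda: 5, []),
    "square": (lambda: 2, []),
    "mul": (operator.mul, ["x", "z"]),
    "pow": (operator.pow, ["x", "square"]),
    "add_1": (operator.add, ["mul", "pow"]),
    "add_2": (operator.add, ["add_1", "z"]),
    "f": (operator.add, ["add_2", "constant"]),
}


def topological_order(graph: Graph, target: str) -> List[str]:
    """Depth-first search (assumes no cycles): each node comes after all its inputs."""
    order: List[str] = []
    visited = set()

    def visit(name: str) -> None:
        if name in visited:
            return
        visited.add(name)
        for dep in graph[name][1]:
            visit(dep)
        order.append(name)

    visit(target)
    return order


def evaluate_graph(graph: Graph, target: str) -> int:
    values: Dict[str, int] = {}
    for name in topological_order(graph, target):
        op, inputs = graph[name]
        values[name] = op(*(values[i] for i in inputs))
    return values[target]


print(topological_order(graph, "f"))
# ['x', 'z', 'mul', 'square', 'pow', 'add_1', 'add_2', 'constant', 'f']
print(evaluate_graph(graph, "f"))  # 66
```

The order matches the steps listed above: `mul` and `pow` run before `add_1`, and `z` and `constant` are added last.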

The code below evaluates the function in the session.

```python
init = tf.global_variables_initializer()
with tf.Session() as sess:
    init.run()  # Initialization of x and z
    function_result = f.eval()
    print(function_result)
```

Output:

```
[66]
```

Steps of Creating TensorFlow pipeline

In the previous examples, we set the values manually. Now we will see how to load data into TensorFlow.

Step 1: Create the data

First, let's use the numpy library to generate two random values.

```python
import numpy as np
x_input = np.random.sample((1, 2))
print(x_input)
```

Output:

```
[[0.8835775 0.23766977]]
```

Step 2: Create the placeholder

We create a placeholder named X. We have to specify the shape of the tensor explicitly: since we load an array with only two values, we write the shape [1, 2].

```python
# Using a placeholder
x = tf.placeholder(tf.float32, shape=[1, 2], name='X')
```

Step 3: Define the dataset.

Next, we define the dataset from which we populate the value of the placeholder x. We need to use the method tf.data.Dataset.from_tensor_slices:

```python
dataset = tf.data.Dataset.from_tensor_slices(x)
```

Step 4: Create a pipeline

In step 4, we create the pipeline through which the data flows. We create an iterator with make_initializable_iterator and name it iterator. Then we call the iterator's get_next method to fetch the next batch of data, and name this step get_next. Note that in our example, there is only one batch of data, with two values.

```python
iterator = dataset.make_initializable_iterator()
get_next = iterator.get_next()
```

Step 5: Execute the operation

The last step is similar to the previous example. We initialize a session and run the iterator's initializer operation, passing the values generated with numpy through feed_dict; these two values fill the placeholder x. Then we run get_next to print the result.

```python
with tf.Session() as sess:
    # Feed the placeholder with data
    sess.run(iterator.initializer, feed_dict={x: x_input})
    print(sess.run(get_next))
```

Output:

```
[0.8835775 0.23766978]
```
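Dataset.from_tensor_slices splits its input along the first dimension. That is why the (1, 2) array above yields a single element holding two values. Below is a rough pure-Python model of that slicing plus a get_next-style call. The names `from_tensor_slices_like` and `get_next` are hypothetical, and this is not how tf.data is implemented.

```python
from typing import Iterator, List, Sequence


def from_tensor_slices_like(data: Sequence[List[float]]) -> Iterator[List[float]]:
    """Hypothetical helper: yield one element per entry along the first dimension."""
    for row in data:
        yield row


def get_next(it: Iterator[List[float]]) -> List[float]:
    # Like evaluating get_next: once the data is exhausted this raises StopIteration,
    # where the TensorFlow 1.x op raises tf.errors.OutOfRangeError instead.
    return next(it)


x_input = [[0.8835775, 0.23766977]]  # shape (1, 2), the values printed in Step 1
iterator = from_tensor_slices_like(x_input)
print(get_next(iterator))  # [0.8835775, 0.23766977]: one element of shape (2,)
```

With an input of shape (N, 2), the same code would yield N elements, one per row.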

In summary, TensorFlow works around:

- Graph: A computational environment containing the operations and tensors

- Tensors: Represent the data that flows in the graph; they are the edges of the graph

- Sessions: Allow the execution of the operations

Create a constant tensor

| Dimension | Object |
|---|---|
| D0 | tf.constant(1, tf.int16) |
| D1 | tf.constant([1, 3, 5], tf.int16) |
| D2 | tf.constant([[1, 2], [5, 6]], tf.int16) |
| D3 | tf.constant([[[1, 2], [3, 4], [5, 6]]], tf.int16) |

Create an operator

| Create an operator | Object |
|---|---|
| a+b | tf.add(a, b) |
| a*b | tf.multiply(a, b) |

Create a variable tensor

| Create a variable | Object |
|---|---|
| Randomized value | tf.get_variable("var", [1, 2]) |
| Initialized first value | tf.get_variable("var_init_2", dtype=tf.int32, initializer=tf.constant([[1, 2], [3, 4]])) |

Open a session

| Session | Object |
|---|---|
| Create a session | tf.Session() |
| Run a session | sess.run() |
| Evaluate a tensor | variable_name.eval() |
| Close a session | sess.close() |
| Session in a block | with tf.Session() as sess: |

Features of TensorFlow

Let us now consider the following important features of TensorFlow:

- It lets you define, optimize, and calculate mathematical expressions easily with the help of multi-dimensional arrays called tensors.

- It includes programming support for deep neural networks and machine learning techniques.

- It is highly scalable for computation on various data sets.

- TensorFlow uses GPU computing and automates its management. It also optimizes the memory and data it uses.

TensorFlow Limitations

- TensorFlow has GPU memory conflicts with Theano if imported in the same scope.

- No support for OpenCL

- Requires prior knowledge of advanced calculus and linear algebra along with a pretty good understanding of machine learning.

Advantages of TensorFlow

Following are the advantages of TensorFlow:

- TensorFlow has a responsive construct: you can easily visualize each and every part of the graph.

- It has platform flexibility, meaning it is modular: some parts can be used standalone while others are combined.

- Models can easily be trained on CPUs as well as GPUs, including for distributed computing.

- TensorFlow has automatic differentiation capabilities, which benefit gradient-based machine learning algorithms: you can compute derivatives of values with respect to other values, which results in an extension of the graph.

- It also has advanced support for threads, asynchronous computation, and queues.

- It is customizable and open source.

Conclusion

As you can see from this TensorFlow tutorial, TensorFlow is a powerful framework that makes working with mathematical expressions and multi-dimensional arrays a breeze, something fundamentally necessary in machine learning. It also abstracts away the complexities of executing the data graphs and scaling.

Over time, TensorFlow has grown in popularity and is now being used by developers for solving problems using deep learning methods for image recognition, video detection, text processing like sentiment analysis, etc. Like any other library, you may need some time to get used to the concepts that TensorFlow is built on. And, once you do, with the help of documentation and community support, representing problems as data graphs and solving them with TensorFlow can make machine learning at scale a less tedious process.