Deep Learning Tutorial

Last updated on 25th Sep 2020

What is Deep Learning?

Deep learning is a subset of artificial intelligence: a machine learning technique that teaches computers to learn from examples. It gets its name from the fact that its neural networks are built from many stacked layers, including one or more hidden layers between the input and the output. The deeper the network, the more complex the information it can extract.

Deep learning methods rely on layered models loosely inspired by the human brain. They teach machines to recognise patterns so that inputs can be classified into distinct categories. Pattern recognition is an essential part of deep learning, and because the models learn from data, computers do not need to be explicitly programmed with rules for every case. Through deep learning, machines can use images, text or audio files to identify content and perform tasks in a human-like manner.

Self-driving cars, personalised recommendations and voice assistants are all examples of how deep learning affects our lives daily. Appropriately trained computers can imitate human performance and, at times, deliver highly accurate results; the key is exposure to data. Deep learning focuses on iterative learning methods that expose machines to huge data sets. By doing so, it helps computers pick up identifying traits and adapt to change. Repeated exposure to data sets helps machines understand differences and logic and reach reliable conclusions. Deep learning has evolved in recent times to handle complex functions more reliably. It is no wonder that this domain is garnering a lot of attention and attracting young professionals.

Why is Deep Learning Important?

To say that deep learning is important is to say nothing new, given its growing popularity. It contributes heavily towards making our daily lives more convenient, and this trend will grow in the future. Whether it is parking assistance or face recognition at the airport, deep learning is fuelling a lot of automation in today’s world.

However, deep learning’s relevance is linked most closely to the fact that our world generates rapidly growing amounts of data, which need structuring on a large scale. Deep learning is well placed to make use of this growing volume and availability of data. The information collected from these data is used to achieve accurate results through iterative learning models.

The repeated analysis of massive datasets reduces errors and discrepancies in findings, which eventually leads to more reliable conclusions. Deep learning will continue to make an impact in both business and personal spaces and create a lot of job opportunities in the coming years.

How does Deep Learning work?

At its core, deep learning relies on iterative methods to teach machines to imitate human intelligence. An artificial neural network carries out this iterative method through several hierarchical levels. The initial levels help the machine learn simple information, and the information keeps building as the levels increase. With each new level, the machine picks up further information and combines it with what it learnt at the previous level. At the end of the process, the system produces a final piece of information that is a composite of everything learnt along the way. Because this information has passed through several levels of the hierarchy, it bears a resemblance to complex logical thinking.

Let’s break it down further with the help of an example:

Consider a voice assistant like Alexa or Siri and how it uses deep learning for natural conversation. In the initial levels of the neural network, when the voice assistant is fed data, it tries to identify voice inflections, intonations and more. At the higher levels, it picks up information on vocabulary and adds the findings of the previous levels to that. In the following levels, it analyses the prompts and combines all its conclusions. By the topmost level of the hierarchy, the voice assistant has learnt enough to analyse a dialogue and, based on that input, deliver a corresponding action.

How do Neurons work?

When the input nodes are provided with information, each node is assigned a numerical value. Nodes with higher values have a higher activation, and each node passes its activation on according to the connection strength (weight) and the transfer function.

Once a node receives activation values, it sums them, weighted by the strength of each connection, and modifies the total according to the transfer function. It then applies the activation function, which helps the neuron decide whether a signal needs to be passed on. The weights attached to the synapses (connections) define the artificial neural network and are crucial for teaching an ANN how to function: they decide the extent to which signals are passed, and they are adjusted frequently while the network is trained. After passing through the activations, the signal reaches the output nodes. This step acts as an interface between the user and the system: the output node presents the information in a form the user can understand. Cost functions compare the expected and actual output to evaluate the model’s performance. Depending on your requirement, you can choose from a range of cost functions; training then aims to reduce the value of the chosen cost (loss) function. A lower loss means the output is closer to the expected output.
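The steps described above (weighted sum, activation function, cost function) can be sketched for a single neuron in a few lines of plain Python. This is only an illustrative sketch: the sigmoid activation, the mean squared error cost, and all the numbers are assumptions chosen for the example, not values taken from any particular network.

```python
import math

def sigmoid(z):
    """Activation function: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(total)

def mean_squared_error(expected, actual):
    """Cost function: average squared gap between expected and actual outputs."""
    return sum((e - a) ** 2 for e, a in zip(expected, actual)) / len(expected)

# Hypothetical values, chosen only for illustration.
inputs = [1.0, 0.5]
weights = [0.8, -0.4]
bias = 0.1

output = neuron_output(inputs, weights, bias)
cost = mean_squared_error([1.0], [output])
print(f"output={output:.4f} cost={cost:.4f}")
```

Changing the weights changes how strongly each input influences the output, which is exactly what training adjusts in order to lower the cost.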

Backpropagation, or backward propagation, is a method of calculating the gradient of the error function with respect to the weights of the neural network. This backward calculation shows how each weight contributed to the error, so that the weights can be corrected and the network moved towards the desired goal.

Forward propagation, on the other hand, is the step that produces the output. The input layer receives the information and propagates it forward through the network, layer by layer. Once the expected results are compared with the output values, errors are calculated and the information is propagated backwards. After the weights have been adjusted to reach the optimal level, the network can be tested for the final outcome.
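To make the forward and backward passes concrete, here is a minimal sketch of a network with two inputs, two hidden nodes and one output node, written in plain Python. It makes several simplifying assumptions that are not from the text above: sigmoid activations everywhere, a squared-error loss, no bias terms, a single training example, and hypothetical starting weights.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w_hidden, w_out):
    """Forward propagation: input -> hidden layer -> single output node."""
    hidden = [sigmoid(sum(xi * wi for xi, wi in zip(x, row))) for row in w_hidden]
    output = sigmoid(sum(h * w for h, w in zip(hidden, w_out)))
    return hidden, output

def loss(output, target):
    return 0.5 * (output - target) ** 2

def backward(x, hidden, output, target, w_out):
    """Backpropagation: apply the chain rule from the output back to each weight."""
    delta_out = (output - target) * output * (1.0 - output)
    grad_out = [delta_out * h for h in hidden]
    grad_hidden = []
    for h, w in zip(hidden, w_out):
        delta_h = delta_out * w * h * (1.0 - h)
        grad_hidden.append([delta_h * xi for xi in x])
    return grad_hidden, grad_out

# Hypothetical input, target and starting weights, chosen for illustration.
x, target = [1.0, 0.0], 1.0
w_hidden = [[0.5, -0.3], [0.2, 0.8]]
w_out = [0.4, -0.6]
learning_rate = 0.5

_, first_output = forward(x, w_hidden, w_out)
initial_loss = loss(first_output, target)

for _ in range(200):
    hidden, output = forward(x, w_hidden, w_out)
    grad_hidden, grad_out = backward(x, hidden, output, target, w_out)
    # Move every weight a small step against its gradient.
    w_out = [w - learning_rate * g for w, g in zip(w_out, grad_out)]
    w_hidden = [[w - learning_rate * g for w, g in zip(row, grads)]
                for row, grads in zip(w_hidden, grad_hidden)]

_, final_output = forward(x, w_hidden, w_out)
final_loss = loss(final_output, target)
print(f"loss before={initial_loss:.4f} after={final_loss:.4f}")
```

Each pass through the loop is one round of forward propagation, error calculation and weight adjustment. Real networks repeat the same cycle over many examples and many more weights.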

What is the difference between Deep Learning and Machine Learning?

Though the terms are often used interchangeably, deep learning and machine learning are not the same thing, although both are part of artificial intelligence. Machine learning is the broader field, which uses data to define and create learning models. It tries to understand the structure of data with statistical models. It typically starts with data mining, where relevant information is extracted from data sets manually, after which algorithms direct computers to learn from the data and make predictions. Machine learning has been in use for a long time and has evolved over time. Deep learning is a comparatively new field which focuses only on neural networks to learn and function. Neural networks, as discussed earlier, artificially imitate human neurons to screen and gather information from data automatically. Since deep learning involves end-to-end learning where raw data is fed to the system, the more data it studies, the more precise and accurate the results tend to be.

This brings us to the other difference between deep learning and machine learning. While the former can scale up with larger volumes of data, traditional machine learning models are limited to shallow learning: their performance tends to plateau after a certain level, and adding more data makes little difference.

Following are the key differences between the two domains:

- Data Set Size: Deep learning doesn’t perform well with a smaller data set. Machine learning algorithms, though, can process a smaller data set (still big data, but not on the scale of a deep learning data set) without compromising performance. The accuracy of a model increases with more data, but a smaller data set may be the right choice for a particular function in traditional machine learning. Deep learning is enabled by neural networks constructed logically by asking a series of binary questions or by assigning weights or a numerical value to every bit of data that passes through the network. Given the complexity of these networks across their multiple layers, deep learning projects require data sets as large as a Google image library, an Amazon inventory or Twitter’s corpus of tweets.

- Feature Engineering: An essential part of all machine learning algorithms, feature engineering and its complexity mark the difference between ML and DL. In traditional machine learning, an expert defines the features to be applied in the model and then hand-codes the data type and functions. In deep learning, on the other hand, feature engineering is done at sub-levels, with features separated from low level to high level as they pass through the neural network. It eliminates the need for an expert to define the features required for processing by making the machine learn low-level features as simple as shape, size, textures and pixel values, and high-level features such as facial data points and a depth map.

- Hardware Dependencies: Sophisticated high-end hardware is required to carry the heavy load of matrix multiplication operations and computations that are the trademark of deep learning. Machine learning algorithms, on the other hand, can be carried out on low-end machines as well. Deep learning algorithms typically rely on GPUs so that these computations can be carried out efficiently.

- Execution Time: It is easy to assume that a deep learning algorithm will have a shorter execution time because it is more advanced than a machine learning algorithm. On the contrary, deep learning takes longer to train, not just because of the enormous data set but also because of the complexity of the neural network. A machine learning algorithm can take anything from seconds to hours to train, whereas a deep learning algorithm can take weeks. Once trained, however, a deep learning model can make predictions quickly, in some cases faster than machine learning algorithms such as k-nearest neighbours, whose prediction time grows with the size of the data set.
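The feature engineering difference in the list above can be illustrated with a small sketch. In the traditional approach, someone decides in advance which summary values to compute from an image; in the deep learning approach, the raw pixels are passed in and the network is left to discover useful features. The image, the two hand-crafted features and the function names below are all hypothetical and chosen only for illustration.

```python
# A tiny 4x4 grayscale "image" (0 = black, 255 = white); hypothetical data.
image = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]

def handcrafted_features(img):
    """Traditional ML: an expert decides which summary values matter."""
    pixels = [p for row in img for p in row]
    mean_brightness = sum(pixels) / len(pixels)
    # Average absolute difference between horizontally adjacent pixels,
    # a crude measure of how strong the vertical edges in the image are.
    diffs = [abs(row[i + 1] - row[i]) for row in img for i in range(len(row) - 1)]
    edge_strength = sum(diffs) / len(diffs)
    return [mean_brightness, edge_strength]

def raw_pixel_input(img):
    """Deep learning: scale the raw pixels and let the network find features."""
    return [p / 255.0 for row in img for p in row]

print(handcrafted_features(image))   # two numbers chosen by a person
print(len(raw_pixel_input(image)))   # one number per pixel
```

The hand-crafted version hands the model two numbers, while the raw version hands it sixteen. That gap widens quickly with real image sizes, which is one reason deep learning needs more data and more computing power.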

An example would make these differences easier to understand:

Consider an app which allows users to take a photo of a person and then helps them find apparel that is the same as or similar to the items featured in the photo. Machine learning will use data to identify the different clothing items featured in the photo, but you have to feed the machine that information. In this case, the item labelling will be done manually, and the machine will categorise data based on predetermined definitions.

In the case of deep learning, data labelling does not have to be done manually. Its neural network will automatically create its model and define the features of the dress. Now, based on that definition, it will scan through shopping sites and fetch you other similar clothing items.

Advantages

Deep learning does not require manual feature extraction; it can take images directly as input. It requires high-performance GPUs and lots of data for processing. Feature extraction and classification are both carried out by the deep learning algorithm itself; a convolutional neural network (CNN) is a common architecture that works this way.
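As a rough illustration of how a convolutional layer extracts features from an image, the sketch below slides a small kernel over a tiny image and records the response at each position. The image and kernel values are hypothetical; in a trained CNN the kernel values would be learned from data rather than picked by hand.

```python
def convolve2d(image, kernel):
    """Slide a square kernel over the image and sum the element-wise products
    (no padding, stride 1). Deep learning libraries call this a convolution;
    strictly it is a cross-correlation because the kernel is not flipped."""
    k = len(kernel)
    out_rows = len(image) - k + 1
    out_cols = len(image[0]) - k + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(k) for j in range(k))
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]

# Hypothetical 4x4 image: dark left half, bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked 2x2 kernel that responds to a dark-to-bright vertical edge.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, kernel)
print(feature_map)
```

The resulting feature map is non-zero only in the middle column, where the dark-to-bright edge sits. That is the sense in which the layer has extracted an edge feature from raw pixels.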

The performance of deep learning algorithms improves as the amount of data increases. Some advantages of deep learning are given below:

- 1. The architecture of deep learning is flexible and can be adapted to new problems in the future.

- 2. Robustness to natural variations in the data is learned automatically.

- 3. The neural network-based approach can be applied to many different applications and data types.

Disadvantages

There are several disadvantages of deep learning, which are given below:

- 1. Deep learning requires a very large amount of data to perform better than other techniques.

- 2. There is no standard theory to guide you in selecting the right deep learning tools. The technology requires knowledge of topology, training methods and other parameters. As a result, it is not easy for less experienced people to implement.

- 3. Complex deep learning models are extremely expensive to train.

- 4. It requires expensive GPUs and, at scale, hundreds of machines, which increases the cost to the user.

How to get started with Deep Learning?

Before getting started with Deep Learning, candidates must ensure that their mathematical and programming language skills are in place. Since Deep Learning is a subset of artificial intelligence, familiarity with the broader concepts of the domain is often a prerequisite. Following are the core skills of Deep Learning:

- Maths: If you are already freaking out at the sheer mention of maths, let me put your fears to rest. The mathematical requirements of deep learning are basic, the kind taught at the undergraduate level. Calculus, probability and linear algebra are a few examples of the topics that you need to be thorough with. For professionals who are keen on picking up deep learning skills but do not have a degree in maths, there are plenty of ebooks and maths tutorials available online which will help you learn the basics. These basic maths skills are required for understanding how the mathematical building blocks of a neural network work. Mathematical concepts like tensors and tensor operations, gradient descent and differentiation are crucial to neural networks. Refer to resources like Calculus Made Easy by Silvanus P. Thompson, Probability Cheatsheet v2.0, The best linear algebra books and An Introduction to MCMC for Machine Learning to understand the basic concepts of maths.

- Programming Knowledge: Another prerequisite for grasping deep learning is knowledge of a programming language. Any deep learning book will show that Python is widely used for deep learning, as it is a highly interactive, portable, dynamic and object-oriented programming language. It has extensive support libraries that limit the amount of code to be written for specific functions. It integrates easily with C, C++ or Java, and its control capabilities, along with excellent support for objects, modules and other reusability mechanisms, make it the number one choice for deep learning projects. Because it is open source and easy to understand and implement, aspiring professionals in the domain often start with Python. However, questions have been raised about its runtime errors and speed. While Python is used in several desktop and server applications, it is not used for many mobile computing applications. While Python is a popular choice for many, owing to its versatility, Java and Ruby are equally suitable for beginners. Resources like Learn to Program (Ruby), Grasshopper: A Mobile App to Learn Basic Coding (Javascript), A Gentle Introduction to Machine Fundamentals and Scratch: A Visual Programming Environment From MIT are some of the online resources you can refer to in order to pick up coding skills. Great Learning, one of India’s premier ed-tech platforms, even has a Python curriculum designed for beginners who want to transition smoothly from non-technical backgrounds into Artificial Intelligence and Machine Learning.

- Cloud Computing: Since almost all kinds of computing are hosted in the cloud today, basic knowledge of the cloud is essential to master deep learning. Beginners can start by understanding how cloud service providers work. Dive deep into concepts like compute, databases, storage and migration. Familiarity with major cloud service providers like AWS and Azure will also give you a competitive advantage. Cloud computing also requires an understanding of networking, which is a concept closely associated with machine learning. Clearly, these skills are not mutually exclusive, and familiarising yourself with these concepts will help you pick up each of the skills faster.

Now that we have covered the fundamentals of deep learning, it is time to dive deeper into the different ways in which Deep Learning can be put to use.

Deep Learning Types

- Deep Learning for Computer Vision: Deep learning methods used to teach computers image classification, object identification and face recognition fall under computer vision. Simply put, computer vision tries to replicate human perception and its various functions. Deep learning does that by feeding computers with information on:

1. Viewpoint variation: This is where an object is viewed from different perspectives so that its three-dimensional features are well recognised.

2. Difference in illumination: This refers to objects viewed in different lighting conditions.

3. Background clutter: This helps to distinguish obscure objects from a cluttered background.

4. Hidden parts of images: Objects which are partially hidden in pictures need to be identified.

- Deep Learning for Text and Sequence: Deep learning is used in several text and audio classification tasks, such as speech recognition, sentiment classification, machine translation, DNA sequence analysis, video activity recognition and more. In each of these cases, sequence models are used to train computers to understand, identify and classify information. Different kinds of recurrent neural networks, like many-to-many, many-to-one and one-to-many, are used for sentiment classification, object recognition and more.

- Generative Deep Learning: Generative models learn the distribution of the data through unsupervised learning. Variational Autoencoders (VAE) and Generative Adversarial Networks (GAN) aim to model that distribution well enough that computers can generate new data points as variations of the training data. A VAE maximises a lower bound on the log-likelihood of the data, whereas a GAN tries to strike a balance between a Generator and a Discriminator.
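For readers comfortable with the maths, the two generative objectives mentioned in the last item can be written out as follows. These are the standard textbook forms, and the notation is introduced here rather than taken from the text above: \(x\) is a data point, \(z\) a latent variable, \(q_\phi\) the VAE encoder, \(p_\theta\) the decoder, \(p(z)\) the prior, \(G\) the generator and \(D\) the discriminator.

```latex
% VAE: the right-hand side is the lower bound that training maximises
\log p_\theta(x) \;\ge\;
  \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big]
  - D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, p(z)\big)

% GAN: the generator minimises what the discriminator maximises
\min_G \max_D \;
  \mathbb{E}_{x \sim p_{\mathrm{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The first expression shows where the "lower bound" comes from. The second shows the balance between the two networks: the discriminator tries to tell real data from generated data, while the generator tries to make that impossible.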

Top Open Source Deep Learning Tools

Of the various deep learning tools available, these are the top freely available ones:

1. TensorFlow: One of the best frameworks, TensorFlow is used for natural language processing, text classification and summarization, speech recognition, translation and more. It is flexible and has a comprehensive set of libraries and tools which let you build and deploy ML applications. TensorFlow is mostly used with Python to develop deep learning solutions, where networks have several hidden layers (depth) in comparison to traditional machine learning models. Most of the data in the world is unstructured and unlabeled, which makes TensorFlow one of the best libraries to use for deep learning. In a TensorFlow computation graph, nodes represent operations while edges stand for the multidimensional data arrays (tensors) flowing between them.

2. Microsoft Cognitive Toolkit: Most effective for image, speech and text-based data, the Microsoft Cognitive Toolkit (CNTK) supports both CNN and RNN. For complex layer types, users can use a high-level language, and the fine granularity of the building blocks ensures smooth functioning.

3. Caffe: One of the deep learning tools built for scale, Caffe is designed with speed, modularity and expression in mind. It offers interfaces for C, C++, Python and MATLAB and is especially relevant for convolutional neural networks.

4. Chainer: A Python-based deep learning framework, Chainer provides automatic differentiation APIs based on the define-by-run approach (a.k.a. dynamic computational graphs). It can also build and train neural networks through high-level object-oriented APIs.

5. Keras: Again a framework that works with both CNNs and RNNs, Keras is a popular choice for many. Built on Python, it is capable of running on top of TensorFlow, CNTK or Theano, with TensorFlow as the default backend. It supports fast experimentation, going from idea to result with little delay. Keras is versatile: it supports both recurrent networks and convolutional neural networks and can also work with a combination of the two. Keras is popular for the user-friendliness of its simple API. Keras models are easier to debug because they are developed in Python. The compact models are easy to extend: new modules can be added directly as classes and functions, like building blocks.

6. Deeplearning4j: Also a popular choice, Deeplearning4j is a JVM-based, industry-focused, commercially supported, distributed deep-learning framework. The most significant advantage of using Deeplearning4j is speed. It can skim through massive volumes of data in very little time.
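The idea mentioned under TensorFlow, a graph whose nodes are operations and whose edges carry tensors, can be illustrated without installing any framework. The sketch below is plain Python and is not TensorFlow’s API; the `Node` class and the helper functions are hypothetical names invented for this example.

```python
class Node:
    """One operation in the graph; its inputs are the edges carrying values."""
    def __init__(self, op, inputs=()):
        self.op = op
        self.inputs = inputs

    def evaluate(self):
        # Values flow along the edges: evaluate the inputs, then apply the op.
        return self.op(*(node.evaluate() for node in self.inputs))

def constant(value):
    """A node with no inputs that simply produces a fixed value."""
    return Node(lambda: value)

def matvec(matrix, vector):
    """Matrix-vector product on plain nested lists."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def add(a, b):
    return [x + y for x, y in zip(a, b)]

def relu(v):
    return [max(0.0, x) for x in v]

# Hypothetical graph for one dense layer: relu(W @ x + b).
W = constant([[1.0, -2.0], [0.5, 0.5]])
x = constant([3.0, 1.0])
b = constant([0.0, -3.0])

product = Node(matvec, (W, x))
summed = Node(add, (product, b))
activated = Node(relu, (summed,))

print(activated.evaluate())
```

Nothing is computed while the graph is being built; the values only flow when `evaluate()` is called on the final node. Real frameworks add automatic differentiation and hardware acceleration on top of this basic structure.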

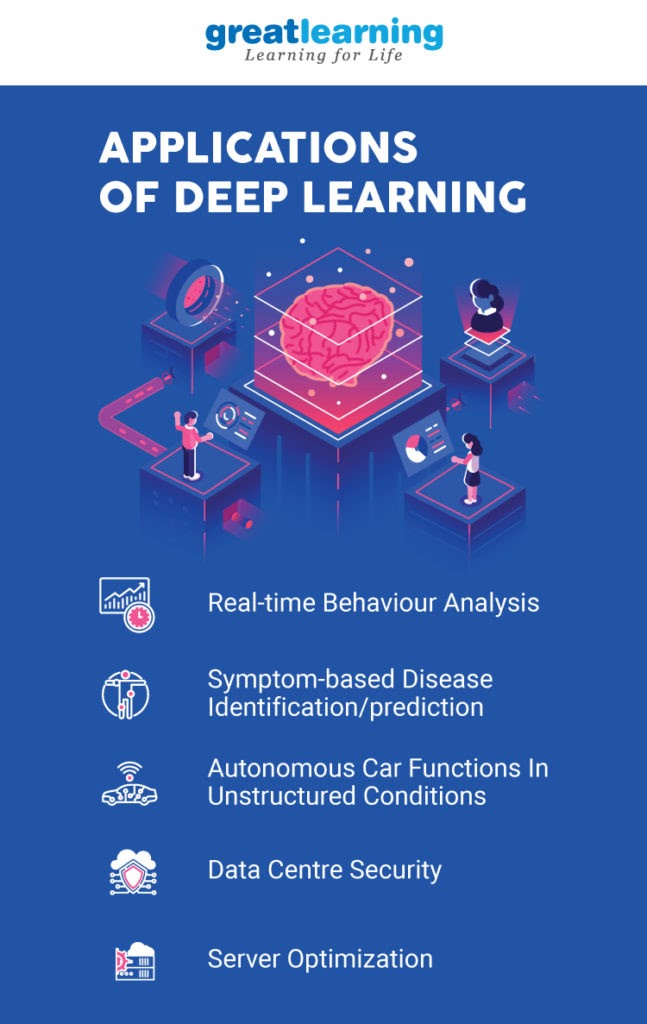

Commonly-Used Deep Learning Applications

- Virtual Assistants: Amazon Echo, Google Assistant, Alexa and Siri all exploit deep learning capabilities to build a customized user experience for you. They ‘learn’ to recognize your voice and accent and, by using deep neural networks that imitate not just the speech but also the tone of a human, offer a human-like experience through a machine. Virtual assistants help you shop, navigate, take notes and translate them to text, and even make salon appointments for you.

- Facial Recognition: The iPhone’s facial recognition uses deep learning to identify data points from your face to unlock your phone or spot you in images. Deep learning helps protect the phone from unwanted unlocks while keeping your experience hassle-free even when you have changed your hairstyle, lost weight or are in poor lighting. Every time you unlock your phone, deep learning uses thousands of data points to create a depth map of your face, and the inbuilt algorithm uses those to identify whether it is really you.

- Personalization: E-commerce and entertainment giants like Amazon and Netflix are building their deep learning capacities further to provide you with a personalized shopping or entertainment experience. Recommended items, series and movies that match your ‘pattern’ are all based on deep learning. Their businesses thrive on surfacing options that appeal to you based on your preferences, recently visited items, affinity for brands, actors and artists, and overall browsing history on their platforms.

- Natural Language Processing: One of the most critical technologies, Natural Language Processing is taking AI from good to great in terms of use, maturity and sophistication. Organizations are using deep learning extensively to handle the complexities of NLP applications. Document summarization, question answering, language modelling, text classification and sentiment analysis are some of the popular applications that are already picking up momentum. Several jobs worldwide that depend on human verbal and written language expertise may become redundant as NLP matures.

- Healthcare: Another sector to have seen tremendous growth and transformation is healthcare. From personal virtual assistants to fitness bands and gear, computers are recording a lot of data about a person’s physiological and mental condition every second. Early detection of diseases and conditions, quantitative imaging, robotic surgeries and the availability of decision-support tools for professionals are turning out to be game-changers in the life sciences, healthcare and medicine domain.

- Autonomous Cars: Uber AI Labs in Pittsburgh is doing some tremendous work to make autonomous cars a reality for the world. Deep learning, of course, is the guiding principle behind this initiative, as it is for all automotive giants. Trials are under way with several autonomous cars that learn better with more and more exposure. Deep learning enables a driverless car to navigate by exposing it to millions of scenarios, making for a safe and comfortable ride. Data from sensors, GPS and geo-mapping are combined in deep learning to create models that specialize in identifying paths, street signs and dynamic elements like traffic, congestion and pedestrians.

- Text Generation: Soon, deep learning will create original text (even poetry), as technologies for text generation are evolving fast. Everything from large datasets of text from the internet to Shakespeare is being fed to deep learning models so that they learn and emulate human creativity, with correct spelling, punctuation, grammar, style and tone. Deep learning is already generating captions and titles on a lot of platforms, which is testimony to what lies ahead.

- Visual Recognition: Convolutional neural networks enable digital image processing that can be further divided into facial recognition, object recognition, handwriting analysis and so on. Image recognition builds on digital image processing and uses artificial intelligence, especially machine learning methods, to make computers recognize the content of an image. Further applications include colouring black-and-white images and adding sound to silent movies, which have been very ambitious feats for data scientists and experts in the domain.

Conclusion

Deep learning is a subset of machine learning in which artificial neural networks, algorithms inspired by the human brain, learn from large amounts of data. Artificial intelligence is the broader field concerned with machines doing tasks that typically need human intelligence. A deep learning algorithm performs a task repeatedly, improving a little each time. We call it deep learning because the neural networks have several layers that enable learning.