A Complete Overview of the Big Data Analytics Course

The Big Data Analytics Training in Bangalore is designed to give learners in-depth knowledge of data processing, analysis, and visualization using tools such as Hadoop, Spark, and MongoDB. Participants gain hands-on experience with real-world datasets through project-based learning guided by industry experts. The certification course is available in flexible formats, including online, self-paced, and instructor-led sessions, to suit varied learning needs. Enrolling in the online course builds your technical skills and improves your employability in data-driven roles across industries. Completing the course and earning the Big Data Analytics certification can open up job opportunities in tech and analytics companies. Our training with placement support aims to make you job-ready, with the tools, techniques, and confidence to handle complex data challenges.

Additional Info

Emerging Future Trends of the Big Data Analytics Course

- AI-Powered Data Analysis:

Big Data Analytics training is rapidly integrating AI to enhance data interpretation and decision-making. Learners are now exposed to intelligent systems that automate pattern recognition and prediction. AI tools help simplify complex analytics tasks, making them faster and more accurate. Training programs include AI-driven algorithms for real-time insights. This trend empowers professionals to handle vast datasets more efficiently. As AI evolves, its role in analytics training will continue to grow.

- Real-Time Data Processing:

The demand for instant insights is driving the focus on real-time data analytics. Training now emphasizes tools like Apache Kafka and Spark Streaming. Learners gain skills to process and analyze live data feeds from diverse sources. This prepares them for environments where decisions must be made immediately. Real-time analytics is vital for sectors like finance, healthcare, and e-commerce. Future training will increasingly center around these high-speed processing systems.

- Cloud-Based Analytics Tools:

Cloud technology is transforming the way big data is stored and analyzed. Training programs now cover platforms like AWS, Azure, and Google Cloud. Learners explore scalable storage, distributed computing, and remote collaboration tools. The shift to cloud-based solutions reduces infrastructure costs and increases accessibility. Professionals trained in cloud analytics are more adaptable to hybrid work models. This trend makes analytics more flexible, efficient, and globally accessible.

- Data Privacy and Ethics:

With data volumes rising, privacy and ethics are becoming essential training components. Big Data Analytics courses now include lessons on GDPR, data anonymization, and ethical data usage. Learners understand the importance of securing personal information and maintaining compliance. Ethical considerations are integrated into case-based learning scenarios. This ensures professionals are prepared to navigate sensitive data issues responsibly. The future of data handling demands strong ethical awareness.
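
As a rough illustration of the data anonymization topic mentioned above, the sketch below replaces a sensitive field with a keyed hash. The function names, the record layout, and the secret key are hypothetical and chosen only for this example. Strictly speaking this is pseudonymization rather than full anonymization, since anyone holding the key can recompute the mapping.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice it would come from a secrets manager.
SECRET_KEY = b"replace-with-a-real-secret"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Return a stable keyed hash (HMAC-SHA256) of an identifier."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_record(record: dict, sensitive_fields: set) -> dict:
    """Copy a record, replacing sensitive fields with pseudonyms."""
    return {
        field: pseudonymize(str(val)) if field in sensitive_fields else val
        for field, val in record.items()
    }


record = {"email": "user@example.com", "city": "Bangalore", "purchases": 3}
masked = mask_record(record, {"email"})
print(masked)
```

Because the same input always maps to the same pseudonym, records can still be joined or counted per user without exposing the original email address.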

- Edge Computing Integration:

Edge computing is bringing analytics closer to data sources like IoT devices. Training programs are adapting by including edge processing concepts. Learners explore how to analyze data directly on devices with limited connectivity. This reduces latency and enhances real-time decision-making. Edge analytics is crucial in industries such as manufacturing, logistics, and smart cities. As edge computing expands, it will become a staple of analytics training.

- Visualization with Augmented Reality (AR):

AR is adding a new dimension to data visualization in training environments. Future Big Data courses are beginning to include AR tools for immersive data interpretation. Learners can interact with 3D graphs, models, and dashboards in real-time. This approach makes complex data more understandable and engaging. AR enhances learning retention and decision-making accuracy. As technology advances, AR will transform how analysts explore and present data.

- Automated Machine Learning (AutoML):

AutoML is simplifying model building by automating algorithm selection and tuning. Training in Big Data Analytics now incorporates AutoML platforms like H2O.ai and Google Cloud AutoML. Students learn to build effective models with minimal coding effort. This allows professionals to focus more on strategy and less on technical complexity. AutoML democratizes analytics, making it accessible to a broader audience. As a result, it’s becoming a core part of future-focused curriculums.

- Cross-Platform Data Integration:

As data sources multiply, integration across platforms is a key training focus. Courses now teach how to unify structured, unstructured, and semi-structured data. Learners practice connecting databases, APIs, and cloud services into one pipeline. This ensures seamless data flow and comprehensive insights. Integration skills are vital for holistic analytics strategies. The trend supports a more connected, intelligent data ecosystem.

- Domain-Specific Analytics:

Big Data training is shifting toward specialization in industries like finance, retail, and healthcare. Learners gain insights into domain-specific datasets, regulations, and KPIs. Customized projects and simulations prepare them for real-world challenges. This targeted approach makes training more relevant and impactful. Professionals become more valuable with tailored analytics knowledge. Future courses will offer increasingly niche-focused training paths.

- DataOps and Continuous Learning:

The rise of DataOps emphasizes agility, collaboration, and continuous delivery in analytics. Training now includes CI/CD for data pipelines, version control, and automated testing. Learners adopt a DevOps mindset for managing data workflows efficiently. This boosts productivity and ensures consistent data quality. Continuous learning is encouraged through evolving toolsets and real-time problem-solving. DataOps will become a foundational element in modern analytics education.

Essential Tools and Technologies for the Big Data Analytics Course

- Hadoop:

Hadoop is a foundational big data framework that allows distributed storage and processing of large datasets. In training, it helps learners understand data management using HDFS and MapReduce. It enables scalability across commodity hardware, making it cost-effective. Hadoop supports multiple data formats and sources. Mastering it is essential for building data processing pipelines.
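
To make the MapReduce idea concrete, here is a minimal single-machine sketch of the map, shuffle, and reduce phases applied to a word count. It is plain Python, not Hadoop code, and the function names are hypothetical; in a real cluster the phases run in parallel across nodes over data stored in HDFS.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in a line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine the values for one key into a single result."""
    return key, sum(values)


def word_count(lines):
    """Run the three phases in sequence over a list of text lines."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


counts = word_count(["big data tools", "big data pipelines"])
print(counts)
```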

- Apache Spark:

Apache Spark offers fast, in-memory data processing and supports advanced analytics like machine learning. It allows learners to perform large-scale data analysis with low latency. Training focuses on Spark Core, Spark SQL, Spark Streaming, and MLlib. Its flexibility makes it suitable for both batch and real-time tasks. Spark is a must-know tool for data engineers and scientists.

- Hive:

Hive simplifies querying big data using a SQL-like language called HiveQL. It is built on top of Hadoop and is ideal for structured data analysis. Training includes creating databases, tables, and running analytical queries. It bridges the gap between traditional SQL users and big data systems. Hive is widely used for data warehousing and summarization.
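
The kind of analytical query learners write in HiveQL can be previewed without a cluster. The sketch below uses Python's built-in `sqlite3` module purely as a stand-in; the `sales` table and its columns are hypothetical. The `SELECT ... GROUP BY` statement is also valid HiveQL, although Hive table definitions differ (for example, `STRING` and `DOUBLE` column types and storage clauses).

```python
import sqlite3

# In-memory SQLite database used only as a stand-in for a Hive table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("south", 120.0), ("north", 80.0), ("south", 30.0)],
)

# Summarize sales per region, the typical warehouse-style aggregation.
rows = conn.execute(
    "SELECT region, SUM(amount) AS total "
    "FROM sales GROUP BY region ORDER BY region"
).fetchall()
conn.close()

print(rows)
```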

- Kafka:

Apache Kafka is a distributed streaming platform used for building real-time data pipelines. It allows learners to understand event-driven architecture and data ingestion. Training includes producing, consuming, and processing high-volume data streams. Kafka is essential in modern data architectures requiring low-latency communication. Its scalability and fault-tolerance make it popular in production systems.
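
The produce-and-consume model described above can be sketched with a toy in-memory log. This is not the Kafka client API; `TopicLog` and `Consumer` are hypothetical classes that only illustrate two ideas: messages are appended to an ordered log, and each consumer tracks its own offset, so several consumers can read the same stream independently.

```python
class TopicLog:
    """Toy single-partition topic: an append-only list of messages."""

    def __init__(self):
        self._messages = []

    def produce(self, message):
        """Append a message and return its offset."""
        self._messages.append(message)
        return len(self._messages) - 1

    def read_from(self, offset, max_messages=10):
        """Return up to max_messages messages starting at the given offset."""
        return self._messages[offset:offset + max_messages]


class Consumer:
    """Keeps its own read position in the log."""

    def __init__(self, log):
        self._log = log
        self.offset = 0

    def poll(self, max_messages=10):
        """Fetch the next batch and advance this consumer's offset."""
        batch = self._log.read_from(self.offset, max_messages)
        self.offset += len(batch)
        return batch


log = TopicLog()
for event in ["click", "view", "purchase"]:
    log.produce(event)

analytics = Consumer(log)
billing = Consumer(log)

first = analytics.poll(max_messages=2)
rest = analytics.poll()
all_for_billing = billing.poll()
print(first, rest, all_for_billing)
```

A real Kafka deployment adds partitions, replication, and durable storage on top of this basic model.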

- Tableau:

Tableau is a leading data visualization tool used to convert complex data into interactive dashboards. In big data training, learners use Tableau to present analytical results clearly. It supports connection to large-scale databases and real-time feeds. Training includes chart building, filters, and storyboarding. Tableau skills are crucial for communicating data insights effectively.

- Python:

Python is a versatile programming language with rich libraries like Pandas, NumPy, and Matplotlib. Training covers data cleaning, transformation, and statistical analysis using Python. It enables automation and integration across various data platforms. Learners also explore machine learning with libraries such as Scikit-learn. Python’s simplicity makes it a go-to tool for big data professionals.

- MongoDB:

MongoDB is a NoSQL database that handles unstructured and semi-structured data efficiently. In training, learners understand document-oriented storage and flexible schema design. It's ideal for storing JSON-like data and scaling horizontally. MongoDB supports aggregation pipelines and indexing for analytics. It's widely used in data-centric applications with evolving structures.

- Apache Flink:

Apache Flink is designed for real-time data stream processing and complex event handling. It enables learners to build systems that process continuous flows of data. Flink supports event time processing, fault-tolerance, and high throughput. It's particularly valuable in financial analytics and monitoring systems. Training includes working with streaming APIs and deploying Flink jobs.
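
Event-time processing is easier to grasp with a small example. The sketch below, in plain Python rather than the Flink API, counts events in fixed ten-second (tumbling) windows based on the timestamp each event carries, not the order in which it arrives. The function name and event layout are hypothetical, and the watermarks Flink uses to decide when a window is complete are left out.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per fixed, non-overlapping event-time window.

    Each event is a (event_time_seconds, key) tuple. Events are assigned
    to a window by their own timestamp, so out-of-order arrival is fine.
    """
    counts = defaultdict(int)
    for event_time, key in events:
        window_start = (event_time // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


# Events arrive out of order: timestamp 3 shows up after timestamp 12.
events = [(12, "login"), (3, "login"), (14, "trade"), (7, "login")]
result = tumbling_window_counts(events, window_seconds=10)
print(result)
```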

- Elasticsearch:

Elasticsearch is a powerful search and analytics engine used for handling large text-based datasets. Training teaches indexing, full-text search, and real-time querying capabilities. It’s useful in log analytics, recommendation engines, and operational monitoring. Learners explore how to visualize data using Kibana. Elasticsearch adds speed and flexibility to big data systems.
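
Full-text search engines such as Elasticsearch are built around an inverted index, which maps each term to the documents that contain it. The sketch below is a simplified pure-Python version with hypothetical function names and sample log lines; it does not use the Elasticsearch client, and it skips relevance scoring and the richer text analysis a real engine performs.

```python
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and strip basic punctuation from each token."""
    tokens = (t.strip(".,!?").lower() for t in text.split())
    return [t for t in tokens if t]


def build_index(docs):
    """Map every term to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index


def search(index, query):
    """Return ids of documents containing every query term (AND search)."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


docs = {
    1: "Disk error on node A.",
    2: "Login error for user",
    3: "Disk usage normal",
}
index = build_index(docs)
hits = search(index, "disk error")
print(hits)
```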

- Talend:

Talend is a data integration platform, long known for its open-source Talend Open Studio edition, that simplifies ETL (Extract, Transform, Load) processes. In training, learners build workflows to move and cleanse data across systems. It supports cloud and on-premise environments with drag-and-drop interfaces. Talend integrates well with Hadoop, Spark, and cloud platforms. It is a useful tool for managing complex data pipelines effectively.
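
The three ETL stages can be sketched in a few lines of plain Python. This is not Talend itself, which is configured through a visual designer; the sample data, field names, and the list standing in for a warehouse are all hypothetical.

```python
import csv
import io

# Hypothetical raw input with stray whitespace and a missing amount.
RAW_CSV = """id,name,amount
1, Asha ,100
2,Ravi,
3,Meera,250
"""


def extract(text):
    """Extract: parse CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim whitespace, cast types, drop rows with no amount."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip incomplete records
        cleaned.append(
            {
                "id": int(row["id"]),
                "name": row["name"].strip(),
                "amount": float(amount),
            }
        )
    return cleaned


def load(rows, target):
    """Load: append cleaned rows to the target store; return rows loaded."""
    target.extend(rows)
    return len(rows)


warehouse = []  # stand-in for a data warehouse table
loaded = load(transform(extract(RAW_CSV)), warehouse)
print(loaded, warehouse)
```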

Essential Roles and Responsibilities in Big Data Analytics

- Data Analyst:

A Data Analyst focuses on extracting insights from complex datasets. In Big Data Analytics training, analysts learn to clean, organize, and visualize data using tools like Excel, SQL, and Tableau. Their role involves identifying patterns, trends, and key performance indicators. Training strengthens their statistical thinking and business understanding. Analysts act as the bridge between data and decision-makers. They help companies make informed, data-backed choices.

- Data Engineer:

Data Engineers are trained to build and manage data pipelines and architectures. They work with tools like Hadoop, Spark, and Kafka to handle large-scale data movement. In training, they master techniques to store, transform, and optimize data for analytics. Their responsibilities include ensuring data integrity and system scalability. They play a crucial role in preparing data for analysts and scientists. A solid foundation in programming and cloud technologies is essential.

- Big Data Developer:

Big Data Developers focus on creating applications that process massive datasets in distributed systems. They gain skills in Java, Scala, Python, and big data platforms like Hive and Pig. During training, they learn to build real-time and batch processing solutions. Their work supports analytics, reporting, and operational tools. Developers ensure that big data solutions run efficiently and securely. They are vital in implementing scalable analytics frameworks.

- Data Scientist:

Data Scientists use big data to build predictive models and perform advanced statistical analysis. In training, they explore machine learning, AI integration, and data visualization techniques. They work with large, unstructured data from various sources and derive valuable insights. Their role involves experimenting, coding, and testing data models for accuracy. Scientists solve complex problems and influence business strategy through data. This role requires analytical creativity and technical expertise.

- Business Intelligence Analyst:

BI Analysts are responsible for turning big data into actionable business intelligence. Training teaches them to use tools like Power BI and Tableau for dashboard creation. They work closely with stakeholders to define KPIs and data-driven goals. Their job is to ensure that business leaders can make decisions based on real-time insights. BI Analysts align business objectives with data outcomes. They help organizations monitor and improve performance.

- Data Architect:

Data Architects design the blueprint of big data systems and infrastructure. They are trained in data modeling, storage solutions, and cloud integration. Their role ensures data flows seamlessly between sources, processing systems, and analytics tools. Architects focus on scalability, performance, and data security. They guide engineers and developers in implementing robust systems. Their planning enables efficient long-term data handling.

- Machine Learning Engineer:

Machine Learning Engineers apply algorithms to build intelligent systems using big data. In training, they learn about supervised and unsupervised learning, NLP, and deep learning. Their role requires handling high-volume datasets and training predictive models. They automate analytics and make systems smarter over time. This role demands coding expertise and mathematical fluency. They work closely with data scientists to deploy scalable solutions.

- ETL Developer:

ETL Developers specialize in Extract, Transform, Load processes in big data environments. Training covers tools like Talend, Informatica, and Apache NiFi. They ensure that data is cleansed, structured, and loaded into data warehouses or lakes. ETL Developers play a vital role in data preparation for analysis. They maintain the reliability and performance of data pipelines. Their work supports accurate and timely analytics delivery.

- Big Data Consultant:

Big Data Consultants guide organizations on how to implement data-driven strategies effectively. Training equips them with both technical and business knowledge. They evaluate current data capabilities and recommend tools, platforms, and architectures. Consultants help bridge the gap between data solutions and business value. They work across industries to optimize performance through analytics. Their insights help companies gain a competitive advantage.

- Data Visualization Specialist:

Visualization Specialists transform raw data into clear, engaging visuals that tell a story. In training, they master tools like Tableau, D3.js, and Power BI. They work with analysts and scientists to communicate findings visually. Their charts and dashboards enable quick interpretation of complex metrics. This role combines design thinking with data literacy. They play a key role in making data accessible to non-technical audiences.

Leading Companies Looking for Big Data Analytics Experts

- Amazon:

Amazon consistently recruits big data professionals to optimize customer experiences, streamline logistics, and enhance recommendation systems. With AWS leading in cloud services, Amazon needs talent proficient in analytics tools like Hadoop and Spark. Their data-driven culture demands continuous innovation powered by insights. Professionals with training in big data are crucial for A/B testing, fraud detection, and supply chain forecasting. A strong analytics team helps maintain their global competitive edge.

- Google:

Google utilizes massive datasets to power everything from search results to ad targeting. Professionals with big data training play a vital role in developing machine learning models and improving user personalization. Google Cloud Platform also provides services that require internal expertise and client-facing support. The company values individuals who can extract insights from structured and unstructured data. Their scale and diversity of projects make big data expertise essential.

- IBM:

IBM, a pioneer in data science, continuously seeks big data professionals to support its AI and cloud initiatives. With platforms like Watson and offerings in hybrid cloud, IBM integrates big data into enterprise-level solutions. Trained professionals are needed for client consulting, product development, and research. The company values individuals with strong backgrounds in analytics, statistics, and cloud integration. Their focus on digital transformation makes big data expertise highly sought-after.

- Accenture:

Accenture works with global clients to deliver data-driven strategies, requiring constant hiring of analytics-trained professionals. Their consulting services rely on real-time insights and predictive modeling. Accenture offers solutions in customer behavior analysis, supply chain optimization, and risk mitigation. Professionals trained in big data help deliver results that align with digital transformation goals. Their cross-industry clientele demands versatile analytics capabilities.

- Capgemini:

Capgemini prioritizes big data in its digital and cloud services for clients across industries. Their need for analytics professionals spans data engineering, AI integration, and performance analysis. Capgemini’s Insight & Data practice specifically targets individuals trained in big data technologies. Their transformation projects require people who can turn complex data into actionable strategies. The company fosters a strong learning culture, encouraging ongoing skill development in analytics.

- Deloitte:

Deloitte hires big data professionals to strengthen its advisory and consulting services. They use advanced analytics for business audits, financial forecasting, and operational efficiency. Deloitte’s analytics practice integrates AI, machine learning, and data visualization tools. Trained individuals are essential for helping clients harness the power of big data in real time. With a wide industry reach, Deloitte offers diverse opportunities for analytics professionals.

- Facebook (Meta):

Meta leverages big data to power its ad algorithms, user engagement metrics, and content moderation systems. The company requires skilled professionals who can navigate petabytes of data daily. Analytics training is vital to ensure data integrity, optimize performance, and create predictive models. Meta’s products—from Instagram to Oculus—rely heavily on user data for growth strategies. Professionals who understand big data tools are central to innovation and privacy controls.

- Oracle:

Oracle seeks big data experts to support its database management and cloud infrastructure solutions. Their offerings in autonomous databases and analytics platforms demand skilled professionals for customization and optimization. Oracle Cloud uses advanced analytics to serve enterprise clients, making trained talent indispensable. The company’s emphasis on real-time data and secure analytics positions it as a leader in this space. Professionals with expertise in SQL, NoSQL, and data lakes are highly valued.

- SAP:

SAP uses big data analytics to empower its enterprise software solutions, especially through SAP HANA and cloud analytics. Professionals trained in data integration and visualization help deliver business insights to global clients. SAP’s focus on intelligent enterprise transformation hinges on strong analytics capabilities. The company often collaborates with industries like manufacturing, finance, and retail. It relies on analytics experts to drive innovation and customer success.

- TCS (Tata Consultancy Services):

TCS consistently hires big data-trained professionals to support digital transformation projects across various sectors. Their global clientele requires data solutions for everything from customer personalization to risk modeling. TCS has a robust analytics and insights division where professionals use tools like Spark, Python, and Azure. Big data training is a key qualifier for many of their consulting and tech roles. The company's scale ensures ongoing demand for skilled data professionals.

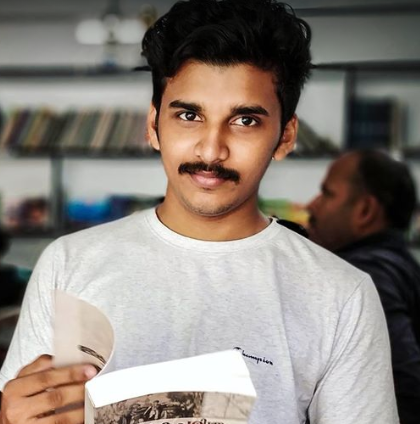

Regular 1:1 Mentorship From Industry Experts
