- Get 50+ SAP BW on HANA Interview Questions and Answers

- Data Warehousing Interview Questions and Answers

- ODI Interview Questions and Answers

- Ab Initio Interview Questions and Answers

- Data Warehouse Interview Questions and Answers

- Netezza Interview Questions and Answers

- SAP Bods Interview Questions and Answers

- SAS DI Interview Questions and Answers

- Datastage Interview Questions and Answers

- SSIS Interview Questions and Answers

- Informatica MDM Interview Questions and Answers

- Informatica Interview Questions and Answers

- Get 50+ SAP BW on HANA Interview Questions and Answers

- Data Warehousing Interview Questions and Answers

- ODI Interview Questions and Answers

- Ab Initio Interview Questions and Answers

- Data Warehouse Interview Questions and Answers

- Netezza Interview Questions and Answers

- SAP Bods Interview Questions and Answers

- SAS DI Interview Questions and Answers

- Datastage Interview Questions and Answers

- SSIS Interview Questions and Answers

- Informatica MDM Interview Questions and Answers

- Informatica Interview Questions and Answers

Datastage Interview Questions and Answers

Last updated on 05th Oct 2020, Blog, Datawarehouse, Interview Question

The IBM InfoSphere DataStage®\ offering is an industry-leading cloud-ready data integration solution. It provides real-time delivery of trusted data into data lakes, data warehouses, or any other multi or hybrid cloud environment to feed business-ready data into AI applications. Real-time analytics is now easier than ever, with a cloud-native architecture built on containers and microservices.

1.Highlight the main features of Datastage?

Ans:

The main features of Datastage are highlighted below:

- It is the data integration component of the IBM Infosphere information server.

- It is a GUI based tool. We just need to drag and drop the Datastage objects and we can convert it to Datastage code.

- It is used to perform the ETL operations (Extract, Transform, Load)

- It provides connectivity to multiple sources & multiple targets at the same time

- It provides partitioning and parallels processing techniques that enable the Datastage jobs to process a huge volume of data quite faster.

- It has enterprise-level connectivity.

2.What are the primary usages of the Datastage tool?

Ans:

Datastage is an ETL tool that is primarily used for extracting data from source systems, transforming that data and finally loading it to target systems.

3.What are the main differences you have observed between 7.x and 8.x versions of DataStage?

Ans:

Here are the main differences between both the versions

| 7.x | 8.x |

|---|---|

| 7.x version was platform dependent | This version is platform independent |

| It has 2-tier architecture where datastage is built on top of Unix server | It has 3-tier architecture where we have a UNIX server database at the bottom then XMETA database which acts as a repository and then we have datastage on top. |

| There is no concept of parameter set | We have parameter sets which can be used anywhere in the project. |

| We had designer and manager as two separate clients | In this version, the manager client was merged into designer client |

| We had to manually search for the jobs in this version | Here we have a quick find option in the repository where we can search easily for the jobs. |

4.Can you highlight the main features of the IBM Infosphere information server?

Ans:

The main features of IBM Infosphere information server suite are:

- It provides a single platform for data integration. It has the capability to connect to multiple source systems as well as write to multiple target systems.

- It is based on centralized layers. All the components of the suite are able to share the baseline architecture of the suite.

- It has layers for the unified repository, for integrated metadata services and a common parallel engine.

- It provides tools for analysis, cleansing, monitoring, transforming and delivering data.

- It has massively parallel processing capabilities. It turns out the processing to be very fast.

5.What are the different layers in the information server architecture?

Ans:

Below are the different layers of information server architecture

- Unified user interface

- Common services

- Unified parallel processing

- Unified Metadata

- Common connectivity

6.What could be a data source system?

Ans:

It could be a database table, a flat-file, or even an external application like people soft.

7.On which interface you will be working as a developer?

Ans:

As a Datastage developer, we work on the Datastage client interface which is known as a Datastage designer that needs to be installed on the local system. In the backend, it is connected to the Datastage server.

8.What are the different common services in Datastage?

Ans:

Below is the list of common services in Datastage:

- Metadata services

- Unified service deployment

- Security services

- Looping and reporting services.

9.How do you start developing a Datastage project?

Ans:

The very first step is to create a Datastage job on the Datastage server. All the Datastage objects that we create are stored in the Datastage project. A Datastage project is a separate environment on the server for jobs, tables, definitions, and routines.

A Datastage project is a separate environment on the server for jobs, tables, definitions, and routines.

10.What is a DataStage job?

Ans:

The Datastage job is simply a DataStage code that we create as a developer. It contains different stages linked together to define data and process flow.

Stages are nothing but the functionalities that get implemented.

For Example:

Let’s assume that I want to do a sum of the sales amount. This can be a ‘group by’ operation that will be performed by one stage.

Now, I want to write the result to a target file. So, this operation will be performed by another stage. Once, I have defined both the stages, I need to define the data flow from my ‘group by’ stage to the target file stage. This data flow is defined by DataStage links.

Once, I have defined both the stages, I need to define the data flow from my ‘group by’ stage to the target file stage. This data flow is defined by DataStage links.

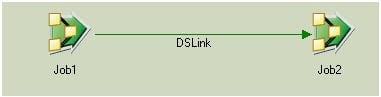

11.What are DataStage sequences?

Ans:

Datastage sequence connects the DataStage jobs in a logical flow.

12.If you want to use the same piece of code in different jobs, how will you achieve this?

Ans:

This can be done by using shared containers. We have shared containers for reusability. A shared container is a reusable job element consisting of stages and links. We can call a shared container in different Datastage jobs.

Subscribe For Free Demo

Error: Contact form not found.

13.Where do the Datastage jobs get stored?

Ans:

The Datastage jobs get stored in the repository. We have various folders in which we can store the Datastage jobs.

14.Where do you see different stages in the designer?

Ans:

All the stages are available within a window called ‘Palette’. It has various categories depending upon the kind of function that the stage provides.

The various categories of stages in the Palette are – General, Data Quality, Database, Development, File, Processing, etc.

15.What are the Processing stages?

Ans:

The processing stages allow us to apply the actual data transformation.

For example,

The ‘aggregator’ stage under the Processing category allows us to apply all the ‘group by’ operations. Similarly, we have other stages in Processing like the ‘Join’ stage that allows us to join together the data coming from two different input streams.

16.What are the steps needed to create a simple basic Datastage job?

Ans:

Click on the File -> Click on New -> Select Parallel Job and hit Ok. A parallel job window will open up. In this Parallel job, we can put together different stages and define the data flow between them. The simplest DataStage job is an ETL job.

In this, we first need to extract the data from the source system for which we can use either a file stage or database stage because my source system can either be a database table or a file.

Suppose we are reading data from a text file. In this case, we will drag and drop the ‘Sequential File’ stage to the parallel job window. Now, we need to perform some transformation on top of this data. We will use the ‘Transformer’ stage which is available under the Processing category. We can write any logic under the Transformer stage.

Finally, we need to load the processed data to some target table. Let’s say my target database is DB2. So, for this, we will select the DB2 connector stage. Then we will be connecting these data states through sequential links.

After this, we need to configure the stages so that they point to the correct filesystem or database.

For example,

For the Sequential file stage, we need to define the mandatory parameters like the file name, file location, column metadata.

Then we need to compile the Datastage job. Compiling the job checks for the syntax of the job and creates an executable file for the Datastage job that can be executed at run time.

17.Name the different sorting methods in Datastage.

Ans:

There are two methods available:

- Link sort

- Inbuilt Datastage Sort

18.In a batch if a job fails in between and you want to restart the batch from that particular job and not from the scratch then what will you do?

Ans:

In Datastage, there is an option in the job sequence – ‘Add checkpoints so the sequence is restart-able on failure’. If this option is checked, then we can rerun the job sequence from the point where it failed.

19.How do you import and export the Datastage jobs?

Ans:

For this, below command-line functions for this

- Import: dsimport.exe

- Export: dsexport.exe

20.What are routines in Datastage? Enlist various types of routines.

Ans:

Routine is a set of functions that are defined by the DS manager. It is run via the transformer stage.

There are 3 kinds of routines:

- Parallel routines

- Mainframe routines

- Server routines

21.How do you remove duplicate values in DataStage?

Ans:

There are two ways to handle duplicate values

- We can use remove duplicate stage to eliminate duplicates.

- We can use the Sort stage to remove duplicate. The sorting stage has a property called ‘allow duplicates’. We won’t get duplicate values in the output of sort on setting this property equal to false.

22.What are the different kinds of views available in a Datastage director?

Ans:

There are 3 kinds of views available in the Datastage director. They are:

- Log view

- Status view

- Job view

23.Distinguish between Informatica & Datastage. Which one would you choose and why?

Ans:

Both Informatica and DataStage are powerful ETL tools.

Enlisted points differentiate between both the tools:

| Informatica | Datastage | |

|---|---|---|

| Parallel Processing | Informatica does not support parallel processing. | In contrast to this, datastage provides a mechanism for parallel processing. |

| Implementing SCDs | It is quite simple to implement SCDs (Slowly changing dimensions) in Informatica. | However, it is complex to implement SCDs in datastage. Datastage supports SCDs merely through custom scripts. |

| Version Control | Informatica supports version controlling through check-in and check-out of objects. | However, we don’t have this functionality available in datastage. |

| Available Transformations | Lesser transformations are available. | Datastage offers more variety of transformations than Informatica. |

| Power of lookup | Informatica provides very powerful dynamic cache lookup | We don’t have any similar thing in the datastage. |

24.Give an idea of system variables.

Ans:

System variables are the read-only variables beginning with ‘@’ which can be read by either the transformer stage or routine. They are used to get the system information.

25.What is the difference between passive stage and active stage?

Ans:

Passive stages are utilized for extraction and loading whereas active stages are utilized for transformation.

26.What are the various kinds of containers available in Datastage?

Ans:

We have below 2 containers in Datastage:

- Local container

- Shared container

27.Is the value of the staging variable stored temporarily or permanently?

Ans:

Temporarily. It is a temporary variable.

28.What are the different types of jobs in Datastage?

Ans:

We have two types of jobs in Datastage:

- Server jobs (They run in a sequential manner)

- Parallel jobs (They get executed in a parallel way)

29.What is the use of a Datastage director?

Ans:

Through the Datastage director, we can schedule a job, validate the job, execute the job and monitor the job.

30.What are the various kinds of the hash file?

Ans:

We have 2 types of hash files:

- Static hash file

- Dynamic hash file

31.What is a quality stage?

Ans:

The quality stage (also called the integrity stage) is a stage that aids in combining the data together coming from different sources.

32.What are the types of stages?

Ans:

- General Objects

- Stages of Data Quality

- Development and Debug Stages

- Database connectors

- Restructure stages

- Real-time stages

- Debug and Development stages

- Sequence activities

33.What are the components of DataStage?

Ans:

DataStage has the number of client and server components. It has four main components, namely:

- Datastage Designer

- Datastage Director

- Datastage Manager

- Datastage Administrator

34.Explain a few features of DataStage.

Ans:

- Extracts data from any number or types of database.

- Handles all the metadata definitions required to define your data warehouse.

- You can view and modify the table definitions at any point during the design of your application.

- Aggregates data

- You can modify SQL SELECT statements used to extract data

- DataStage transforms data easily. It has a set of predefined transforms and functions.

- You can use it to convert your data and you can easily extend the functionality by defining your own transforms to use.

- Loads the data warehouse

35.What are the jobs available in DataStage?

Ans:

- Server job

- Parallel job

- Sequencer job

- Container job

36.State the difference between Server job and parallel job

Ans:

Server Jobs work in a sequential way while parallel jobs work in parallel fashion (Parallel Extender works on the principle of pipeline and partition) for I/O processing.

37.At which location DataStage repository is stored?

Ans:

DataStage stores its repository in IBM Universe Database.

38.Who are the DataStage clients or users?

Ans:

DataStage tool can be used by-

- DataStage Administrator

- DataStage Designer

- DataStage Manager

- DataStage Director

39.Explain Metadata.

Ans:

Data about data is known as metadata. A table definition describing the structure of the table is known as metadata.

40.State maps and locales

Ans:

Maps: Maps defines the sets that can be utilized in a project

Locales: Locales define the local formats for date, time, sorting order, etc. that can be utilized in a project.

41.State the types of server components.

Ans:

- Repository

- DataStage Server

- DataStage Package Installer

42.Define universal stage

Ans:

A stage that extracts data from or loads data into a Universe database using SQL. It is used to represent a data source, an aggregation step, or a target data table.

43.What is a dynamic array in DataStage?

Ans:

Dynamic arrays map the structure of DataStage file records to character string data. Any character string can be a dynamic array. A dynamic array is a character string containing elements that are substrings separated by delimiters.

44.What are the components of Ascential Datastage?

Ans:

Client Components: Administrator, Director, Manager, and Designer.

Server Components: Repository, Server, and Plugins.

45.What are the two types of containers used in DataStage?

Ans:

DataStage has two types of containers – Local Container and Shared Container

46.What is the hash file stage in DataStage?

Ans:

Hash file stage is a binary file used for lookup, for better performance.

47.Define state staging variable

Ans:

Staging variables are the temporary variables created in the transformer for calculation.

48.How can you convert the columns to rows in DataStage?

Ans:

The conversion of columns into rows can be done in DataStage utilizing pivot stage.

49.What is the merge stage?

Ans:

The Merge stage combines a sorted master data set with one or more sorted update data sets. The columns from the records in the master and update data sets are merged so that the output record contains all the columns from the master record plus any additional columns from each update record.

50.State the term repository.

Ans:

A repository is a central store that contains all the information required to build a data mart or data warehouse.

51.What is the work of a DataStage server?

Ans:

A DataStage server runs executable jobs that extract, transform, and load data into a data warehouse.

52.State a few advantages of DataStage.

Ans:

A data warehousing strategy has the following advantages :

- Capitalizes on the potential value of the organization’s information.

- Improves the quality and accessibility of data.

- Combines valuable archive data with the latest data in operational sources.

- Increases the amount of information available to users.

- Reduces the requirement of users to access operational data.

- Reduces the strain on IT departments, as they can produce one database to serve all user groups.

- Allows new reports and studies to be introduced without disrupting operational systems.

- Promotes users to be self-sufficient.

53.We have two containers in DataStage – local and shared containers, what does it mean?

Ans:

Local Container is local to the particular job in which we have developed the container and Shared Container can be used in any other jobs.

54.I have stored my data at orabulk stage, what does it mean?

Ans:

OraBulk stage is used to Bulk Load the Oracle Target Database. By storing data at the OraBulk stage of DataStage means your data is stored at the Oracle Target Database.

55.Define Active and Passive stages.

Ans:

Passive Stage is used for data extraction and loading

Active Stage is used to implement and process the business rules

56.What do you mean by data repository?

Ans:

MetaData is a data about the data. It contains-

- Query statistics

- ETL statistics

- Business subject area

- Source information

- Target information

- Source to target mapping Information

57.What is meant by join stage and merge stage?

Ans:

Join stage is used to perform join operations on one or more data sets input to the stage and then outputs the resulting dataset.

Merge stage is used to combine a sorted master data set with one or more sorted updated data sets. The columns from the records in the master and update data sets

arc merged so that the output record contains all the columns from the master record plus any additional columns from each update record that required.

58.What is the default cache size in DataStage?

Ans:

The default cache size in DataStage is 256 mb.

59.Can you reuse the components? If yes, then how?

Ans:

The components in DataStage can be reused by using shared and local containers.

60.How can we eliminate duplicate rows?

Ans:

DataStage provides us the capability to eliminate duplicate rows in EE (Enterprise Edition) only. Using EE of DataStage, the elimination of duplicate rows can be done based on a key column.

61.What command line functions are used to import and export the DataStage jobs?

Ans:

dsimport.exe command-line function is used to import the DataStage components

dsexport.exe command-line function is used to export the DataStage components

62.Did you use the conditional scheduling in your project? What is the use of it?

Ans:

Once during my project, I have used conditional scheduling. Using Sequencer Job we can create conditional scheduling.

63.What can we do with the DataStage Director?

Ans:

Validating, Scheduling, Executing, and Monitoring Jobs (server Jobs).

64.What is a Hash file and a Sequential file?

Ans:

Hash file saves data on a hash algorithm and on a hash key value, while a sequential file doesn’t have any key value to save the data. Based on this hash key feature, searching in a Hash file is faster than in a sequential file.

65.What is meant by NLS in DataStage?

Ans:

NLS is referred to as National Language Support

66.Explain integrity stage?

Ans:

Integrity stage is also known as the quality stage that helps in assistance in integrating various types of data from different sources.

67.Describe Oconv () And Iconv () Functions In Datastage?

Ans:

In Datastage, OConv () and IConv() functions are used to convert formats from one format to another i.e. conversions of time, roman numbers, radix, date, numeral ASCII etc. IConv () is mostly used to change formats for the system to understand. While, OConv () is used to change formats for users to understand.

68.Explain BCP stage?

Ans:

The BCP stage is used to store a big amount of data in one target table of Microsoft SQL Server.

69.What is the need of a link partitioner and link collector in DataStage?

Ans:

In Datastage, Link Partitioner is used to split data into various parts by certain partitioning methods. Link Collector is used to collect data from many partitions to a single data and save it in the target table.

70.State the difference between DataStage and DataStage TX?

Ans:

Datastage is a tool from ETL (Extract, Transform and Load) and DataStage TX is a tool from EAI (Enterprise Application Integration).

71.What is the use of Surrogate key?

Ans:

In Datastage, Surrogate Key is used instead of unique key. Surrogate key is mostly used for retrieving data faster. It uses Index to perform the retrieval operation. Surrogate key is a 4-byte integer which replaces the transaction / business / OLTP key in the dimension table. We can store up to 2 billion records.

72.Why is the surrogate key needed?

Ans:

The need of Surrogate key is for integrating the data may help better for the primary key. Additionally, it is used for index maintenance, joins, table size, key updates, disconnected inserts and partitioning.

73.How will you declare a constraint in DataStage?

Ans:

Constraints are expressions and you can specify a constraint for each output link from a transformer stage. You can also specify that a particular link is to act as an otherwise link and catch those rows that have failed to satisfy the constraints on all other output links.

- Select an output link and click the constraints button

- Double-click the output link’s constraint entry field

- Choose Constraints from the background or header shortcut menus

- A dialog box will appear which allows you either to define constraints for any of the transformer output links or to define a link as an otherwise link

74.How to execute a DataStage job from the command line?

Ans:

- Using “dsjob”. Following is the command:

- dsjob -run -jobstatus projectname jobname

75.What components are there in DataStage architecture?

Ans:

DataStage has three main components:

- 1.Client component

- DataStage Administrator

- DataStage Manager

- DataStage Designer

- DataStage Director

- DataStage Engine

- MetaData Repository

- Package Installer

- Used to create the project

- Contains set of properties

76.Explain Types of Fact Tables?

Ans:

- Factless Fact: It contains only foreign keys to the dimension tables.

- Additive Fact: Measures can be added across any dimensions.

- Semi-Additive: Measures can be added across some dimensions. Eg. % age, discount

- Non-Additive: Measures cannot be added across any dimensions. Eg. Average

- Conformed Fact: The equation or the measures of the two fact tables are the same under the facts are measured across the dimensions with the same set of measures.

77.What are the types of dimension tables?

Ans:

- Conformed Dimension: If a dimension table is connected to more than one fact table, the granularity that is defined in the dimension table is common across between the fact tables.

- Junk Dimension: The Dimension table, which contains only flags.

- Monster Dimension: If rapidly changes occur in dimension then it is known as Monster Dimension.

- Degenerate Dimension: It is line item-oriented fact table design.

78.What are Macros?

Ans:

They are built from DataStage functions and do not require arguments. A number of macros are provided in the JOBCONTROL.H file to facilitate getting information about the current job, and links and stages belonging to the current job. These can be used in expressions (for example for use in Transformer stages), job control routines, filenames and table names, and before/after subroutines.

79.What is Conformed Dimension?

Ans:

In DataStage, a conformed dimension is a dimension that has the same meaning to every fact with what it relates. It can exist as a single dimension table that relates to multiple fact tables within the same data warehouse, or as identical dimension tables in separate data marts.

80.Difference between Validated Ok and Compiled in DataStage

Ans:

Validating a job is all about running the job in ‘Check only’ mode.

The checks that will perform are-

- The connections are established to data sources or data warehouse

- The SELECT statements are prepared

- Intermediate files are opened in Hashed file, UniVerse or ODBC stages

The compilation is done by-

- Checking data stage engine to determine whether all needed properties are given

- Upon selecting Validate Job, the data stage checks the validity of all given properties

81.When do we use a parallel job and a server job?

Ans:

Using the parallel job or a server job depends on the processing need, functionality, time to implement, and cost. The server job usually runs on a single node, it executes on a DataStage Server Engine and handles small volumes of data. The parallel job runs on multiple nodes; it executes on a DataStage Parallel Engine and handles large volumes of data.

82.What is Usage Analysis in DataStage?

Ans:

If we want to check whether a certain job is part of the sequence, then we need to right-click on the Manager on the job and then choose the Usage Analysis.

83.How to find the number of rows in a sequential file?

Ans:

For counting the number of rows in a sequential file, we should use the @INROWNUM variable.

84.What is the difference between a sequential file and a hash file?

Ans:

The hash file is based on a hash algorithm, and it can be used with a key value. The sequential file, on the other hand, does not have any key-value column. The hash file can be used as a reference for a lookup, while a sequential file cannot be used for a lookup. Due to the presence fo the hash key, the hash file is easier to search than a sequential file.

85.How do we clean a DataStage repository?

Ans:

For cleaning a DataStage repository, we have to go to DataStage Manager > Job in the menu bar > Clean Up Resources.

If we want to further remove the logs, then we need to go to the respective jobs and clean up the log files.

86.What is the difference between an Operational DataStage and a Data Warehouse?

Ans:

An Operational DataStage can be considered as a staging area for real-time analysis for user processing; thus it is a temporary repository. Whereas, the data warehouse is used for long-term data storage needs and has the complete data of the entire business.

87.What is job control in DataStage?

Ans:

This tool is used for controlling a job or executing multiple jobs in a parallel manner. It is deployed using the Job Control Language within the IBM DataStage tool.

88.How to do DataStage jobs performance tuning?

Ans:

First, we have to select the right configuration files. Then, we need to select the right partition and buffer memory. We have to deal with the sorting of data and handling null-time values. We need to try to use modify, copy, or filter instead of the transformer. Reduce the propagation of unnecessary metadata between various stages.

89.Compare massive parallel processing with symmetric multiprocessing.

Ans:

In massive parallel processing, many computers are present in the same chassis. While in the symmetric multiprocessing, there are many processors that share the same hardware resources. Massive parallel processing is called ‘shared nothing’ as there is no aspect between various computers. And it is faster than the symmetric multiprocessing.

90.How can we kill a DataStage job?

Ans:

To kill a DataStage job, we need to first kill the individual processing ID so that this ensures that the DataStage is killed.

91.Explain the feature of data type conversion in DataStage.

Ans:

If we want to do data conversion in DataStage, then we can use the data conversion function. For this to be successfully executed, we need to ensure that the input or the output to and from the operator is the same, and the record schema needs to be compatible with the operator.

92.What is the significance of the exception activity in DataStage?

Ans:

Whenever there is an unfamiliar error happening while executing the job sequencer, all the stages after the exception activity are run. So, this makes the exception activity so important in the DataStage.

93.What are data and descriptor files?

Ans:

Both these files are serving different purposes in DataStage. A descriptor file contains all the information or description, while a data file is the one that just contains data.

94.What is the process for removing duplicates in DataStage?

Ans:

Duplicates in DataStage can be removed using the sort function. While running the sort function, we need to specify the option which allows for duplicates by setting it to false.

95.What is the difference between join, merge, and lookup stages?

Ans:

The fundamental difference between these three stages is the amount of memory they take. Other than that how they treat the input requirement and the various records are also factors that differentiate one another. Based on the memory usage, the lookup stage uses a very less amount of memory. Both lookup and merge stages use a huge amount of memory

Conclusion

You should carry a handy knowledge on Datastage architecture, its main features and you should be able to explain how it is different from some other popular ETL tools. Additionally, you should have a fair idea on different stages & their usage, end- to- end a way of creating a Datastage job & running it.