What is Azure Databricks | A Complete Guide with Best Practices

Last updated on 04th Nov 2022, Articles, Blog

In this article you will get:

- 1. Databricks in Azure

- 2. Pros and Cons of Azure Databricks

- 3. Databricks Data Science & Engineering

- 4. Databricks Runtime

- 5. Databricks Machine Learning

- 6. Conclusion

Databricks in Azure

Azure Databricks is a data analytics platform optimized for the Microsoft Azure cloud services platform. Azure Databricks provides three environments:

- Databricks SQL

- Databricks Data Science & Engineering

- Databricks Machine Learning

Databricks SQL:

Databricks SQL offers a user-friendly platform for analysts who work with SQL. They can run queries on Azure Data Lake, create multiple visualizations, and build and share dashboards.

Databricks Data Science and Engineering:

Databricks Data Science & Engineering offers an interactive working environment for data engineers, data scientists, and machine learning engineers. The two ways to send data through a big data pipeline are:

- Ingest it into Azure in batches through Azure Data Factory.

- Stream it in real time by using Apache Kafka, Event Hubs, or IoT Hub.
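The difference between the two ingestion styles can be sketched in plain Python. This is only a toy model: it does not call Azure Data Factory, Kafka, Event Hubs, or IoT Hub, and the `readings` list and both function names are hypothetical. It shows that a batch job sees the whole data set at once, while a streaming job updates its result as each event arrives.

```python
from typing import Iterable, Iterator, List

# Hypothetical sensor readings. In a real pipeline these would arrive
# as files (batch) or as messages from a broker (streaming).
readings = [21.5, 22.0, 23.5, 22.5]


def batch_average(values: List[float]) -> float:
    """Batch: the whole data set is available before processing starts."""
    return sum(values) / len(values)


def streaming_average(values: Iterable[float]) -> Iterator[float]:
    """Streaming: emit an updated average after every new event."""
    count, total = 0, 0.0
    for value in values:
        count += 1
        total += value
        yield total / count


print(batch_average(readings))
print(list(streaming_average(readings)))
```

Once every event has arrived, the last streaming result matches the batch result. The streaming version simply makes intermediate answers available earlier.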

Databricks Machine Learning:

Databricks Machine Learning is a complete machine learning environment. It provides managed services for experiment tracking, model training, feature development and management, and model serving.

Pros and Cons of Azure Databricks

Pros:

- It can process large amounts of data, and because it is part of Azure, the data is cloud-native.

- The clusters are simple to set up and configure.

- It has an Azure Synapse Analytics connector as well as the ability to connect to Azure DB.

- It is integrated with Active Directory.

- It supports multiple languages. Scala is the main language, but it also works well with Python, SQL, and R.

Cons:

- Integration with Git and other versioning tools has historically been limited, although Databricks has since added Git support through its Repos feature.

- It currently supports only HDInsight, not Azure Batch or AZTK.

Databricks Data Science & Engineering

Databricks Data Science & Engineering is also called the Workspace. It is an analytics platform based on Apache Spark.

Databricks Data Science & Engineering comprises the complete open-source Apache Spark cluster technologies and capabilities. Spark in Databricks Data Science & Engineering includes the following components:

Spark SQL and DataFrames: This is the Spark module for working with structured data. A DataFrame is a distributed collection of data organized into named columns.

Streaming: This provides real-time data processing and analysis for analytical and interactive applications. It integrates with HDFS, Flume, and Kafka.

MLlib: Short for Machine Learning Library, it consists of common learning algorithms and utilities, including classification, regression, clustering, collaborative filtering, and dimensionality reduction, as well as the underlying optimization primitives.

GraphX: Graphs and graph computation for a broad scope of use cases, from cognitive analytics to data exploration.

Spark Core API: This includes support for R, SQL, Python, Scala, and Java.
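To make the DataFrame idea from the component list more concrete, here is a conceptual sketch in plain Python. It is not Spark code and does not use the Spark API. The `partitions` data, the column names, and the helper functions are all hypothetical. It models a table with named columns split across partitions, where each partition is aggregated on its own and the partial results are then merged. The result is roughly what a `GROUP BY country` with `SUM(amount)` would return in Spark SQL.

```python
from collections import Counter

# Hypothetical data: each inner list stands in for one partition of a
# table with the named columns "country" and "amount".
partitions = [
    [{"country": "IN", "amount": 120.0}, {"country": "US", "amount": 80.0}],
    [{"country": "IN", "amount": 30.0}],
    [{"country": "DE", "amount": 55.0}, {"country": "US", "amount": 20.0}],
]


def partial_sum(rows):
    """Aggregate one partition on its own, as a single worker would."""
    totals = Counter()
    for row in rows:
        totals[row["country"]] += row["amount"]
    return totals


def merge(partials):
    """Combine the per-partition results into the final answer."""
    merged = Counter()
    for part in partials:
        merged.update(part)  # adds the amounts key by key
    return dict(merged)


totals_by_country = merge(partial_sum(p) for p in partitions)
print(totals_by_country)
```

Because every partition can be summed independently, the work can be spread over many machines, which is the property that lets a distributed engine scale this kind of query.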

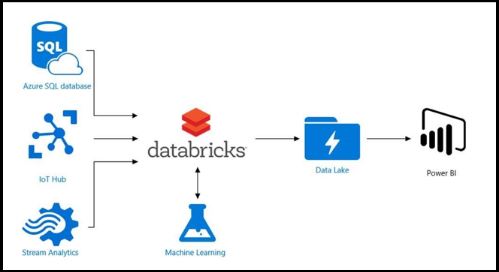

Integration with Azure Active Directory lets you run complete Azure-based solutions by using Databricks SQL. Integration with Azure databases and stores lets Databricks SQL work with data in Synapse Analytics, Cosmos DB, Data Lake Store, and Blob Storage. Integration with Power BI lets users discover and share insights more easily. Other BI tools, such as Tableau Software, can also be used.

Databricks Runtime

Databricks Runtime is the set of core components that run on the clusters managed by Azure Databricks. Azure Databricks offers several runtimes:

- Databricks Runtime includes Apache Spark but also adds numerous other features to improve big data analytics.

- Databricks Runtime for Machine Learning is built on Databricks Runtime and provides a ready-made environment for machine learning and data science.

- Databricks Runtime for Genomics is a version of Databricks Runtime optimized for working with genomic and biomedical data.

- Databricks Light is the Azure Databricks packaging of the open-source Apache Spark runtime.
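In practice, the runtime is chosen when a cluster is defined. The sketch below builds such a definition as a plain dictionary. The field names (`cluster_name`, `spark_version`, `node_type_id`, `num_workers`) follow the Databricks Clusters REST API as it is commonly documented. The helper `build_cluster_spec`, the cluster names, and the specific version and VM size strings are illustrative assumptions, so check your own workspace for the values it actually offers.

```python
import json


def build_cluster_spec(name, spark_version, node_type_id, num_workers):
    """Return a cluster definition as a plain dictionary."""
    if num_workers < 0:
        raise ValueError("num_workers must not be negative")
    return {
        "cluster_name": name,
        "spark_version": spark_version,  # selects the Databricks Runtime
        "node_type_id": node_type_id,    # Azure VM size for each node
        "num_workers": num_workers,
    }


# Illustrative values only (assumed, not taken from the article).
standard = build_cluster_spec(
    "etl-demo", "13.3.x-scala2.12", "Standard_DS3_v2", 2
)
ml = build_cluster_spec(
    "ml-demo", "13.3.x-cpu-ml-scala2.12", "Standard_DS3_v2", 2
)

print(json.dumps(ml, indent=2))
```

The two definitions differ only in `spark_version`, which reflects the point above: the machine learning runtime is a variant built on the standard runtime, not a separate product.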

Databricks Machine Learning

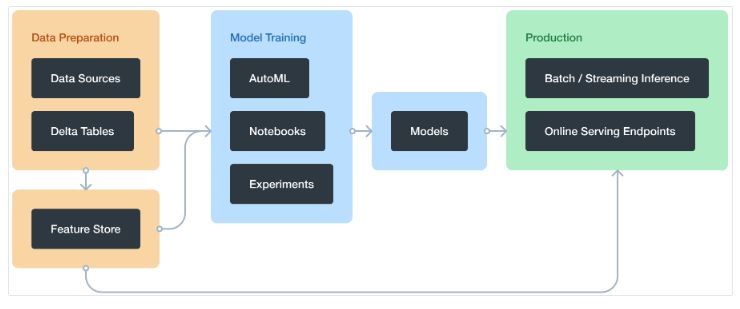

Databricks Machine Learning is an integrated end-to-end machine learning platform incorporating managed services for experiment tracking, model training, feature development and management, and feature and model serving. It automates the creation of a cluster that is optimized for machine learning. Databricks Runtime ML clusters include the most popular machine learning libraries, such as TensorFlow, PyTorch, Keras, and XGBoost. They also include libraries, such as Horovod, that are needed for distributed training.

With Databricks Machine Learning, we can:

- Train models either manually or with AutoML.

- Track training parameters and models by using experiments with MLflow tracking.

- Create feature tables and access them for model training and inference.

- Share, manage, and serve models by using Model Registry.

- Access all of the capabilities of the Azure Databricks workspace, such as notebooks, clusters, jobs, data, Delta tables, security and admin controls, and many more.

Conclusion

Azure Databricks is a simple, fast, and collaborative Apache Spark-based analytics platform. It accelerates innovation by bringing together data science, data engineering, and business. This takes collaboration a step further and makes the process of data analytics more productive, secure, scalable, and optimized for Azure.
