Artificial Neural Network Tutorial

Last updated on 29th Sep 2020, Blog, Tutorials

Artificial intelligence and machine learning haven’t just grabbed headlines and made for blockbuster movies; they’re poised to make a real difference in our everyday lives, such as with self-driving cars and life-saving medical devices. In fact, according to Global Big Data Conference, AI is “completely reshaping life sciences, medicine, and healthcare” and is also transforming voice-activated assistants, image recognition, and many other popular technologies.

Artificial Intelligence is a term used for machines that can interpret data, learn from it, and use it to perform tasks that would otherwise be performed by humans. Machine Learning is a branch of Artificial Intelligence that focuses on training machines to learn on their own without much supervision.

What is a neural network? If you are not familiar with these terms, this neural network tutorial will help you gain a better understanding of these concepts. Here is an overview of the topics covered in this tutorial:

- What can a neural network do?

- How does a neural network work?

- Types of neural networks

- A use case on classifying dog and cat images using Keras


What is a Neural Network?

You’ve probably already been using neural networks on a daily basis. When you ask a voice assistant such as Google, Siri, or Amazon Alexa to perform a search for you, or when you ride in a self-driving car, you are relying on neural networks. Computer games also use neural networks on the back end, as part of how the game system adjusts to the players, and so do map applications, which process map images and help you find the quickest way to your destination.

A neural network is a system of software or hardware that is designed to operate like a human brain.

Neural networks can perform the following tasks:

- Translate text

- Identify faces

- Recognize speech

- Read handwritten text

- Control robots

- And a lot more

Let us continue this neural network tutorial by understanding how a neural network works.

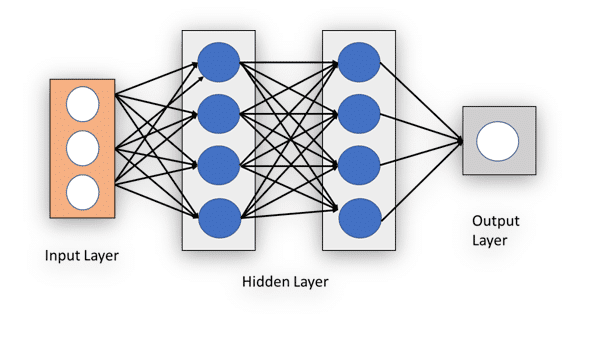

Working of Neural Network

A neural network is usually described as having different layers. The first layer is the input layer, which picks up the input signals and passes them to the next layer. The next layer, called the hidden layer, does all kinds of calculations and feature extraction. Often, there will be more than one hidden layer. Finally, there’s an output layer, which delivers the final result.

Let’s take the real-life example of how traffic cameras identify license plates and speeding vehicles on the road. The picture itself is 28 by 28 pixels, and the image is fed as an input to identify the license plate. Each neuron has a number, called activation, which represents the grayscale value of the corresponding pixel, ranging from 0 to 1—it’s 1 for a white pixel and 0 for a black pixel. Each neuron is lit up when its activation is close to 1.
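As a rough illustration of how an image becomes input activations, the sketch below flattens a 28 by 28 grayscale image into 784 values between 0 and 1. The helper name `to_activations`, the synthetic image, and the assumption that pixels arrive as 8-bit values (0 to 255) are ours, not part of the original example.

```python
# Hypothetical helper: turn an 8-bit grayscale image into input-layer activations.
SIZE = 28  # the image is 28 by 28 pixels, as in the example above

def to_activations(image):
    """Flatten a 2D list of 0-255 pixel values into a flat list of values in [0, 1]."""
    return [pixel / 255.0 for row in image for pixel in row]

# Synthetic image (an assumption): black background with one white row.
image = [[0] * SIZE for _ in range(SIZE)]
image[10] = [255] * SIZE

activations = to_activations(image)
print(len(activations))        # 784 input neurons, one per pixel
print(activations[10 * SIZE])  # 1.0 for a white pixel
print(activations[0])          # 0.0 for a black pixel
```

Each of the 784 values feeds one neuron of the input layer, which is why a larger image has to be shrunk before it can be used.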

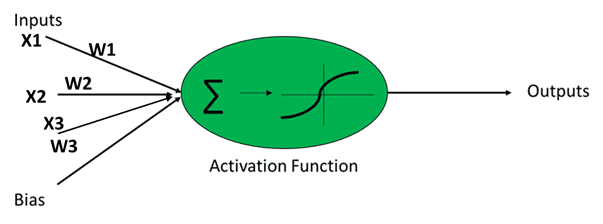

Pixels in the form of arrays are fed into the input layer. If your image is bigger than 28 by 28 pixels, you must shrink it down, because you can’t change the size of the input layer. In our example, we’ll name the inputs as X1, X2, and X3. Each of those represents one of the pixels coming in. The input layer then passes the input to the hidden layer. The interconnections are assigned weights at random. The weights are multiplied with the input signal, and a bias is added to all of them.

The weighted sum of the inputs is fed as input to the activation function, to decide which nodes to fire for feature extraction. As the signal flows within the hidden layers, the weighted sum of inputs is calculated and is fed to the activation function in each layer to decide which nodes to fire.
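The flow described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the tutorial’s implementation: the function names, the input values, the zero biases, and the choice of the sigmoid as the activation function are all assumptions. The layer sizes (3 inputs, 8 hidden nodes, 1 output) follow the example network described in this tutorial.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases):
    """For each node: weighted sum of the inputs plus bias, then the activation."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        weighted_sum = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(sigmoid(weighted_sum))
    return outputs

random.seed(0)       # fixed seed so the random starting weights are repeatable
x = [0.0, 0.5, 1.0]  # X1, X2, X3: made-up pixel activations

# Interconnection weights start out random; biases start at zero (an assumption).
hidden_w = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(8)]
hidden_b = [0.0] * 8
output_w = [[random.uniform(-1, 1) for _ in range(8)]]
output_b = [0.0]

hidden = layer_forward(x, hidden_w, hidden_b)       # input layer -> hidden layer
output = layer_forward(hidden, output_w, output_b)  # hidden layer -> output layer
print(hidden)
print(output)
```

With random weights the output is meaningless; it only becomes useful once the weights have been adjusted during training.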

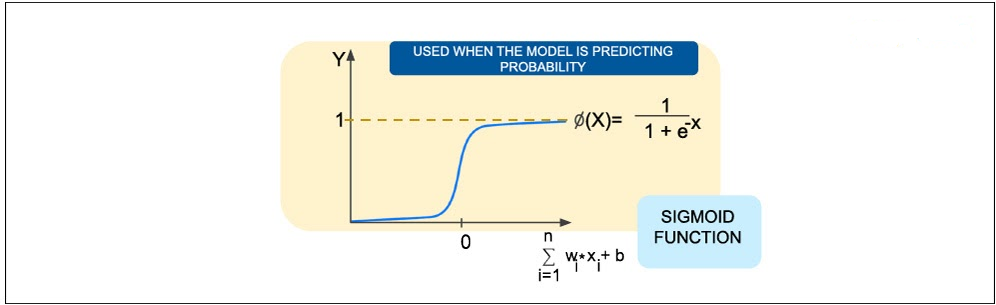

1. Sigmoid Function

The sigmoid function is used when the model is predicting probability.

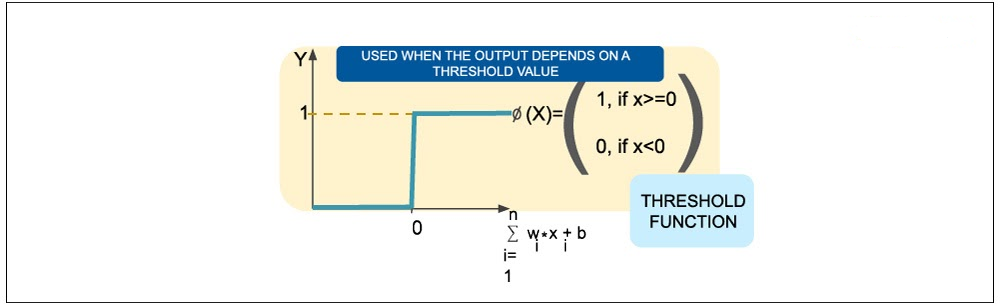

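2. Threshold Function

The threshold function outputs either 0 or 1 depending on whether its input reaches a cutoff, so it is used when you want a hard yes-or-no answer and don’t want to worry about the uncertainty in the middle.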

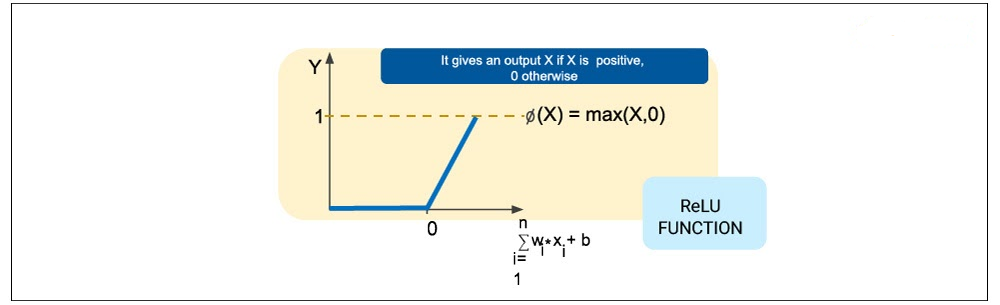

3. ReLU (rectified linear unit) Function

The ReLU (rectified linear unit) function passes positive values through unchanged and outputs 0 for anything less than 0; unlike the sigmoid, it has no upper limit. The ReLU function is the most commonly used activation function these days.

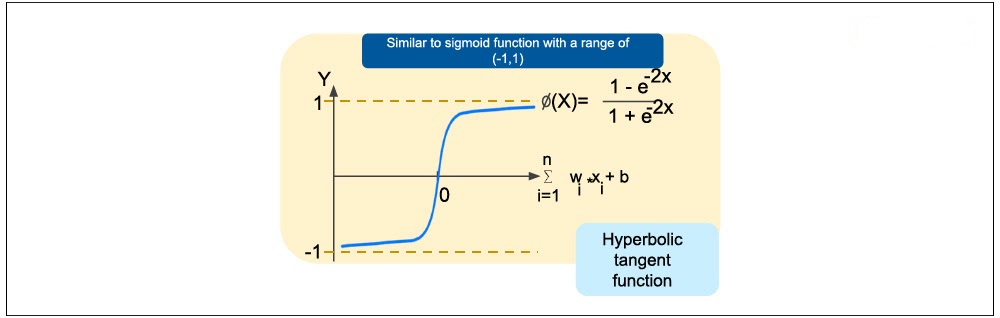

4. Hyperbolic Tangent Function

The hyperbolic tangent function is similar to the sigmoid function but has a range of -1 to 1.
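For comparison, the four activation functions can be written as short Python functions. This is a sketch using the standard definitions; the cutoff of 0 for the threshold function is an assumption, and ReLU is given in its usual form, max(0, x).

```python
import math

def sigmoid(x):
    """Squashes any input into the range (0, 1); handy for probabilities."""
    return 1.0 / (1.0 + math.exp(-x))

def threshold(x):
    """Step function: 1 when the input is at or above 0 (assumed cutoff), else 0."""
    return 1.0 if x >= 0 else 0.0

def relu(x):
    """Rectified linear unit: 0 for negative inputs, the input itself otherwise."""
    return max(0.0, x)

def tanh(x):
    """Like the sigmoid, but with outputs in the range (-1, 1)."""
    return math.tanh(x)

for x in (-2.0, 0.0, 2.0):
    print(x, sigmoid(x), threshold(x), relu(x), tanh(x))
```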

Now that you know what an activation function is, let’s get back to the neural network. Finally, the model predicts the outcome by applying a suitable activation function to the output layer. In our example with the car image, optical character recognition (OCR) is used to convert the image into text to identify what’s written on the license plate. In our neural network example, we show only three inputs coming in, eight hidden layer nodes, and one output, but a real network has a huge number of inputs and outputs.

The error in the output is back-propagated through the network, and the weights are adjusted to minimize it. The error is measured by a cost function. You keep adjusting the weights until they fit all the different training examples you put in.

The output is then compared with the expected result, and multiple iterations are run for maximum accuracy. With every iteration, the weight at every interconnection is adjusted based on the error. That math gets complicated, so we’re not going to dive into it here. We will, however, look at how it’s done while executing the code for our use case.
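Without going into the full math, the idea of repeatedly adjusting weights to shrink the error can be sketched for a single sigmoid neuron. Everything here is an assumption made for illustration: the toy training data, the mean squared error cost, the learning rate, and the number of iterations. A real network applies the same idea to every weight in every layer.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Toy training set (an assumption): the target is 1 when the input is large.
data = [(0.0, 0.0), (1.0, 0.0), (3.0, 1.0), (4.0, 1.0)]

def cost(w, b):
    """Mean squared error between the neuron's outputs and the targets."""
    return sum((sigmoid(w * x + b) - y) ** 2 for x, y in data) / len(data)

w, b = 0.1, 0.0      # arbitrary starting weight and bias
learning_rate = 0.5  # assumed step size
initial_cost = cost(w, b)

for _ in range(2000):
    grad_w = grad_b = 0.0
    for x, y in data:
        pred = sigmoid(w * x + b)
        # Chain rule: derivative of the squared error through the sigmoid
        delta = 2.0 * (pred - y) * pred * (1.0 - pred)
        grad_w += delta * x / len(data)
        grad_b += delta / len(data)
    # Move each parameter a small step against its gradient
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

final_cost = cost(w, b)
print(initial_cost, final_cost)
```

The cost after training is lower than the starting cost, which is the whole point of the weight adjustments: each iteration nudges the weight and bias in the direction that reduces the error.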

In the following sections of the neural network tutorial, let us look at the structure of a neural network and then explore the types of neural networks.

Structure of Neural Network

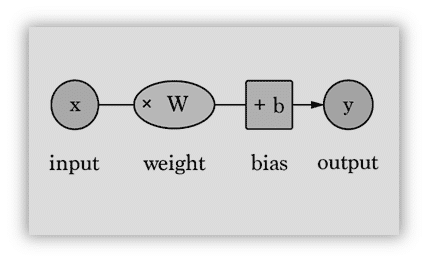

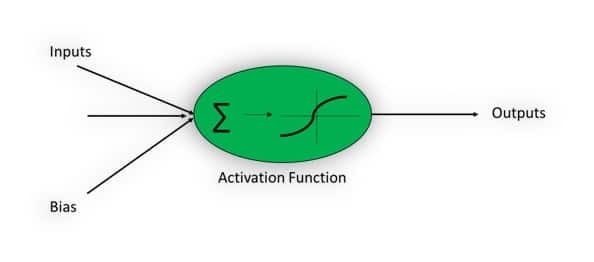

Artificial Neuron

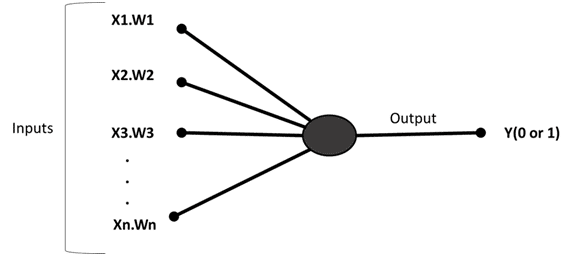

Artificial neurons are also called perceptrons. A perceptron consists of the following basic components:

- Input

- Weight

- Bias

- Activation Function

- Output

How does a perceptron work?

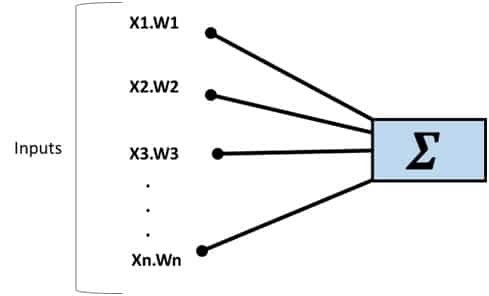

A. All the inputs X1, X2, X3, …, Xn are multiplied by their respective weights.

B. All the multiplied values are added together, along with the bias.

C. The sum of the values is passed to the activation function.

Weights and Bias

- Weights W1, W2, W3, …, Wn show the strength of each input’s connection to the neuron.

- Bias allows you to shift the activation curve left or right.

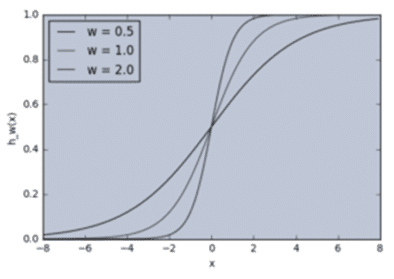

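Plotting the activation curve for different weights, without a bias:

    import numpy as np
    import matplotlib.pyplot as plt

    # Input range for the plot (assumed; the original listing does not define it)
    x = np.arange(-8, 8, 0.1)

    w1 = 0.5
    w2 = 1.0
    w3 = 2.0
    l1 = 'w = 0.5'
    l2 = 'w = 1.0'
    l3 = 'w = 2.0'

    # One sigmoid curve per weight, each with its own legend label
    for w, l in [(w1, l1), (w2, l2), (w3, l3)]:
        f = 1 / (1 + np.exp(-x * w))
        plt.plot(x, f, label=l)

    plt.xlabel('x')
    plt.ylabel('h_w(x)')
    plt.legend(loc=2)
    plt.show()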

Here, changing the weight changes how the output responds to the input: different weights change the slope of the activation function’s output. This can be useful for modeling input-output relationships.

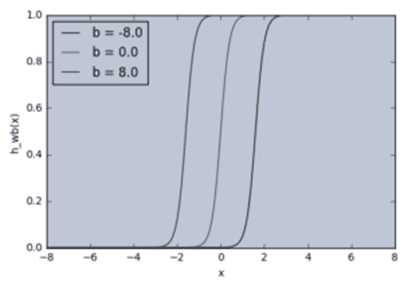

What if you only want the output to change when X > 1? This is where the bias comes in.

Let’s alter the above example by adding a bias to the input.

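    import numpy as np
    import matplotlib.pyplot as plt

    # Input range for the plot (assumed; the original listing does not define it)
    x = np.arange(-8, 8, 0.1)

    w = 5.0
    b1 = -8.0
    b2 = 0.0
    b3 = 8.0
    l1 = 'b = -8.0'
    l2 = 'b = 0.0'
    l3 = 'b = 8.0'

    # Same weight every time; only the bias changes between curves
    for b, l in [(b1, l1), (b2, l2), (b3, l3)]:
        f = 1 / (1 + np.exp(-(x * w + b)))
        plt.plot(x, f, label=l)

    plt.xlabel('x')
    plt.ylabel('h_wb(x)')
    plt.legend(loc=2)
    plt.show()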

As you can see, by varying the bias b, you can change when the node activates. Without a bias, you cannot shift that activation point; you can only change the slope.
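The shift can also be checked numerically. For a sigmoid node, the output passes through 0.5 where w * x + b equals 0, that is, at x = -b / w. The short sketch below uses the same weight and bias values as the example above; the helper name `midpoint` is ours.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

w = 5.0
for b in (-8.0, 0.0, 8.0):
    midpoint = -b / w  # input at which w * x + b == 0, so the output is 0.5
    print(b, midpoint, sigmoid(w * midpoint + b))

# With b = -8.0 the node stays close to 0 until x gets near 1.6
low = sigmoid(w * 1.0 - 8.0)   # output at x = 1.0
high = sigmoid(w * 2.0 - 8.0)  # output at x = 2.0
print(low, high)
```

So a bias of -8.0 moves the activation point to x = 1.6, which is how the node can be made to respond only to inputs greater than 1.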

Input layer, Hidden layer and Output layer

Input Layer

The input layer contains the inputs and their weights, for example X1 and W1.

Hidden Layer

In a neural network, there can be more than one hidden layer. Each hidden layer contains the summation and the activation function.

Output Layer

The output layer consists of the set of results generated by the previous layer. It also holds the desired values, that is, the known target values against which the generated results are checked. This comparison can also be used to improve the end results.

Let’s understand with an example.

Suppose you want to go to a food shop. You decide whether to go out or not based on three factors:

- The weather is good or not, i.e. X1. Say X1=1 for good weather and X1=0 for bad weather.

- You have a vehicle available or not, i.e. X2. Say X2=1 for a vehicle being available and X2=0 for not having a vehicle.

- You have money or not, i.e. X3. Say X3=1 for having money and X3=0 for not having money.

Based on the conditions, you choose a weight for each condition, such as W3=6 for money, as money is the most important thing you must have, W2=2 for the vehicle, and W1=2 for the weather, and say you have set the threshold to 5.

In this way, the perceptron builds a decision-making model by calculating the weighted sum X1W1 + X2W2 + X3W3 and comparing it to the threshold. With these values, having money alone (6) exceeds the threshold, while good weather and a vehicle without money (2 + 2 = 4) do not.
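The food shop decision can be written out as a tiny perceptron with a threshold activation. The weights (6 for money, 2 for the vehicle, 2 for the weather) and the threshold of 5 come from the example; the function name `go_out` and the choice to require the sum to be strictly above the threshold are assumptions.

```python
# Weights and threshold from the food shop example; names are illustrative.
WEIGHTS = {"weather": 2, "vehicle": 2, "money": 6}
THRESHOLD = 5

def go_out(weather, vehicle, money):
    """Return True when the weighted sum of the 0/1 inputs is above the threshold."""
    inputs = {"weather": weather, "vehicle": vehicle, "money": money}
    weighted_sum = sum(WEIGHTS[name] * value for name, value in inputs.items())
    return weighted_sum > THRESHOLD

print(go_out(weather=1, vehicle=1, money=0))  # False: 2 + 2 = 4 is not above 5
print(go_out(weather=0, vehicle=0, money=1))  # True: 6 alone is above 5
```

Because money carries the largest weight, it decides the outcome on its own, which matches the intent of treating it as the most important factor.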

Activation Function

Activation functions provide the complex, non-linear mappings between the inputs and the output variables. They introduce non-linear properties to our network.

They convert the input of an artificial neuron to its output. That output signal is then used as input to the next layer.

Put simply, the activation function maps its input into a required range such as (0, 1) or (-1, 1).

Why Activation Function?

An activation function helps the network model complex non-linear relationships. Without an activation function, the output signal would just be a linear function of the input, and your neural network would not be able to learn complex data such as audio, images, speech, etc.
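A small sketch makes the point concrete: two layers stacked without an activation function behave exactly like one linear layer. The weight and bias values below are arbitrary, and each "layer" is reduced to a single weight and bias for simplicity.

```python
# Two "layers" with no activation function: each one is just w * x + b.
def linear_layer(x, w, b):
    return w * x + b

w1, b1 = 2.0, 1.0   # arbitrary example values
w2, b2 = -3.0, 0.5

def two_layers(x):
    return linear_layer(linear_layer(x, w1, b1), w2, b2)

# The same mapping collapsed into a single linear function.
w_combined = w2 * w1
b_combined = w2 * b1 + b2

def one_layer(x):
    return linear_layer(x, w_combined, b_combined)

for x in (-1.0, 0.0, 2.5):
    print(x, two_layers(x), one_layer(x))
```

However many linear layers are stacked, the result can always be collapsed this way, so depth adds nothing until a non-linear activation function sits between the layers.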

Some commonly used activation functions are:

- Sigmoid or Logistic

- Tanh: Hyperbolic tangent

- ReLU: Rectified linear unit

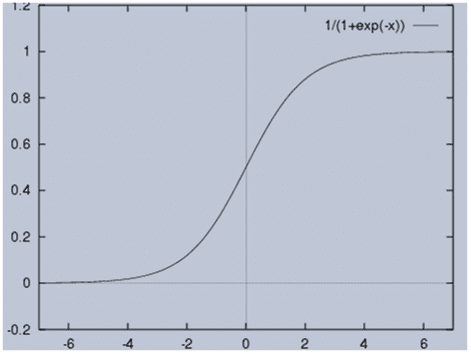

Sigmoid Activation Function:

The sigmoid activation function can be represented as:

- f(x) = 1 / (1 + exp(-x))

It has some disadvantages, such as slow convergence and the vanishing gradient problem, in which the gradient shrinks toward zero (it “kills” the gradient) when the function saturates. The output of the sigmoid is also not zero-centered, which can make the weight updates zigzag instead of heading straight toward the minimum.

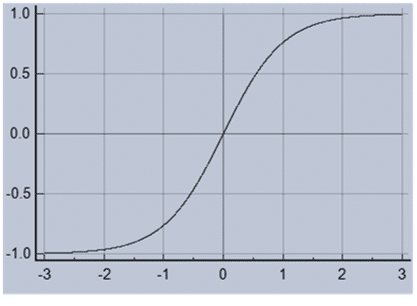

Tanh: Hyperbolic tangent

Tanh can be represented as:

- f(x) = (1 - exp(-2x)) / (1 + exp(-2x))

It addresses the zero-centering problem of the sigmoid function: the output of tanh is zero-centered because its range is between -1 and 1.

Optimization is easier than with the sigmoid function.
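The difference between the two functions can be seen numerically. The sketch below compares their average output over inputs that are symmetric around zero, and their gradients at zero and at a large input. The sample inputs are arbitrary; the gradient formulas are the standard derivatives of each function.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)

def tanh_grad(x):
    return 1.0 - math.tanh(x) ** 2

xs = [-4.0, -2.0, 0.0, 2.0, 4.0]  # inputs symmetric around zero

# Sigmoid outputs average about 0.5; tanh outputs average about 0 (zero-centered).
mean_sigmoid = sum(sigmoid(x) for x in xs) / len(xs)
mean_tanh = sum(math.tanh(x) for x in xs) / len(xs)
print(mean_sigmoid, mean_tanh)

print(sigmoid_grad(0.0), tanh_grad(0.0))    # largest gradients: 0.25 and 1.0
print(sigmoid_grad(10.0), tanh_grad(10.0))  # both close to zero when saturated
```

Tanh gives a stronger gradient near zero and a zero-centered output, but note that its gradient still shrinks toward zero for large inputs, so it eases the sigmoid’s problems rather than removing them entirely.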

Types of Neural Networks

The different types of neural networks are discussed below:

- 1. Feed-forward Neural Network

This is the simplest form of neural network, in which data travels only in one direction (input to output). This is the example we just looked at. When you actually use it, it’s fast; when you’re training it, it takes a while. Almost all vision and speech recognition applications use some form of this type of neural network.

- 2. Radial Basis Function Neural Network

This model classifies a data point based on its distance from a center point. If you don’t have training data, for example, you’ll want to group things and create a center point. The network looks for data points that are similar to each other and groups them. One of the applications for this is power restoration systems.

- 3. Kohonen Self-organizing Neural Network

Vectors of random input are fed to a discrete map composed of neurons. Vectors are also called dimensions or planes. Applications include recognizing patterns in data, such as in medical analysis.

- 4. Recurrent Neural Network

In this type, the hidden layer saves its output to be used for future prediction. The output becomes part of its new input. Applications include text-to-speech conversion.

- 5. Convolutional Neural Network

In this type, the input features are taken in batches, as if they pass through a filter. This allows the network to remember an image in parts. Applications include signal and image processing, such as facial recognition.

- 6. Modular Neural Network

This is composed of a collection of different neural networks working together to get the output. This is cutting-edge and is still in the research phase.
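To make the "filter" idea behind convolutional networks more concrete, here is a minimal sketch of sliding a small filter over an image, one patch at a time. The image, the filter values, and the function name `convolve2d` are made up for illustration, and the operation is the unflipped sliding product that deep learning libraries usually call convolution (no padding, stride of 1).

```python
def convolve2d(image, kernel):
    """Slide a square kernel over the image and sum the element-wise products."""
    k = len(kernel)
    out_rows = len(image) - k + 1
    out_cols = len(image[0]) - k + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0
            for i in range(k):
                for j in range(k):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output

# Made-up 4x4 image: dark left half, bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Made-up 2x2 filter that responds to a dark-to-bright vertical edge.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, kernel)
print(feature_map)
```

Every row of the resulting feature map is [0, 2, 0]: the filter responds only at the patch where the dark and bright halves meet, which is how a convolutional layer picks out a feature from one part of the image at a time.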

The next section of the neural network tutorial deals with a use case for neural networks.

Neural Network – Use Case

Let’s use the system to tell the difference between a cat and a dog. Our problem statement is that we want to classify photos of cats and dogs using a neural network. We have a variety of dogs and cats in our sample images, and just sorting them out is pretty amazing!

Coding Language and Environment

We will implement our use case by building a neural network in Python (version 3.6), starting by importing the required packages from Keras.

Let’s talk about the environment we’re working on. You can visit the official website of Keras and the first thing you’ll notice is that Keras operates on top of TensorFlow, CNTK or Theano. TensorFlow is probably one of the most widely used packages with Keras.

Keras is user-friendly and has modularity and extensibility. It also works with Python, which is important because a lot of people in data science now use Python. When you’re working with Keras, you can add layer after layer with the different information in each, which makes it powerful and fast.

Side note: Here, we’re using Anaconda with Python in it, and we have created our own environment called keraspython36. If you’re doing a lot of experimenting with different packages, you probably want to create your own environment in there.

In the Anaconda Navigator, our keraspython36 is listed under Environments. From the Home menu, we can launch the Jupyter Notebook, making sure we use the environment that we just set up. You can use any kind of setup or editor you’re comfortable with, but we’re using Python and Jupyter Notebook for our example.

Conclusion

So we’ve successfully built a neural network using Python that can distinguish between photos of a cat and a dog. Imagine all the other things you could distinguish and all the different industries you could dive into with that. What an exciting time to live in with these tools we get to play with.
