What is Ridge Regression? Learn with examples

Last updated on 28th Jan 2023, Articles, Blog

In this article you will learn:

- What is Ridge regression?

- What is multicollinearity?

- Here are some examples of regularisation methods

- Ridge Regression example

What is Ridge regression?

Ridge regression is a method designed specifically for analysing multiple regression data that suffer from multicollinearity. Logistic and linear regression are the most popular members of the regression family, but ridge regression is a useful alternative when the predictors are highly correlated.

Because the underlying mathematics can seem complicated, ridge regression is not widely employed, even though it is one of the most basic regularisation methods. If you have a broad grasp of multiple regression, however, the ideas behind ridge regression (including its use in R) are not too difficult to follow. The only difference between ridge regression and ordinary multiple regression is how the model coefficients are calculated. To understand why that matters, you first need to be familiar with multicollinearity.

What is multicollinearity?

Multicollinearity is a statistical term closely related to the idea of collinearity. In multiple regression, one variable is predicted as a linear combination of several others in order to achieve a desired degree of precision. When two or more of the predictor variables are strongly correlated with one another, we say that multicollinearity is present.
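To make the idea concrete, here is a minimal NumPy sketch on made-up data (the predictors `x1`, `x2` and `x3` are hypothetical). It builds one predictor as a near-exact multiple of another, then checks two common symptoms of multicollinearity: a pairwise correlation close to 1 and a very large condition number of $X^\top X$.

```python
import numpy as np

# Hypothetical data: x2 is almost an exact linear function of x1,
# so the two predictors are strongly correlated (multicollinear).
rng = np.random.default_rng(0)
n = 100
x1 = rng.normal(size=n)
x2 = 2.0 * x1 + rng.normal(scale=0.05, size=n)  # only a little noise
x3 = rng.normal(size=n)                          # unrelated predictor

X = np.column_stack([x1, x2, x3])

# Pairwise Pearson correlations between the predictors (columns of X).
corr = np.corrcoef(X, rowvar=False)
print(np.round(corr, 3))

# A huge condition number of X^T X means the least-squares problem is
# ill-conditioned, which is the practical symptom of multicollinearity.
cond = np.linalg.cond(X.T @ X)
print(f"condition number of X^T X: {cond:.1f}")
```

The correlation between `x1` and `x2` comes out close to 1, and the condition number is in the thousands or more, so ordinary least-squares coefficients for `x1` and `x2` would be very sensitive to small changes in the data.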

Predictor variables in such models are typically measurable quantities; height, weight, age and yearly salary are all examples.

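Two common regularisation methods are listed below. Both are useful for models with a large number of features, but their underlying properties and applications differ considerably: lasso can shrink some coefficients exactly to zero, producing a parsimonious model, whereas ridge shrinks coefficients towards zero without eliminating any of them.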

- Ridge regression

- Lasso regression

Here are some examples of regularisation methods

Regularisation methods balance two goals:

- Penalise large feature coefficients.

- Minimise the discrepancy between observed and predicted values.

Now let’s look at how the ridge regression model works in practice:

Ridge regression uses L2 regularisation: a penalty is applied to the square of the magnitude of the coefficients. The minimisation objective can be expressed as follows.


Given a response vector $y \in \mathbb{R}^n$ and a predictor matrix $X \in \mathbb{R}^{n \times p}$, the ridge regression coefficients are obtained by minimising the following objective:

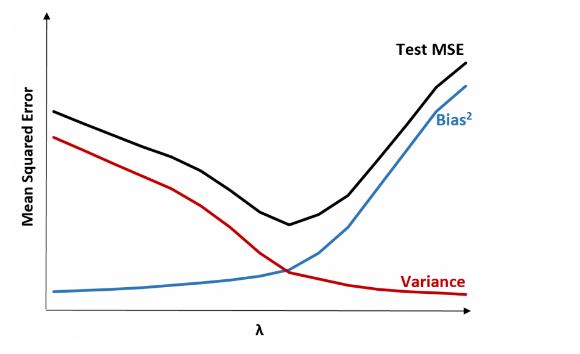
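Written out in standard notation, with the same $y$, $X$ and λ, the ridge objective and its closed-form minimiser are given below. The first sum is the least-squares part of the objective. The closed form on the last line assumes the data are centred, so that no unpenalised intercept is needed, and that $X^\top X + \lambda I_p$ is invertible (always true for λ > 0); it follows from setting the gradient $-2X^\top(y - X\beta) + 2\lambda\beta$ to zero.

```latex
\begin{aligned}
\hat{\beta}^{\text{ridge}}
  &= \arg\min_{\beta \in \mathbb{R}^{p}} \; \sum_{i=1}^{n} \Big( y_i - \sum_{j=1}^{p} x_{ij}\beta_j \Big)^2 + \lambda \sum_{j=1}^{p} \beta_j^2 \\
  &= \arg\min_{\beta \in \mathbb{R}^{p}} \; \lVert y - X\beta \rVert_2^2 + \lambda \lVert \beta \rVert_2^2 \\
  &= \left( X^{\top} X + \lambda I_p \right)^{-1} X^{\top} y
\end{aligned}
```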

- Here λ is the tuning parameter that controls the strength of the penalty term.

- If λ = 0, the objective reduces to that of ordinary least-squares linear regression, so we get the same coefficients as ordinary linear regression.

- As λ → ∞, the coefficients shrink towards zero, because with infinite weight on the squared coefficients any nonzero coefficient would make the objective infinite.

- If 0 < λ < ∞, the magnitude of λ determines the relative weight given to the two parts of the objective.

- In simple terms, the minimisation objective = LS Obj + λ × (sum of the squares of the coefficients).

- Where LS Obj is the least-squares objective, that is, the linear regression objective without regularisation.
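The closed-form ridge solution $(X^\top X + \lambda I)^{-1} X^\top y$ makes the λ = 0 and λ → ∞ cases easy to check numerically. The sketch below uses NumPy and hypothetical centred data; the helper name `ridge_coefficients` is our own, not a library function. It confirms that λ = 0 reproduces ordinary least squares and that a very large λ pushes the coefficients close to zero.

```python
import numpy as np

def ridge_coefficients(X, y, lam):
    """Closed-form ridge estimate (X^T X + lam * I)^{-1} X^T y.

    Assumes X and y are already centred, so no intercept is fitted
    (in practice the intercept is normally left unpenalised).
    """
    p = X.shape[1]
    # Solve the linear system rather than forming an explicit inverse.
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

# Hypothetical data with two correlated predictors, then centred.
rng = np.random.default_rng(1)
n = 50
x1 = rng.normal(size=n)
x2 = x1 + rng.normal(scale=0.1, size=n)
X = np.column_stack([x1, x2])
y = 3.0 * x1 - 1.0 * x2 + rng.normal(scale=0.5, size=n)
X = X - X.mean(axis=0)
y = y - y.mean()

# lambda = 0: the same coefficients as ordinary least squares.
beta_ols, *_ = np.linalg.lstsq(X, y, rcond=None)
beta_0 = ridge_coefficients(X, y, 0.0)

# A very large lambda drives every coefficient towards zero.
beta_big = ridge_coefficients(X, y, 1e8)

print("OLS:           ", beta_ols)
print("ridge, lam=0:  ", beta_0)
print("ridge, lam=1e8:", beta_big)
```

Using `np.linalg.solve` instead of `np.linalg.inv` is the usual choice because it is cheaper and numerically more stable.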

Ridge regression introduces some bias because it shrinks the coefficients towards (but not exactly to) zero. However, it can substantially reduce the variance of the estimates, which can lead to a lower mean squared error. The amount of shrinkage is controlled by the ridge penalty λ: larger values produce more shrinkage, so different values of λ yield different coefficient estimates.
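The shrinkage and the bias-variance trade-off described above can be observed in a small simulation. This is a sketch under stated assumptions: a fixed, hypothetical design with two highly correlated predictors, true coefficients of 1 and 1, standard normal noise, and an arbitrarily chosen λ = 5 for the comparison. It prints the coefficient norm along a grid of λ values, then refits OLS and ridge on many simulated responses and reports the bias, total variance and mean squared error of each estimator.

```python
import numpy as np

def ridge_coefficients(X, y, lam):
    """Closed-form ridge estimate (X^T X + lam * I)^{-1} X^T y for centred data."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

rng = np.random.default_rng(2)
n = 40
# Fixed hypothetical design with two highly correlated predictors.
x1 = rng.normal(size=n)
x2 = x1 + rng.normal(scale=0.05, size=n)
X = np.column_stack([x1, x2])
X = X - X.mean(axis=0)
true_beta = np.array([1.0, 1.0])

# 1) Shrinkage path: the size of the coefficient vector falls as lambda grows.
y = X @ true_beta + rng.normal(size=n)
y = y - y.mean()
lambdas = [0.0, 0.1, 1.0, 10.0, 100.0, 1000.0]
norms = [np.linalg.norm(ridge_coefficients(X, y, lam)) for lam in lambdas]
for lam, norm in zip(lambdas, norms):
    print(f"lambda = {lam:>7}: ||beta|| = {norm:.3f}")

# 2) Bias-variance trade-off: refit on many simulated responses.
def simulate(lam, reps=2000):
    """Return a (reps, 2) array of coefficient estimates over simulated responses."""
    estimates = []
    for _ in range(reps):
        y_sim = X @ true_beta + rng.normal(size=n)
        estimates.append(ridge_coefficients(X, y_sim - y_sim.mean(), lam))
    return np.array(estimates)

ols_est = simulate(0.0)      # lambda = 0 is ordinary least squares
ridge_est = simulate(5.0)
for name, est in [("OLS", ols_est), ("ridge", ridge_est)]:
    bias = est.mean(axis=0) - true_beta
    variance = est.var(axis=0).sum()
    mse = ((est - true_beta) ** 2).sum(axis=1).mean()
    print(f"{name:>5}: bias = {np.round(bias, 3)}, total variance = {variance:.3f}, MSE = {mse:.3f}")
```

With predictors this strongly correlated, OLS is essentially unbiased but has a very large variance, while ridge accepts a small bias in exchange for a much smaller variance and therefore a lower mean squared error.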

Ridge Regression example

Ridge regression can be used, for instance, to model prostate-specific antigen levels from clinical parameters in patients undergoing radical prostatectomy. Ridge regression tends to perform well when the response depends on many predictors whose true coefficients are of roughly similar size. When only a few coefficients are large and the rest are small or zero, lasso regression usually performs better, because it can set the unimportant coefficients exactly to zero. In either case the model is still a linear regression; ridge simply constrains the coefficients to a narrower range of values.

Several other types of regression can also improve the accuracy of analysis and prediction. Some of them are listed below:

- Linear regression

- Logistic regression

- Polynomial regression

- Stepwise regression

- Lasso regression

- Regression analysis

- Multiple regression

Linear Regression: In predictive modelling, this is the go-to method because it is both simple and effective. It produces an equation that expresses the dependent variable as a linear combination of the independent variables. For further information, check out the Wiki page on Linear Regression.

Logistic Regression: This is a type of regression model in which the dependent variable is binary (a boolean value). It describes the relationship between this binary outcome and one or more independent variables by modelling the log-odds of the outcome as a linear function of the predictors. To learn more about Logistic Regression, check out this article.
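As a rough illustration of the idea, the sketch below fits a logistic regression with plain batch gradient descent on the mean log-loss. The data, the learning rate `lr` and the iteration count are hypothetical choices, and the function names are our own; statistical libraries typically use more sophisticated optimisers.

```python
import numpy as np

def sigmoid(z):
    """Logistic function mapping log-odds to probabilities."""
    return 1.0 / (1.0 + np.exp(-z))

def fit_logistic(X, y, lr=0.1, n_iter=2000):
    """Fit logistic regression by batch gradient descent on the mean log-loss.
    X should include a column of ones if an intercept is wanted; y holds 0/1 labels."""
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = sigmoid(X @ w)                 # predicted probabilities P(y = 1 | x)
        grad = X.T @ (p - y) / len(y)      # gradient of the mean log-loss
        w -= lr * grad
    return w

# Hypothetical data whose true log-odds are -0.5 + 2x.
rng = np.random.default_rng(5)
n = 500
x = rng.normal(size=n)
prob = sigmoid(-0.5 + 2.0 * x)
y = (rng.uniform(size=n) < prob).astype(float)
X = np.column_stack([np.ones(n), x])

w = fit_logistic(X, y)
print("estimated intercept and slope:", np.round(w, 2))
accuracy = np.mean((sigmoid(X @ w) >= 0.5) == (y == 1))
print("training accuracy:", round(float(accuracy), 3))
```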

Polynomial Regression: This is another kind of regression, in which the independent variable may appear with a power greater than one. The equation of a second-order polynomial is as follows:

- $Y = \Theta_1 + \Theta_2 x + \Theta_3 x^2$

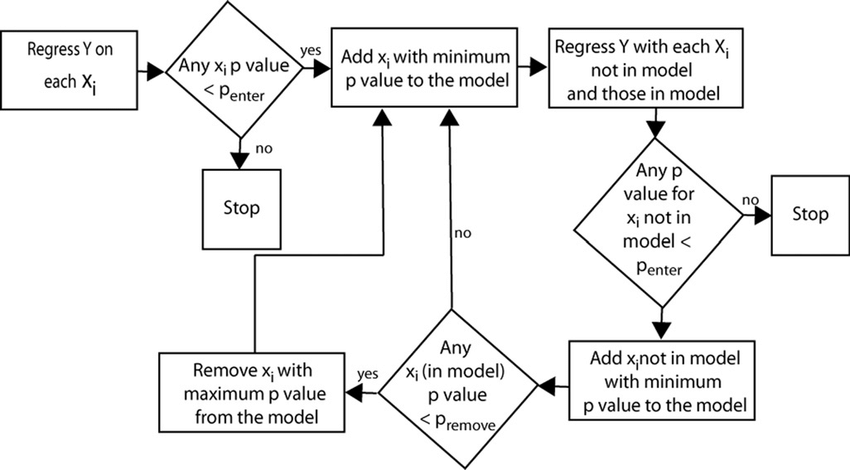
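Because the polynomial model is still linear in the parameters Θ1, Θ2 and Θ3, it can be fitted with ordinary least squares on a design matrix whose columns are 1, x and x². A minimal NumPy sketch on made-up, noise-free data generated from Y = 1 + 2x + 0.5x²:

```python
import numpy as np

# Hypothetical noise-free data: Theta1 = 1, Theta2 = 2, Theta3 = 0.5.
x = np.linspace(-3, 3, 25)
y = 1.0 + 2.0 * x + 0.5 * x**2

# Polynomial regression is linear in its parameters: build the design
# matrix [1, x, x^2] and solve an ordinary least-squares problem.
design = np.column_stack([np.ones_like(x), x, x**2])
theta, *_ = np.linalg.lstsq(design, y, rcond=None)
print(np.round(theta, 6))
```

With noise-free data the recovered coefficients match the generating values up to floating-point error; with real data they would only be estimates.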

Stepwise Regression: This is a regression method that builds a predictive model by adding and removing variables one step at a time. It calls for careful judgement and familiarity with statistical testing. Learn the fundamentals of stepwise regression to get a handle on this.
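One simple variant, forward selection, can be sketched in a few lines. This is an assumption-laden illustration rather than a standard procedure: it only adds variables (never removes them) and stops when the best candidate reduces the residual sum of squares by less than a hypothetical 5% threshold, whereas practical stepwise methods usually rely on F-tests, AIC or BIC. The function names `rss` and `forward_stepwise` are our own.

```python
import numpy as np

def rss(X, y):
    """Residual sum of squares of an ordinary least-squares fit of y on X."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    return float(resid @ resid)

def forward_stepwise(X, y, min_rel_improvement=0.05):
    """Greedy forward selection: at each step add the predictor that most
    reduces the RSS; stop when the relative reduction falls below the threshold."""
    n, p = X.shape
    intercept = np.ones((n, 1))          # the intercept is always in the model
    selected = []
    current_rss = rss(intercept, y)
    while len(selected) < p:
        candidates = [j for j in range(p) if j not in selected]
        # RSS of the current model plus each remaining candidate in turn.
        trial = {j: rss(np.column_stack([intercept, X[:, selected + [j]]]), y)
                 for j in candidates}
        best = min(trial, key=trial.get)
        if (current_rss - trial[best]) / current_rss < min_rel_improvement:
            break                        # no candidate improves the fit enough
        selected.append(best)
        current_rss = trial[best]
    return selected

# Hypothetical data: y depends only on predictors 0 and 2.
rng = np.random.default_rng(3)
X = rng.normal(size=(500, 5))
y = 3.0 * X[:, 0] - 2.0 * X[:, 2] + rng.normal(scale=0.5, size=500)
print("selected predictors:", forward_stepwise(X, y))
```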

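Lasso Regression: In many ways, LASSO is similar to ridge regression, but it uses an L1 penalty (the sum of the absolute values of the coefficients) that can shrink some coefficients exactly to zero. This lets it produce parsimonious models even when there are many features. It is useful when there is a plethora of candidate variables to include in the model, although the computational demands can be substantial. Learn more about Lasso Regression by reading the associated article.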
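The difference between the L1 penalty of lasso and the L2 penalty of ridge shows up clearly in a small experiment. The sketch below solves the lasso problem in the common scaling $\frac{1}{2n}\lVert y - X\beta\rVert_2^2 + \alpha\lVert\beta\rVert_1$ by cyclic coordinate descent with soft-thresholding, and compares it with closed-form ridge on hypothetical data where only the first two of six predictors matter. The penalty values `alpha = 0.5` and `lam = 10` and all function names are arbitrary choices for illustration.

```python
import numpy as np

def soft_threshold(z, t):
    """Soft-thresholding operator S(z, t) = sign(z) * max(|z| - t, 0)."""
    return np.sign(z) * max(abs(z) - t, 0.0)

def lasso_coordinate_descent(X, y, alpha, n_iter=200):
    """Minimise (1/(2n)) * ||y - X b||^2 + alpha * ||b||_1 by cyclic coordinate
    descent. Assumes X and y are centred, so no intercept is fitted."""
    n, p = X.shape
    beta = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n   # (1/n) * x_j^T x_j for each column
    residual = y.copy()                 # y - X @ beta with beta = 0
    for _ in range(n_iter):
        for j in range(p):
            # Correlation of feature j with the partial residual that excludes feature j.
            rho = X[:, j] @ residual / n + col_sq[j] * beta[j]
            new_bj = soft_threshold(rho, alpha) / col_sq[j]
            residual -= X[:, j] * (new_bj - beta[j])   # keep residual in sync
            beta[j] = new_bj
    return beta

def ridge_coefficients(X, y, lam):
    """Closed-form ridge estimate (X^T X + lam * I)^{-1} X^T y for centred data."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

# Hypothetical data: only the first two of six predictors affect y.
rng = np.random.default_rng(4)
n = 200
X = rng.normal(size=(n, 6))
y = 4.0 * X[:, 0] - 3.0 * X[:, 1] + rng.normal(scale=0.5, size=n)
X = X - X.mean(axis=0)
y = y - y.mean()

beta_lasso = lasso_coordinate_descent(X, y, alpha=0.5)
beta_ridge = ridge_coefficients(X, y, lam=10.0)
print("lasso:", np.round(beta_lasso, 3))
print("ridge:", np.round(beta_ridge, 3))
```

On data like this, lasso typically sets the irrelevant coefficients exactly to zero, while ridge only makes them small.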

Regression Analysis: This is a statistical method that helps researchers determine the quantitative relationship between an outcome and the variables that influence it. It is widely used for making predictions.

Multiple Regression: This is an effective method for predicting the value of a variable from the values of several other variables that we already know. These known variables are called predictors and are usually written X1, X2, X3 and so on. Improve your comprehension by learning more about multiple regression.
