- Probability Density Function in Statistics Tutorial | Definition, Formula & Examples

- Best Clojure Tutorial | Ultimate Guide to Learn [UPDATED]

- Introduction to RapidMiner Tutorial | Get Started with RapidMiner

- R Tutorial

- Spark SQL Tutorial

- Data Extraction in R Tutorial

- Introduction to RapidMiner Tutorial | Get Started with RapidMiner

- Data Scientist vs Data Analyst vs Data Engineer Tutorial

- Unsupervised Learning with Clustering- Machine Learning Tutorial

- Data Scientist Report 2020 Tutorial

- Tutorial on Statistics and Probability for Data Science | All you need to know [ OverView ]

- Machine Learning-K-Means Clustering Algorithm Tutorial

- Julia Tutorial

- Data Science Tutorial

- Probability Density Function in Statistics Tutorial | Definition, Formula & Examples

- Best Clojure Tutorial | Ultimate Guide to Learn [UPDATED]

- Introduction to RapidMiner Tutorial | Get Started with RapidMiner

- R Tutorial

- Spark SQL Tutorial

- Data Extraction in R Tutorial

- Introduction to RapidMiner Tutorial | Get Started with RapidMiner

- Data Scientist vs Data Analyst vs Data Engineer Tutorial

- Unsupervised Learning with Clustering- Machine Learning Tutorial

- Data Scientist Report 2020 Tutorial

- Tutorial on Statistics and Probability for Data Science | All you need to know [ OverView ]

- Machine Learning-K-Means Clustering Algorithm Tutorial

- Julia Tutorial

- Data Science Tutorial

Spark SQL Tutorial

Last updated on 12th Oct 2020, Blog, Data Science, Tutorials

What is Spark SQL?

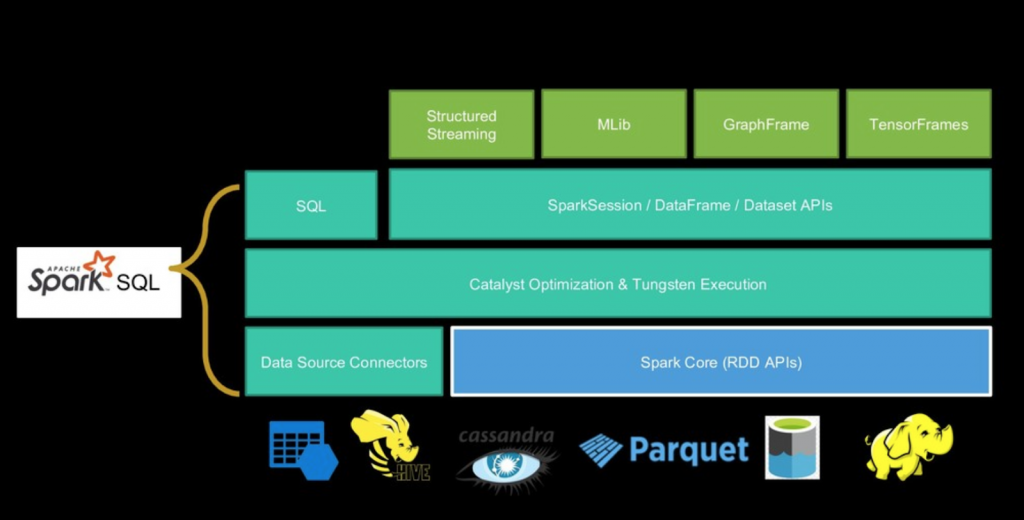

Spark SQL is a module for structured data processing, which is built on top of core Apache Spark.

Apache Spark SQL provides the following:

- DataFrame API: It is a library for working with data as tables

- Defining Data Frames: Defines Data Frames containing Rows and Columns

- Catalyst Optimizer: It is an extensible optimization framework A SQL Engine and Command Line Interface.

Importance of Spark SQL

Spark SQL is an Apache Spark module used for structured data processing, which:

- Acts as a distributed SQL query engine

- Provides DataFrames for programming abstraction

- Allows to query structured data in Spark programs

- Can be used with platforms such as Scala, Java, R, and Python.

Features of Spark SQL

The features of Apache Spark SQL are:

- Hive Compatibility: Compatible with the existing Hive queries, UDFs, and data.

- SQL Queries Support: Mixes SQL queries with Spark programs.

- Components Support: Includes a cost-based optimizer, code generations, and columnar storage.

- Spark Engine Inclusion: Allows it to scale to multi-hour queries and thousands of nodes.

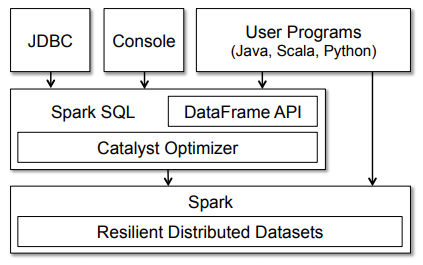

Spark SQL Architecture

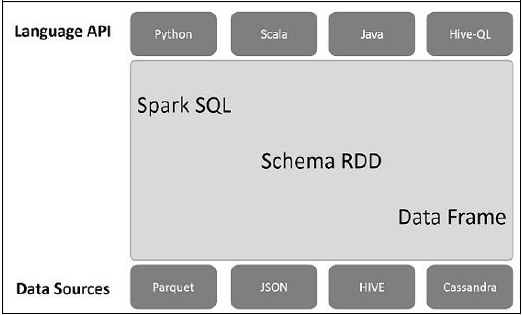

The following illustration explains the architecture of Spark SQL −

This architecture contains three layers namely, Language API, Schema RDD, and Data Sources.

- Language API: Spark is compatible with different languages and Spark SQL. It is also supported by these languages- API (python, scala, java, HiveQL).

- Schema RDD : Spark Core is designed with a special data structure called RDD. Generally, Spark SQL works on schemas, tables, and records. Therefore, we can use the Schema RDD as a temporary table. We can call this Schema RDD as a Data Frame.

- Data Sources: Usually the Data source for spark-core is a text file, Avro file, etc. However, the Data Sources for Spark SQL is different. Those are Parquet files, JSON documents, HIVE tables, and Cassandra databases.

Subscribe For Free Demo

Error: Contact form not found.

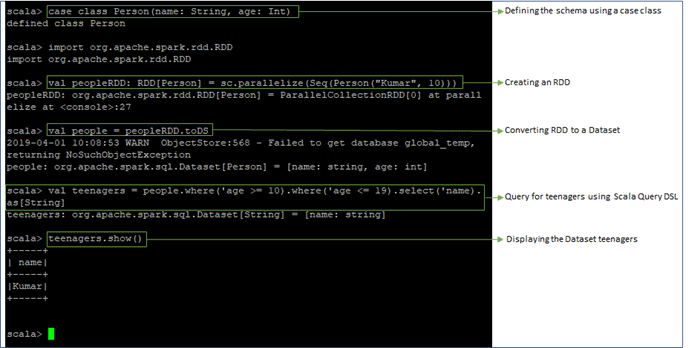

Querying Using Spark SQL

In Spark SQL, Datasets comprise distributed computation that is converted into a sequence of RDDs called DAG (Directed Acyclic Graph). Here is a simple example for querying using Spark SQL

.

If you have any query related to Spark and Hadoop, kindly refer to our Big Data Hadoop and Spark Community!

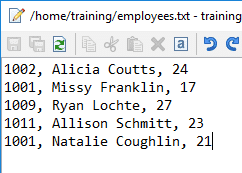

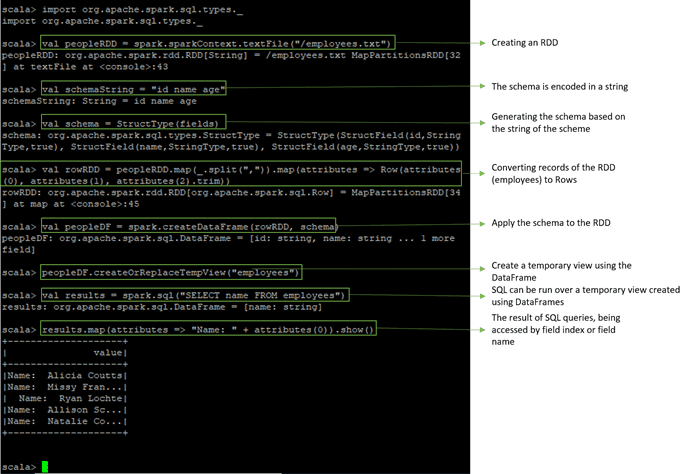

Adding a Schema to RDDs Programmatically

- We can add a schema to an existing RDD programmatically. It can be done by importing the respective API, applying transformations, etc.

- In the below example, a text file is being stored into an RDD. And then, we are adding a schema to that RDD.

The file employees.txt has the following data:

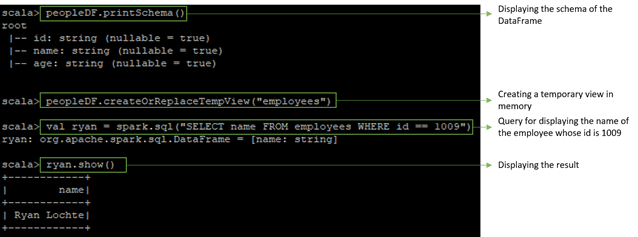

Caching Tables In-memory

- Here, we are creating a temporary view in memory for the above text file. Temporary views can be accessed faster as they are stored in memory. Since queries are performed on a cached table, we will get the desired results in no time.

- Caching is mainly done for faster execution.

Spark SQL DataFrames

- There were some limitations with RDDs. When working with structured data, there was no inbuilt optimization engine.

- On the basis of attributes, the developer optimized each RDD. Also, there was no provision to handle structured data. The DataFrame in Spark SQL overcomes these limitations of RDD.

- Spark DataFrame is Spark 1.3 release. It is a distributed collection of data ordered into named columns. Concept wise it is equal to the table in a relational database or a data frame in R/Python. We can create DataFrame using:

- Structured data files

- Tables in Hive

- External databases

- Using existing RDD

Spark SQL Datasets

- Spark Dataset is an interface added in version Spark 1.6. it is a distributed collection of data. Dataset provides the benefits of RDDs along with the benefits of Apache Spark SQL’s optimized execution engine. Here an encoder is a concept that does conversion between JVM objects and tabular representation.

- A Dataset can be made using JVM objects and after that, it can be manipulated using functional transformations (map, filter etc.). The Dataset API is accessible in Scala and Java. Dataset API is not supported by Python. But because of the dynamic nature of Python, many benefits of Dataset API are available. The same is the case with R. Using a Dataset of rows we represent DataFrame in Scala and Java. Follow this comparison guide to learn the comparison between Java vs Scala.

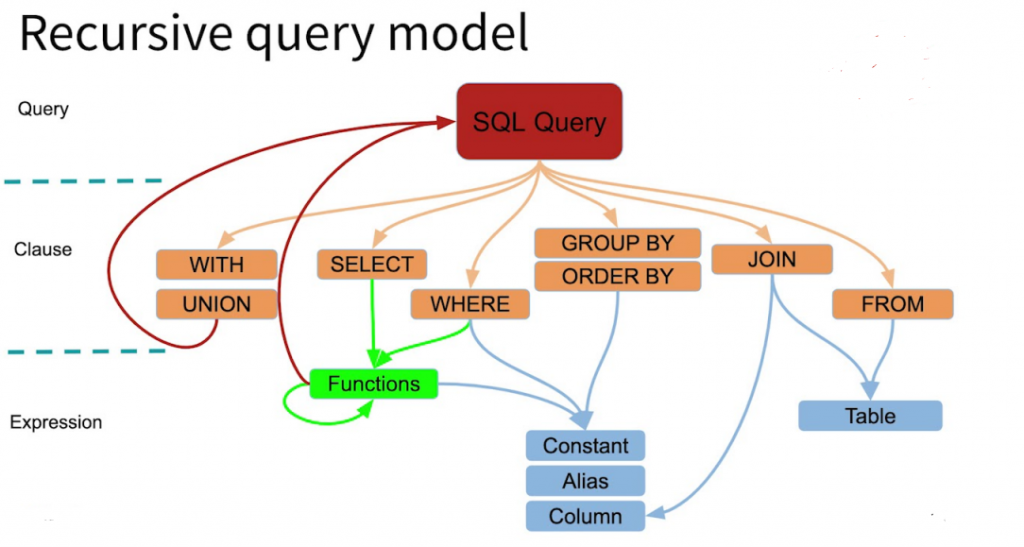

Spark Catalyst Optimizer

- The optimizer used by Spark SQL is Catalyst optimizer. It optimizes all the queries written in Spark SQL and DataFrame DSL. The optimizer helps us to run queries much faster than their counter RDD part. This increases the performance of the system.

- Spark Catalyst is a library built as a rule-based system. And each rule focuses on the specific optimization. For example, ConstantFolding focuses on eliminating constant expression from the query.

Uses of Apache Spark SQL

- It executes SQL queries.

- We can read data from existing Hive installations using SparkSQL.

- When we run SQL within another programming language we will get the result as Dataset/DataFrame.

Functions

- Built-In function : It offers a built-in function to process the column value. We can access the inbuilt function by importing the following command: Import org.apache.spark.sql.functions

- User Defined Functions(UDFs) : UDF allows you to create the user defined functions based on the user-defined functions in Scala. Refer this guide to learn the features of Scala.

- Aggregate functions : These operate on a group of rows and calculate a single return value per group.

- Windowed Aggregates(Windows) : These operate on a group of rows and calculate a single return value for each row in a group.

Advantages of Spark SQL

- Integrated : Apache Spark SQL mixes SQL queries with Spark programs. With the help of Spark SQL, we can query structured data as a distributed dataset (RDD). We can run SQL queries alongside complex analytic algorithms using the tight integration property of Spark SQL.

- Unified Data Access : Using Spark SQL, we can load and query data from different sources. The Schema-RDDs lets a single interface to productively work structured data. For example, Apache Hive tables, parquet files, and JSON files.

- High compatibility : In Apache Spark SQL, we can run unmodified Hive queries on existing warehouses. It allows full compatibility with existing Hive data, queries and UDFs, by using the Hive fronted and MetaStore.

- Standard Connectivity : It can connect through JDBC or ODBC. It includes server mode with industry standard JDBC and ODBC connectivity.

- Scalability : To support mid-query fault tolerance and large jobs, it takes advantage of the RDD model. It uses the same engine for interactive and long queries.

- Performance Optimization : The query optimization engine in Spark SQL converts each SQL query to a logical plan. Further, it converts to many physical execution plans. Among the entire plan, it selects the most optimal physical plan for execution. Read more about Apache Spark performance tuning techniques in detail.

- For batch processing of Hive tables : We can make use of Spark SQL for fast batch processing of Hive tables.

Conclusion

In conclusion to Spark SQL, it is a module of Apache Spark that analyses the structured data. It provides Scalability, it ensures high compatibility of the system. It has standard connectivity through JDBC or ODBC. Thus, it provides the most natural way to express the Structured Data.