- 10 Best Data Analytics Tools for Big Data Analysis | Everything You Need to Know

- What is Azure Databricks | A Complete Guide with Best Practices

- Elasticsearch Nested Mapping : The Ultimate Guide with Expert’s Top Picks

- Various Talend Products and their Features | Expert’s Top Picks with REAL-TIME Examples

- What is Apache Pig ? : A Definitive Guide | Everything You Need to Know [ OverView ]

- Introduction to HBase and Its Architecture | A Complete Guide For Beginners

- What is Azure Data Lake ? : Expert’s Top Picks | Everything You Need to Know

- What is Splunk Rex : Step-By-Step Process with REAL-TIME Examples

- What is Data Pipelining? : Step-By-Step Process with REAL-TIME Examples

- Dedup : Splunk Documentation | Step-By-Step Process | Expert’s Top Picks

- What Is a Hadoop Cluster? : A Complete Guide with REAL-TIME Examples

- Spark vs MapReduce | Differences and Which Should You Learn? [ OverView ]

- Top Big Data Challenges With Solutions : A Complete Guide with Best Practices

- Hive vs Impala | What to learn and Why? : All you need to know

- What is Apache Zookeeper? | Expert’s Top Picks | Free Guide Tutorial

- What is HDFS? Hadoop Distributed File System | A Complete Guide [ OverView ]

- Who Is a Data Architect? How to Become and a Data Architect? : Job Description and Required Skills

- Kafka vs RabbitMQ | Differences and Which Should You Learn?

- What is Apache Hadoop YARN? Expert’s Top Picks

- How to install Apache Spark on Windows? : Step-By-Step Process

- What is Big Data Analytics ? Step-By-Step Process

- Top Big Data Certifications for 2020

- What is Hive?

- Big Data Engineer Salary

- How Facebook is Using Big Data?

- Top Influencers in Big Data and Analytics in 2020

- How to Become a Big Data Hadoop Architect?

- What Are the Skills Needed to Learn Hadoop?

- How to Become a Big Data Analyst?

- How Big Data Can Help You Do Wonders In Your Business

- Essential Concepts of Big Data and Hadoop

- How Big Data is Transforming Retail Industry?

- How big Is Big Data?

- How to Become a Hadoop Developer?

- Hadoop Vs Apache Spark

- PySpark Programming

What is Apache Hadoop YARN? Expert’s Top Picks

Last updated on 28th Oct 2022, Articles, Big Data, Blog

- In this article you will get

- 1. Why YARN?

- 2. Introduction to Hadoop YARN

- 3. Components of YARN

- 4. Conclusion

Why YARN?

In Hadoop version 1.0, also referred to as MRv1 (MapReduce Version 1), MapReduce performed both the processing and the resource management functions. It consisted of a Job Tracker, which was the single master. The Job Tracker allocated resources, performed scheduling, and monitored the processing jobs. It assigned map and reduce tasks to a number of subordinate processes called Task Trackers, which periodically reported their progress to the Job Tracker. The practical limits of such a design are reached with a cluster of 5,000 nodes and 40,000 tasks running concurrently. Apart from this limitation, the utilization of computational resources is inefficient in MRv1. The Hadoop framework was also limited to the MapReduce processing paradigm.

To overcome all these problems, YARN was introduced in Hadoop version 2.0 in 2012 by Yahoo and Hortonworks. The basic idea behind YARN is to relieve MapReduce by taking over the responsibility for resource management and job scheduling. YARN gave Hadoop the ability to run non-MapReduce jobs within the Hadoop framework. With the introduction of YARN, the Hadoop ecosystem was completely revolutionized: it became much more flexible, efficient, and scalable. When Yahoo went live with YARN in the first quarter of 2013, it helped the company shrink its Hadoop cluster from 40,000 nodes to 32,000 nodes, while the number of jobs doubled to 26 million per month.

Introduction to Hadoop YARN

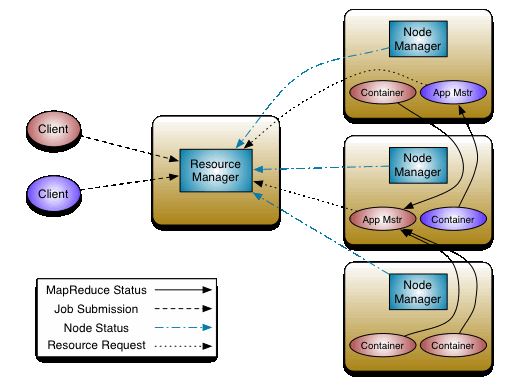

Now that you understand the need for YARN, let me introduce the core component of Hadoop v2.0. YARN allows various data processing methods, such as graph processing, interactive processing, stream processing, and batch processing, to run and process data stored in HDFS. YARN therefore opens up Hadoop to other types of distributed applications beyond MapReduce. Apart from resource management, YARN also performs job scheduling: it carries out all processing activities by allocating resources and scheduling tasks. The Apache Hadoop YARN architecture consists of the following main components:

1. Resource Manager: Runs as the master daemon and manages resource allocation in the cluster.

2. Node Manager: Runs as a slave daemon on each Data Node and is responsible for executing tasks on that node.

3. Application Master: Manages the job lifecycle and resource needs of an individual application. It works along with the Node Manager and monitors the execution of tasks.

4. Container: A package of resources, including RAM, CPU, network, and disk, on a single node.

Components of YARN

You can think of YARN as the brain of the Hadoop ecosystem. The image below represents the YARN architecture.

The first component of the YARN architecture is:

Resource Manager:

It is the ultimate authority in resource allocation. On receiving processing requests, it passes parts of the requests to the corresponding Node Managers, where the actual processing takes place. It is the arbitrator of the cluster resources and decides how the available resources are allocated among competing applications. It optimizes cluster utilization, that is, keeping all resources in use all the time, against constraints such as capacity guarantees, fairness, and SLAs.

It has two main components:

- a) Scheduler

- b) Application Manager

a) Scheduler:

The Scheduler is responsible for allocating resources to the various running applications, subject to constraints such as capacities and queues. It is called a pure scheduler because it does not perform any monitoring or tracking of application status. If there is an application failure or a hardware failure, the Scheduler does not guarantee to restart the failed tasks. It performs scheduling based on the resource requirements of the applications. It has a pluggable policy plug-in, which is responsible for partitioning the cluster resources among the various applications. Two such plug-ins, the Capacity Scheduler and the Fair Scheduler, are currently used as schedulers in the Resource Manager.
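
To make the idea of a pure scheduler concrete, here is a minimal Python sketch of capacity-style allocation. It is an illustration only, not Hadoop code: the class `ToyCapacityScheduler`, the queue names, and the memory figures are all assumptions, and the real Capacity Scheduler is considerably richer (for example, it can lend idle capacity to other queues).

```python
from dataclasses import dataclass, field


@dataclass
class Queue:
    name: str
    capacity_percent: int  # share of cluster memory reserved for this queue
    used_mb: int = 0


@dataclass
class ToyCapacityScheduler:
    """Hypothetical, simplified stand-in for a capacity-style policy."""
    cluster_mb: int
    queues: dict = field(default_factory=dict)

    def add_queue(self, name, capacity_percent):
        self.queues[name] = Queue(name, capacity_percent)

    def allocate(self, queue_name, request_mb):
        """Grant the request only if the queue stays within its capacity."""
        queue = self.queues[queue_name]
        limit_mb = self.cluster_mb * queue.capacity_percent // 100
        if queue.used_mb + request_mb > limit_mb:
            # A pure scheduler only decides; it does not track or retry work.
            return False
        queue.used_mb += request_mb
        return True


scheduler = ToyCapacityScheduler(cluster_mb=10_000)
scheduler.add_queue("prod", 70)
scheduler.add_queue("dev", 30)

results = [
    scheduler.allocate("prod", 4_000),  # fits within prod's 7,000 MB
    scheduler.allocate("prod", 4_000),  # would push prod to 8,000 MB
    scheduler.allocate("dev", 3_000),   # exactly fills dev's 3,000 MB
]
print(results)
```

Note that the sketch never looks at whether a task succeeded or failed, which mirrors the point that monitoring and restarts are left to other components.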

b) Application Manager:

It is responsible for accepting job submissions. It negotiates the first container from the Resource Manager for executing the application-specific Application Master. It also manages the Application Masters running in the cluster and provides a service for restarting the Application Master container on failure.

The second component is:

Node Manager:

It takes care of an individual node in the Hadoop cluster and manages user jobs and workflow on that node. It registers with the Resource Manager and sends heartbeats carrying the health status of the node, which keeps it up to date with the Resource Manager. Its primary goal is to manage the application containers assigned to it by the Resource Manager. An Application Master requests an assigned container from the Node Manager by sending it a Container Launch Context (CLC), which includes everything the application needs in order to run. The Node Manager creates the requested container process and starts it. It monitors the resource usage (memory, CPU) of individual containers, performs log management, and kills a container when directed to by the Resource Manager.

The third component of Apache Hadoop YARN is:
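
The Node Manager duties described above (starting containers, tracking their usage, killing them on request, and reporting through heartbeats) can be sketched in a few lines of Python. This is a toy model under stated assumptions: `ToyNodeManager`, its method names, and the shape of the heartbeat dictionary are hypothetical and do not correspond to the Hadoop API.

```python
from dataclasses import dataclass, field


@dataclass
class RunningContainer:
    container_id: str
    allocated_mb: int
    used_mb: int = 0
    alive: bool = True


@dataclass
class ToyNodeManager:
    """Hypothetical per-node agent; names are illustrative only."""
    node_id: str
    healthy: bool = True
    containers: dict = field(default_factory=dict)

    def start_container(self, container_id, allocated_mb):
        # In YARN the launch details would come from a Container Launch Context.
        self.containers[container_id] = RunningContainer(container_id, allocated_mb)

    def kill_container(self, container_id):
        # In YARN this happens when the Resource Manager directs it.
        self.containers[container_id].alive = False

    def heartbeat(self):
        """Build the status report that would be sent to the Resource Manager."""
        live = [c for c in self.containers.values() if c.alive]
        return {
            "node_id": self.node_id,
            "healthy": self.healthy,
            "running_containers": sorted(c.container_id for c in live),
            "used_mb": sum(c.used_mb for c in live),
        }


nm = ToyNodeManager("node-1")
nm.start_container("c1", allocated_mb=1024)
nm.start_container("c2", allocated_mb=2048)
nm.containers["c1"].used_mb = 512  # pretend the monitor sampled this usage
nm.kill_container("c2")
report = nm.heartbeat()
print(report)
```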

Application Master:

An application is a single job submitted to the framework. Each application has a unique Application Master associated with it, which is a framework-specific entity. It is the process that coordinates an application’s execution in the cluster and also manages faults. Its task is to negotiate appropriate resource containers from the Resource Manager, work with the Node Manager to execute and monitor the component tasks, and track their status and progress. Once started, it periodically sends heartbeats to the Resource Manager to affirm its health and to update the record of its resource demands.
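
A rough Python sketch can show how an Application Master's heartbeat doubles as an update of its resource demands. Everything here is assumed for illustration: `ToyApplicationMaster`, its callbacks, and the task names are hypothetical, and a real Application Master exchanges far more detailed requests with the Resource Manager.

```python
from dataclasses import dataclass, field


@dataclass
class ToyApplicationMaster:
    """Hypothetical per-application coordinator; not the Hadoop API."""
    app_id: str
    pending_tasks: list
    running: dict = field(default_factory=dict)   # container_id -> task name
    finished: list = field(default_factory=list)

    def heartbeat(self):
        """Report liveness together with the outstanding container demand."""
        return {"app_id": self.app_id, "containers_needed": len(self.pending_tasks)}

    def on_container_granted(self, container_id):
        # Bind the next pending task to the container that was just granted.
        task = self.pending_tasks.pop(0)
        self.running[container_id] = task
        return task

    def on_task_finished(self, container_id):
        # Track status: move the task from running to finished.
        self.finished.append(self.running.pop(container_id))


am = ToyApplicationMaster("app-1", ["map-0", "map-1", "reduce-0"])
first = am.heartbeat()            # nothing granted yet, so all three tasks are pending
am.on_container_granted("c1")
am.on_container_granted("c2")
am.on_task_finished("c1")
second = am.heartbeat()           # only reduce-0 still needs a container
print(first, second)
```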

The fourth component is:

Container:

It is a collection of physical resources such as RAM, CPU cores, and disks on a single node. YARN containers are managed through a Container Launch Context (CLC), a record that describes the container life cycle. This record contains a map of environment variables, dependencies stored in remotely accessible storage, security tokens, the payload for Node Manager services, and the command necessary to create the process. A container grants the application the right to use a specific amount of resources (memory, CPU, etc.) on a specific node.
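
The contents of a launch context can be pictured as a simple record. The Python sketch below mirrors the items listed above; the class `ToyContainerLaunchContext`, its field names, and the example paths and command are assumptions made for illustration and are not the Hadoop record itself.

```python
from dataclasses import dataclass, field


@dataclass
class ToyContainerLaunchContext:
    """Hypothetical record mirroring what a launch context carries."""
    commands: list                                        # command(s) that create the process
    environment: dict = field(default_factory=dict)       # environment variables
    local_resources: dict = field(default_factory=dict)   # name -> remote location
    tokens: bytes = b""                                   # security tokens
    service_data: dict = field(default_factory=dict)      # payload for node services

    def shell_line(self):
        """Render the environment and commands as one illustrative shell line."""
        env = " ".join(f"{k}={v}" for k, v in sorted(self.environment.items()))
        return f"{env} {' && '.join(self.commands)}".strip()


# The jar location, home directory, and class name below are made up.
clc = ToyContainerLaunchContext(
    commands=["java -Xmx512m MyTask"],
    environment={"APP_HOME": "/opt/app"},
    local_resources={"app.jar": "hdfs:///apps/app.jar"},
)
line = clc.shell_line()
print(line)
```

Bundling everything the process needs into one record is what lets a Node Manager start a container without knowing anything about the framework that requested it.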

Conclusion

YARN helps overcome the scalability issue of MapReduce in Hadoop 1.0 by dividing the work of the Job Tracker, which handled both job scheduling and the monitoring of task progress. The issue of availability is also overcome, since in Hadoop 1.0 a Job Tracker failure led to the restarting of tasks.