Keras vs Tensorflow vs Pytorch | Difference You Should Know

Last updated on 27th Oct 2022, Articles, Artificial Intelligence, Blog

- In this article you will get

- 1.Introduction

- 2.What exactly is the PyTorch software?

- 3.PyTorch versus TensorFlow

- 4.Conclusion

Introduction

Deep learning is a subfield of Artificial Intelligence (AI) that has gained prominence in the public consciousness in recent years. Whenever a new idea is put into practice in the real world, there are bound to be open questions and unclear points that need clarifying. So before we go into the specific differences between PyTorch, TensorFlow, and Keras, let’s take a few moments to talk through deep learning and the frameworks themselves.

Some non-competitive facts first:

What follows is a brief introduction to TensorFlow, PyTorch, and Keras. The differences presented in this section are not meant to show that one framework is better than another. Instead, they introduce the topics that will be the focus of our discussion throughout this article.

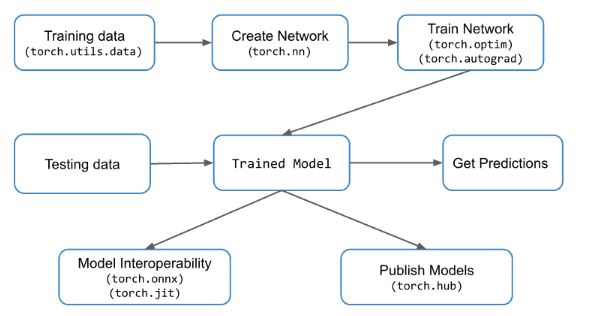

What exactly is the PyTorch software?

PyTorch is a relatively recent deep learning framework based on the Torch library. It was developed by Facebook’s AI research group and open-sourced on GitHub in 2017. It is widely used in applications such as natural language processing.

PyTorch is well known for its ease of use, simplicity, flexibility, efficient memory usage, and dynamic computational graphs. It also feels native to Python, which makes code easier to manage and can speed up processing.

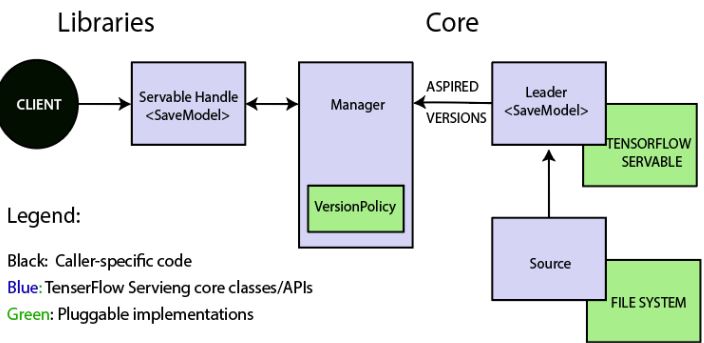

What exactly is TensorFlow?

TensorFlow is an open-source, end-to-end deep learning framework that Google developed and released to the public in 2015. It is well known for its documentation and training support, scalable production and deployment options, multiple abstraction levels, and support for many platforms, such as Android.

TensorFlow is a symbolic math library used for neural networks and is well suited to dataflow programming across a range of tasks. It offers several levels of abstraction for building and training models.

TensorFlow’s ecosystem of community resources, libraries, and tools makes it easier to build and deploy applications that use machine learning. TensorFlow is a well-established framework in the world of deep learning, and it remains widely used.

TensorFlow has also adopted Keras as its high-level API, which makes a fair comparison between the two difficult. We will still compare the frameworks for the sake of completeness, especially since Keras users are not strictly required to use TensorFlow as the backend.

PyTorch versus TensorFlow

Both TensorFlow and PyTorch provide helpful abstractions that make it simpler and quicker to create models by cutting down on boilerplate code. They differ in that PyTorch takes a more “Pythonic”, object-oriented approach, whereas TensorFlow offers users a range of options to choose from.

PyTorch is now used for a wide range of deep learning tasks. Although it is the youngest of the three main frameworks and has been the least widely adopted, its popularity among AI researchers is growing, and recent trends suggest this gap may soon close.

Researchers tend to choose PyTorch because it is flexible, has strong debugging capabilities, and is easy to learn. It runs on macOS, Linux, and Windows.

TensorFlow is extensively documented, and users can find many pretrained models and tutorials. As a result, it is the tool of choice for many industry professionals as well as researchers. TensorFlow also offers better visualization, which makes it easier for developers to debug their code and track the training process. PyTorch’s built-in visualization is more limited by comparison.

Thanks to TensorFlow Serving, TensorFlow has also traditionally had the edge over PyTorch when it comes to putting trained models into production. PyTorch did not originally ship a comparable framework, so developers typically used Django or Flask as a back-end server; the separate TorchServe project now addresses the same need.

PyTorch is said to achieve strong data-parallel performance by relying on native support for asynchronous execution from Python. With TensorFlow, by contrast, distributed training has traditionally meant manually coding and tuning each operation that runs on a particular device. In a nutshell, everything that can be done in PyTorch can also be done in TensorFlow; it just takes more effort.

Because PyTorch is so widely used in the research community, it is a good first framework if you are just beginning to explore deep learning. If, on the other hand, you already know machine learning and deep learning and want to get a job in the field as soon as possible, learn TensorFlow first.

0: Adoption

At the moment, many researchers and industry professionals regard TensorFlow as a go-to tool. The framework is thoroughly documented, and where the documentation falls short there are many well-written tutorials available on the internet. GitHub is a good place to start: you will find hundreds of models that have already been implemented and trained.

PyTorch was released more recently than its main rival and spent its early releases in beta, but it has steadily gained traction. Its documentation and official tutorials are also good. In addition, PyTorch comes with user-friendly implementations of several well-known computer vision architectures.

1: Dynamic and static graph definitions

Both frameworks operate on tensors and regard any model as a directed acyclic graph (DAG), but they differ significantly in how that graph is defined.

TensorFlow follows the adage “data is code and code is data”. In classic (1.x-style) TensorFlow, a graph must be defined statically before a model can run. All communication with the outside world goes through a tf.Session object and tf.placeholder tensors, which are replaced at runtime by data supplied from outside.
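
The define-then-run workflow described above can be sketched as follows. This is a minimal illustration, not the article's own code: it assumes a TensorFlow 2.x installation, where the session and placeholder APIs live under `tf.compat.v1`, and the tensor names `x` and `y` are made up for the example.

```python
import numpy as np
import tensorflow as tf

# Step 1: define the graph statically. Nothing is computed yet.
graph = tf.Graph()
with graph.as_default():
    # A placeholder is an empty slot that is filled with external data at run time.
    x = tf.compat.v1.placeholder(tf.float32, shape=[None], name="x")
    y = tf.exp(x, name="y")

# Step 2: run the graph inside a session, feeding data into the placeholder.
with tf.compat.v1.Session(graph=graph) as sess:
    result = sess.run(y, feed_dict={x: np.array([0.0, 1.0, 2.0], dtype=np.float32)})

print(result)
```

The only way data enters the graph is through `feed_dict`, and the only way results leave it is through `sess.run`.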

Things are much more imperative and dynamic with PyTorch: you can define, modify, and execute nodes as you go, with no special session interfaces or placeholders. Overall, the framework is more tightly integrated with the Python language and feels more native most of the time. Writing in TensorFlow can give you the impression that your model sits behind a wall, with only a few tiny openings through which you can communicate with it. Either way, this still seems to be largely a matter of personal preference.

However, the two approaches differ not only from a software engineering point of view: several dynamic neural network architectures benefit directly from the dynamic approach. Take RNNs. With static graphs, the input sequence length stays constant. This means that if you build a sentiment analysis model for English sentences, you must fix the sentence length to some maximum value and pad all shorter sequences with zeros. That is rather inconvenient, isn’t it? You will run into even more problems with recursive RNNs and tree-RNNs. At the moment, TensorFlow has only limited support for dynamic inputs, via TensorFlow Fold. PyTorch has it by default.
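
As a rough sketch of what "dynamic by default" means in practice, the snippet below runs the same small recurrent model over two sentences of different lengths without any padding. The vocabulary size, layer sizes, token ids, and the helper name `classify` are all assumptions chosen for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)

embed = nn.Embedding(100, 8)   # hypothetical vocabulary of 100 tokens
cell = nn.GRUCell(8, 16)       # one recurrent step
head = nn.Linear(16, 2)        # two sentiment classes

def classify(token_ids):
    """Run the recurrent cell once per token; the graph is built as the loop runs."""
    h = torch.zeros(1, 16)
    for tok in token_ids:      # loop length equals sentence length, no padding
        x = embed(torch.tensor([tok]))
        h = cell(x, h)
    return head(h)

short_logits = classify([5, 17, 42])
long_logits = classify([3, 8, 15, 16, 23, 42, 7])
print(short_logits.shape, long_logits.shape)
```

Because the loop is ordinary Python, each call builds a graph of exactly the length it needs.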

2: Debugging

Since the computation graph in PyTorch is only created at runtime, you are free to use any of your preferred Python debugging tools, such as pdb, ipdb, the print statements you’ve come to rely on, or even the PyCharm debugger.
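
A small sketch of this style of debugging is shown below. The model `TinyNet` and its layer sizes are hypothetical; the point is only that intermediate tensors are ordinary Python objects that can be printed, asserted on, or inspected in pdb.

```python
import torch
from torch import nn

class TinyNet(nn.Module):
    """Hypothetical two-layer model used only to illustrate debugging."""

    def __init__(self):
        super().__init__()
        self.hidden = nn.Linear(3, 5)
        self.out = nn.Linear(5, 1)

    def forward(self, x):
        h = torch.relu(self.hidden(x))
        # Plain print statements work on intermediate values.
        print("hidden shape:", tuple(h.shape), "mean:", h.mean().item())
        # breakpoint()  # uncomment to drop into pdb at this exact point
        assert not torch.isnan(h).any(), "NaN in hidden activations"
        return self.out(h)

net = TinyNet()
prediction = net(torch.randn(4, 3))
```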

With TensorFlow, things are different. You can use a special tool called tfdbg, which lets you evaluate TensorFlow expressions at runtime and browse all tensors and operations in session scope. Of course, you won’t be able to debug any Python code with it, so you will need to use pdb separately.

3: Visualization

When it comes to visualization, TensorBoard is an excellent tool. It is included with TensorFlow and is very helpful for debugging as well as for comparing the results of different training runs. Imagine, for instance, that after training a model you adjusted some hyperparameters and then retrained it. Both runs can be displayed in TensorBoard at the same time to highlight any differences between them.

TensorBoard can display a variety of summaries, which are collected via the tf.summary module. For a toy exponent example, you would define summary operations and use tf.summary.FileWriter to save them to disk.
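
A minimal sketch of writing summaries for a toy exponent is given below. Note the swap: `tf.summary.FileWriter` is the TensorFlow 1.x interface, whereas this sketch assumes TensorFlow 2.x and uses `tf.summary.create_file_writer` instead. The tag name `exp` and the temporary log directory are arbitrary choices.

```python
import math
import os
import tempfile

import tensorflow as tf

# Write to a throwaway directory; in practice this would be a fixed path like "logs/run1".
logdir = tempfile.mkdtemp()
writer = tf.summary.create_file_writer(logdir)

with writer.as_default():
    for step in range(5):
        # Record one scalar per step; TensorBoard plots it as a curve.
        tf.summary.scalar("exp", math.exp(step), step=step)

writer.flush()
event_files = os.listdir(logdir)
print(event_files)
```

Pointing TensorBoard at the log directory (`tensorboard --logdir <path>`) should then show the curve. Writing a second run to a sibling directory lets both runs be compared side by side.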

4: Deployment

When it comes to deployment, TensorFlow is the clear winner for now: it offers TensorFlow Serving, a framework that deploys your models on a specialized gRPC server. Mobile is also supported.

Back in PyTorch, we could build a REST API on top of the model using Flask or a similar alternative. The same can be done with TensorFlow models if gRPC is not a good fit for your use case. If performance is a concern, however, TensorFlow Serving could be the better choice.
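
The Flask option mentioned above might look roughly like the sketch below. The route `/predict`, the JSON field names `features` and `scores`, and the untrained stand-in model are all assumptions; a real service would load trained weights and add input validation.

```python
import torch
from flask import Flask, jsonify, request

# Stand-in for a trained model: 4 input features, 2 output scores.
model = torch.nn.Linear(4, 2)
model.eval()

app = Flask(__name__)

@app.route("/predict", methods=["POST"])
def predict():
    payload = request.get_json()
    features = torch.tensor(payload["features"], dtype=torch.float32)
    with torch.no_grad():  # inference only, no gradient tracking
        scores = model(features)
    return jsonify({"scores": scores.tolist()})

# Exercise the endpoint in-process; calling app.run() would start a real server instead.
client = app.test_client()
response = client.post("/predict", json={"features": [[0.0, 1.0, 2.0, 3.0]]})
print(response.get_json())
```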

TensorFlow also supports distributed training. PyTorch provides comparable functionality through its torch.distributed package.

5: Data Parallelism

Declarative data parallelism is one of the most notable features that sets PyTorch apart from TensorFlow: you can use torch.nn.DataParallel to wrap any module, and it will be (almost magically) parallelized over the batch dimension. This lets you use multiple GPUs with little effort.
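
A short sketch of wrapping a module in `torch.nn.DataParallel` follows. The network and batch sizes are arbitrary. On a machine with several GPUs the batch is split across them; on a CPU-only machine the wrapper simply calls the underlying module, so the snippet still runs.

```python
import torch
from torch import nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Any nn.Module can be wrapped; this small network is only an example.
net = nn.Sequential(nn.Linear(10, 32), nn.ReLU(), nn.Linear(32, 2)).to(device)
parallel_net = nn.DataParallel(net)

batch = torch.randn(64, 10, device=device)
output = parallel_net(batch)  # the batch dimension is split across available GPUs
print(output.shape)
```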

TensorFlow, on the other hand, lets you fine-tune every operation so that it runs on a specific device. However, defining parallelism this way involves far more manual work and requires careful thought.

6: A framework vs a library

Let’s build a CNN classifier for handwritten digits. This is where PyTorch really starts to look like a framework. Recall that a programming framework gives us useful abstractions in a particular domain and a convenient way to apply them to concrete problems. That is the essence that separates a framework from a library.

Here we introduce the datasets module, which contains wrappers for popular datasets used to benchmark deep learning architectures. We also use nn.Module to build a custom convolutional neural network classifier. nn.Module is the building block PyTorch gives us for constructing complex deep learning architectures. The torch.nn package contains a large number of ready-to-use modules that we can use as the foundation for our model. Notice how PyTorch uses an object-oriented approach to define basic building blocks and give us some “rails” to move on, while still letting us extend functionality through subclassing.
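
A minimal sketch of such a classifier is shown below. The class name `DigitClassifier` and the layer sizes are illustrative assumptions. To stay self-contained, the snippet feeds random tensors shaped like 28x28 grayscale digits rather than downloading a dataset; in practice a wrapper such as `torchvision.datasets.MNIST` would supply the images.

```python
import torch
import torch.nn.functional as F
from torch import nn

class DigitClassifier(nn.Module):
    """Small CNN built by subclassing nn.Module (layer sizes are illustrative)."""

    def __init__(self, num_classes=10):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 8, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(8, 16, kernel_size=3, padding=1)
        self.fc = nn.Linear(16 * 7 * 7, num_classes)

    def forward(self, x):
        x = F.max_pool2d(F.relu(self.conv1(x)), 2)  # 28x28 -> 14x14
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)  # 14x14 -> 7x7
        return self.fc(x.flatten(start_dim=1))      # one score per digit class

# Random stand-in for a batch of four 28x28 grayscale digit images.
images = torch.randn(4, 1, 28, 28)
logits = DigitClassifier()(images)
print(logits.shape)
```

Subclassing `nn.Module` is what registers the layers' parameters, so `DigitClassifier().parameters()` can be handed directly to an optimizer.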