ETL Tutorial

Last updated on 08th Oct 2020

Today, let me take a moment to introduce my fellow testers to one of the most in-demand and upcoming skills in our field: ETL (Extract, Transform, and Load) testing.

Independent verification and validation is gaining significant market potential, and many companies now see it as a prospective business gain.

Customers are offered a range of service offerings, spread across many areas based on technology, process, and solutions. ETL or data warehouse testing is one of the offerings that is growing rapidly and successfully.

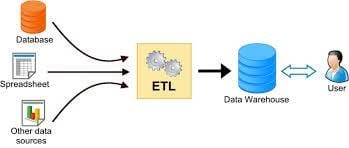

Through the ETL process, data is fetched from the source systems, transformed according to business rules, and finally loaded into the target system (the data warehouse). A data warehouse is an enterprise-wide store of integrated data that aids the business decision-making process. It is a part of business intelligence.
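
As a rough illustration of the extract, transform, and load steps described above, here is a minimal Python sketch using the standard-library `sqlite3` module with an in-memory database. The table names (`src_orders`, `dw_orders`), the columns, and the business rule (trim and upper-case the country code, convert cents to a decimal amount) are all hypothetical; a real project would take them from its own mapping document.

```python
import sqlite3

def extract(conn):
    """Extract: read raw rows from the (hypothetical) source table."""
    return conn.execute(
        "SELECT order_id, country, amount_cents FROM src_orders"
    ).fetchall()

def transform(rows):
    """Transform: apply the assumed business rules to every row."""
    return [
        (order_id, country.strip().upper(), amount_cents / 100)
        for order_id, country, amount_cents in rows
    ]

def load(conn, rows):
    """Load: write the transformed rows into the target table."""
    conn.executemany(
        "INSERT INTO dw_orders (order_id, country, amount) VALUES (?, ?, ?)",
        rows,
    )
    conn.commit()

def run_etl():
    """Build a tiny source and target in memory and run the three steps."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE src_orders (order_id INTEGER, country TEXT, amount_cents INTEGER)"
    )
    conn.execute(
        "CREATE TABLE dw_orders (order_id INTEGER, country TEXT, amount REAL)"
    )
    conn.executemany(
        "INSERT INTO src_orders VALUES (?, ?, ?)",
        [(1, " in ", 1250), (2, "us", 999)],
    )
    load(conn, transform(extract(conn)))
    return conn.execute(
        "SELECT order_id, country, amount FROM dw_orders ORDER BY order_id"
    ).fetchall()

loaded_rows = run_etl()
print(loaded_rows)
```

Keeping the three steps as separate functions is only one possible layout, but it makes each step easy to test on its own, which is the theme of the rest of this tutorial.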

Why do organizations need Data Warehouse?

Organizations with organized IT practices are looking to create the next level of technology transformation. They are trying to become more operational with data that is easy to interoperate.

Data is the most important part of any organization, whether it is everyday data or historical data. Data is the backbone of any report, and reports are the baseline on which all vital management decisions are taken.

Most companies are taking a step forward by constructing their own data warehouse to store and monitor real-time as well as historical data. Crafting an efficient data warehouse is not an easy job. Many organizations have distributed departments with different applications running on distributed technology.

An ETL tool is employed to integrate the different data sources from different departments. The ETL tool works as an integrator: it extracts data from the different sources, transforms it into the preferred format based on the business transformation rules, and loads it into a cohesive database known as the data warehouse.

A well-planned, well-defined, and effective testing scope guarantees a smooth transition of the project to production. A business gains real confidence once the ETL processes are verified and validated by an independent group of experts to make sure that the data warehouse is concrete and robust.

ETL or Data warehouse testing is categorized into four different engagements irrespective of technology or ETL tools used:

- New Data Warehouse Testing – A new DW is built and verified from scratch. Data input is taken from customer requirements and different data sources, and the new data warehouse is built and verified with the help of ETL tools.

- Migration Testing – In this type of project the customer has an existing DW and ETL performing the job, but is looking to adopt a new tool in order to improve efficiency.

- Change Request – In this type of project new data is added from different sources to an existing DW. There may also be cases where the customer needs to change an existing business rule or integrate a new one.

- Report Testing – Reports are the end result of any data warehouse and the basic purpose for which the DW is built. A report must be tested by validating its layout, its data, and its calculations.

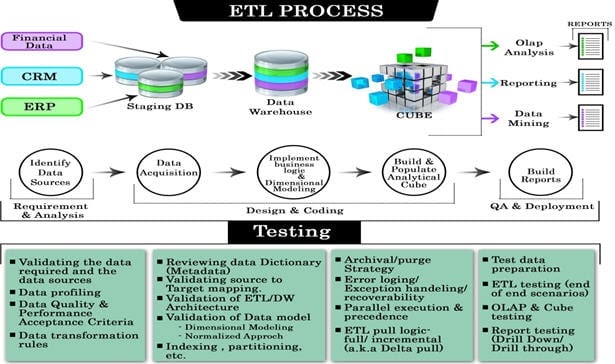

ETL process

ETL Testing Techniques

1) Data transformation Testing:

Verify that data is transformed correctly according to various business requirements and rules.

2) Source to Target count Testing:

Make sure that the count of records loaded into the target matches the expected count.

3) Source to Target Data Testing:

Make sure that all projected data is loaded into the data warehouse without any data loss or truncation.

4) Data Quality Testing:

Make sure that ETL application appropriately rejects, replaces with default values and reports invalid data.

5) Performance Testing:

Make sure that data is loaded in data warehouse within prescribed and expected time frames to confirm improved performance and scalability.

6) Production Validation Testing:

Validate the data in production system & compare it against the source data.

7) Data Integration Testing:

Make sure that the data from various sources has been loaded properly to the target system and all the threshold values are checked.

8) Application Migration Testing:

This testing ensures that the ETL application works correctly after moving to a new box or platform.

9) Data & constraint Check:

The datatype, length, index, constraints, etc. are tested in this case.

10) Duplicate Data Check:

Test if there is any duplicate data present in the target systems. Duplicate data can lead to wrong analytical reports.

Apart from the ETL testing methods above, other methods such as system integration testing, user acceptance testing, incremental testing, regression testing, retesting, and navigation testing are also carried out to make sure everything is smooth and reliable.
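
Two of the techniques listed above, the source to target count check and the duplicate data check, can be sketched with plain SQL. The Python example below runs them against an in-memory SQLite database. The tables `src_customer` and `dw_customer` and the key column `customer_id` are hypothetical, and the faulty load is simulated on purpose.

```python
import sqlite3

def count_rows(conn, table):
    """Return the number of rows in a table (the table name is trusted here)."""
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]

def find_duplicates(conn, table, key_columns):
    """Return each key that occurs more than once, with its occurrence count."""
    keys = ", ".join(key_columns)
    query = (
        f"SELECT {keys}, COUNT(*) FROM {table} "
        f"GROUP BY {keys} HAVING COUNT(*) > 1"
    )
    return conn.execute(query).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE src_customer (customer_id INTEGER, name TEXT)")
conn.execute("CREATE TABLE dw_customer (customer_id INTEGER, name TEXT)")
conn.executemany(
    "INSERT INTO src_customer VALUES (?, ?)",
    [(1, "Asha"), (2, "Ben"), (3, "Chen")],
)
# Simulated faulty load: customer 2 is loaded twice and customer 3 is missing.
conn.executemany(
    "INSERT INTO dw_customer VALUES (?, ?)",
    [(1, "Asha"), (2, "Ben"), (2, "Ben")],
)

counts_match = count_rows(conn, "src_customer") == count_rows(conn, "dw_customer")
duplicates = find_duplicates(conn, "dw_customer", ["customer_id"])
print(counts_match, duplicates)
```

In this contrived case the record counts agree even though the load is wrong, which is why a count check is usually paired with a duplicate check and a row-level data comparison.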

ETL/Data Warehouse Testing Process

Like any other testing under independent verification and validation, ETL testing goes through the same phases:

- Requirement understanding

- Validating

- Test estimation, based on the number of tables, the complexity of rules, the data volume, and the performance of a job.

- Test planning, based on the inputs from test estimation and business requirements. Here we need to identify what is in scope and what is out of scope. We also look for dependencies, risks, and mitigation plans in this phase.

- Designing test cases and test scenarios from all the available inputs. We also need to design the mapping document and SQL scripts.

- Once all the test cases are ready and approved, the testing team proceeds to perform pre-execution checks and test data preparation.

- Lastly, execution is performed until the exit criteria are met. The execution phase includes running ETL jobs, monitoring job runs, SQL script execution, defect logging, defect retesting, and regression testing.

- Upon successful completion, a summary report is prepared and the closure process is carried out. In this phase, sign-off is given to promote the job or code to the next phase.

The first two phases, requirement understanding and validation, can be regarded as pre-steps of the ETL test process.

So, the main process runs from test estimation through test planning, test design, execution, and closure.

It is necessary to define a test strategy that is mutually accepted by stakeholders before starting actual testing. A well-defined test strategy makes sure that the correct approach is followed to meet the testing goals.

ETL/data warehouse testing might require the testing team to write SQL statements extensively, or to tailor the SQL provided by the development team. In either case, the testing team must be aware of the results they are trying to get from those SQL statements.
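
To give a feel for the kind of SQL a testing team might write, the sketch below compares source and target row by row using the SQL `EXCEPT` operator, which SQLite supports. The tables (`src_employee`, `dw_employee`) and the transformation rule (upper-case the name, annual salary equals monthly salary times 12) are assumptions made up for this example.

```python
import sqlite3

# What the target should contain according to the assumed transformation rule.
EXPECTED = "SELECT emp_id, UPPER(name), salary * 12 FROM src_employee"
# What the target actually contains.
ACTUAL = "SELECT emp_id, name, annual_salary FROM dw_employee"

def set_difference(conn, left_query, right_query):
    """Rows returned by left_query that right_query does not return."""
    return conn.execute(
        f"{left_query} EXCEPT {right_query} ORDER BY 1"
    ).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE src_employee (emp_id INTEGER, name TEXT, salary INTEGER)")
conn.execute("CREATE TABLE dw_employee (emp_id INTEGER, name TEXT, annual_salary INTEGER)")
conn.executemany(
    "INSERT INTO src_employee VALUES (?, ?, ?)",
    [(1, "ravi", 1000), (2, "mia", 2000), (3, "omar", 1500)],
)
# Simulated target: employee 2 has a wrong salary, 3 is missing, 4 is unexpected.
conn.executemany(
    "INSERT INTO dw_employee VALUES (?, ?, ?)",
    [(1, "RAVI", 12000), (2, "MIA", 20000), (4, "ZOE", 9000)],
)

missing_in_target = set_difference(conn, EXPECTED, ACTUAL)
unexpected_in_target = set_difference(conn, ACTUAL, EXPECTED)
print(missing_in_target)
print(unexpected_in_target)
```

Running the comparison in both directions matters: the first result lists rows the target should have but does not, and the second lists rows the target has but should not.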

Difference between Database and Data Warehouse Testing

There is a popular misunderstanding that database testing and data warehouse testing are similar, while in fact the two take different directions.

- Database testing is done on a smaller scale of data, normally with OLTP (online transaction processing) databases, while data warehouse testing is done with large volumes of data involving OLAP (online analytical processing) databases.

- In database testing, data is normally injected consistently from uniform sources, while in data warehouse testing most of the data comes from different kinds of data sources that are not consistent with one another.

- We generally perform only CRUD (create, read, update, and delete) operations in database testing, while in data warehouse testing we use read-only (SELECT) operations.

- Normalized databases are used in database testing, while denormalized databases are used in data warehouse testing.

A number of universal verifications have to be carried out for any kind of data warehouse testing.

Below is the list of checks that are treated as essential for validation in this testing:

- Verify that data transformation from source to destination works as expected

- Verify that expected data is added to the target system

- Verify that all DB fields and field data are loaded without any truncation

- Verify data checksum for record count match

- Verify that proper error logs with all details are generated for rejected data

- Verify NULL value fields

- Verify that duplicate data is not loaded

- Verify data integrity
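
Two of the verifications in the list above, the truncation check and the NULL value check, might look like the following Python sketch. It assumes hypothetical tables `src_product` and `dw_product` joined on `product_id`, and it detects truncation simply by comparing string lengths between source and target.

```python
import sqlite3

def truncated_rows(conn):
    """Keys whose target name is shorter than the source name."""
    query = """
        SELECT s.product_id
        FROM src_product AS s
        JOIN dw_product AS t ON t.product_id = s.product_id
        WHERE LENGTH(t.product_name) < LENGTH(s.product_name)
        ORDER BY s.product_id
    """
    return [row[0] for row in conn.execute(query)]

def null_rows(conn):
    """Keys of target rows that have a NULL in a column assumed mandatory."""
    query = """
        SELECT product_id
        FROM dw_product
        WHERE product_name IS NULL OR price IS NULL
        ORDER BY product_id
    """
    return [row[0] for row in conn.execute(query)]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE src_product (product_id INTEGER, product_name TEXT, price REAL)")
conn.execute("CREATE TABLE dw_product (product_id INTEGER, product_name TEXT, price REAL)")
conn.executemany(
    "INSERT INTO src_product VALUES (?, ?, ?)",
    [(1, "Keyboard", 25.0), (2, "Mechanical Keyboard", 80.0), (3, "Mouse", 10.0)],
)
# Simulated load: product 2 lost part of its name, product 3 lost its price.
conn.executemany(
    "INSERT INTO dw_product VALUES (?, ?, ?)",
    [(1, "Keyboard", 25.0), (2, "Mechanical", 80.0), (3, "Mouse", None)],
)

truncated = truncated_rows(conn)
with_nulls = null_rows(conn)
print(truncated, with_nulls)
```

A length comparison only catches truncation when the transformation is not supposed to shorten the value; where a business rule legitimately trims data, the expected length has to come from the mapping document instead.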

ETL Testing Challenges

This testing is quite different from conventional testing. There are many challenges in performing data warehouse testing.

Here are a few challenges I experienced on my project:

- Incompatible and duplicate data

- Loss of data during ETL process

- Unavailability of an inclusive test bed

- Testers have no privileges to execute ETL jobs on their own

- Volume and complexity of data are very high

- Faults in business processes and procedures

- Trouble acquiring and building test data

- Unstable testing environment

- Missing business flow information

ETL (Extract/Transform/Load) is a process that extracts data from source systems, transforms the information into a consistent data type, then loads the data into a single repository. ETL testing refers to the process of validating, verifying, and qualifying data while preventing duplicate records and data loss.

ETL testing ensures that the transfer of data from heterogeneous sources to the central data warehouse occurs with strict adherence to transformation rules and is in compliance with all validity checks. It differs from data reconciliation used in database testing in that ETL testing is applied to data warehouse systems and used to obtain relevant information for analytics and business intelligence.

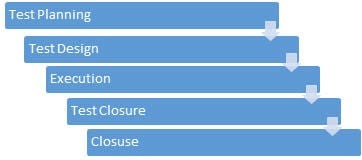

Eight stages of the ETL testing process

Effective ETL testing detects problems with the source data early on, before it is loaded into the data repository, as well as inconsistencies or ambiguities in the business rules intended to guide data transformation and integration. The process can be broken down into eight stages.

- 1. Identify business requirements: Design the data model, define the business flow, and assess reporting needs based on client expectations. It’s important to start here so that the scope of the project is clearly defined, documented, and fully understood by testers.

- 2. Validate data sources: Perform a data count check and verify that the table and column data types meet the specifications of the data model. Make sure check keys are in place and remove duplicate data. If this is not done correctly, the aggregate report could be inaccurate or misleading.

- 3. Design test cases: Design ETL mapping scenarios, create SQL scripts, and define transformation rules. It is important to validate the mapping document as well, to ensure it contains all of the required information.

- 4. Extract data from source systems: Execute ETL tests per business requirement. Identify the types of bugs or defects encountered during testing and report them. It is important to detect and reproduce any defects, report them, fix and resolve them, and close the bug reports before continuing to Step 5.

- 5. Apply transformation logic: Ensure data is transformed to match the schema of the target data warehouse. Check data thresholds and alignment, and validate the data flow. This ensures the data type matches the mapping document for each column and table.

- 6. Load data into target warehouse: Perform a record count check before and after data is moved from staging to the data warehouse. Confirm that invalid data is rejected and that the default values are accepted.

- 7. Summary report: Verify the layout, options, filters, and export functionality of the summary report. This report lets decision-makers and stakeholders know the details and results of the testing process, and whether any step was not completed (i.e. was “out of scope”) and why.

- 8. Test closure: File test closure.

A final step is for the ETL tester to test the tool, its functions, and the ETL system.
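
Stage 6 above asks for a record count check around the staging-to-warehouse load, plus confirmation that invalid data is rejected and default values are applied. The Python sketch below shows one way such a check could be expressed. The row layout, the validity rule (the order date must be an ISO date), and the default value `UNKNOWN` are hypothetical and would come from the project's business rules.

```python
from datetime import date

DEFAULT_COUNTRY = "UNKNOWN"  # hypothetical default for a missing country

def load_from_staging(staging_rows):
    """Split staging rows into loaded rows and rejected rows with a reason."""
    loaded, rejected = [], []
    for row_id, order_date, country in staging_rows:
        try:
            parsed = date.fromisoformat(order_date)
        except (TypeError, ValueError):
            # Invalid or missing dates are rejected, not silently loaded.
            rejected.append((row_id, "invalid order_date"))
            continue
        # A missing country is replaced with the assumed default value.
        loaded.append((row_id, parsed, country or DEFAULT_COUNTRY))
    return loaded, rejected

staging = [
    (1, "2020-10-01", "IN"),
    (2, "not-a-date", "US"),
    (3, "2020-10-03", None),
]
loaded, rejected = load_from_staging(staging)

# Reconciliation: every staging row is either loaded or rejected.
reconciled = len(staging) == len(loaded) + len(rejected)
print(reconciled, rejected)
```

The reconciliation line is the important part: a load that drops rows without logging them as rejected would make the two sides of that comparison disagree.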

Nine types of ETL tests

ETL testing fits into four general categories: new system testing (data obtained from varied sources), migration testing (data transferred from source systems to data warehouse), change testing (new data added to data warehouse), and report testing (validate data, make calculations).

ETL Tests that may be executed in each stage are:

| Category | ETL Tests |
| --- | --- |
| New System Testing | Data quality testing; Metadata testing |
| Migration Testing | Data quality testing; Source to target count testing; Source to target data testing; Performance testing; Data transformation testing; Data integration testing |
| Change Testing | Data quality testing; Source to target count testing; Source to target data testing; Production validation; Data integration testing |
| Report Testing | Report testing |

- 1. Production validation, also called “production reconciliation” or “table balancing,” validates data in production systems and compares it against source data. This guards data against faulty logic, failed loads, or operational processes that are not loaded to the system.

- 2. Source to target count testing verifies that the number of records loaded into the target database matches the expected record count.

- 3. Source to target data testing ensures projected data is added to the target system without loss or truncation, and that the data values meet expectations after transformation.

- 4. Metadata testing performs data type, length, index, and constraint checks of ETL application metadata (load statistics, reconciliation totals, data quality metrics).

- 5. Performance testing makes sure that data is loaded into the data warehouse within expected time frames and that the test server response to multiple users and transactions is adequate for performance and scalability.

- 6. Data transformation testing runs SQL queries for each row to verify that the data is correctly transformed according to business rules.

- 7. Data quality testing runs syntax tests (invalid characters, pattern, case order) and reference tests (number, date, precision, null check) to make sure the ETL application rejects invalid data, accepts default values, and reports invalid data.

- 8. Data integration testing confirms that the data from all sources has loaded to the target data warehouse correctly and checks threshold values.

- 9. Report testing reviews the data in the summary report, verifies that layout and functionality are as expected, and checks calculations.

Testing during the ETL process can also include user acceptance testing, GUI testing, and application migration tests to ensure the ETL architecture performs well on other platforms. Incremental ETL tests can verify that new records and updates are processed as expected.
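
As one concrete case from the list above, the data type and constraint part of metadata testing can be sketched by comparing a table's actual definition against the expected data model. The example uses SQLite's `PRAGMA table_info`, which returns one row per column (`cid`, `name`, `type`, `notnull`, `dflt_value`, `pk`). The expected model and the `dw_customer` table are hypothetical, and other databases expose the same information through their own catalog views.

```python
import sqlite3

# Hypothetical data model: column name -> (declared type, NOT NULL flag).
EXPECTED_COLUMNS = {
    "customer_id": ("INTEGER", True),
    "email": ("VARCHAR(100)", True),
    "signup_date": ("DATE", False),
}

def metadata_mismatches(conn, table, expected):
    """List columns that are missing or differ from the expected model."""
    actual = {
        name: (col_type.upper(), bool(notnull))
        for _cid, name, col_type, notnull, _default, _pk in conn.execute(
            f"PRAGMA table_info({table})"
        )
    }
    problems = []
    for column, spec in expected.items():
        if column not in actual:
            problems.append(f"{column}: missing")
        elif actual[column] != spec:
            problems.append(f"{column}: expected {spec}, found {actual[column]}")
    return problems

conn = sqlite3.connect(":memory:")
# Simulated target: email is too short and signup_date was never created.
conn.execute(
    "CREATE TABLE dw_customer ("
    "customer_id INTEGER NOT NULL, email VARCHAR(50) NOT NULL)"
)
issues = metadata_mismatches(conn, "dw_customer", EXPECTED_COLUMNS)
for issue in issues:
    print(issue)
```

This sketch only covers type, length, and nullability; index and other constraint checks would need additional catalog queries.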

ETL testing challenges

Identifying challenges early in the ETL process can prevent bottlenecks and costly delays. Creating a source-to-target mapping document and establishing clear business requirements from the start is essential. Frequent changes to requirements—requiring ETL testers to change logic in scripts—can significantly slow progress. ETL testers need to have an accurate estimation of the data transformation requirements, the time it will take to complete them, and a clear understanding of end-user requirements. A few other challenges to watch out for from the beginning include:

- Data that is lost or corrupted during migration.

- Limited availability of source data.

- Underestimating data transformation requirements.

- Duplicate or incomplete data.

- Large volume of historical data that makes ETL testing in target system difficult.

- Unstable testing environment.

- Outdated ETL tools in use.

How to find the best ETL testing tool

ETL testing tools increase IT productivity and simplify the process of retrieving information from big data to gain insights. The tool itself contains procedures and rules for extracting and processing data, eliminating the need for traditional programming methods that are labor-intensive and expensive.

Another benefit is that ETL testing tools have built-in compatibility with cloud data warehouse, ERP and CRM platforms such as Amazon Web Services, Salesforce, Oracle, Kinesis, Google Cloud Platform, NetSuite, and more.

Capabilities to look for when comparing ETL testing tools include:

- Graphical interface to simplify the design and development of ETL processes.

- Automatic code generation to speed development and reduce errors.

- Built-in data connectors that can access data stored in file format, a database, packaged application, or legacy system.

- Content management facilities that enable context switching for ETL development, testing and production environments.

- Sophisticated debugging tools that let you track data flows in real time and report on row-by-row behavior.

Cloud-native ETL tools designed specifically for cloud computing architecture enable a business to reap the full benefits of a data warehouse endeavor.

The future of ETL testing: AI and the cloud

Enterprise organizations that rely on hand-coded scripts and in-house tools lose efficiency and the ability to scale with today’s evolving ETL cloud technologies. Fast-paced, agile DevOps teams that churn out multiple software application updates daily, using automated, continuous deployment practices, are becoming the norm.

As DevOps expands to cloud-based data environments and processes, there is a need for automated data integration with ETL testing tools that can process larger amounts of data autonomously — without need for human intervention — in real-time. The waterfall approach (identify a problem in data stream, fix, test schema, load to data warehouse, and analyze) is being replaced with cloud-native, agile solutions.

Data management cloud architectures and AI ‘smart’ data integration assistants are emerging trends.

To see a demonstration of machine learning with Spark, and learn how to teach the system human decision-making and generate a classification model based on that learning, check out How to Match with Spark and Machine Learning.

Open source ETL testing

ETL testing is a multi-level, data-centric process. It uses complex SQL queries to access, extract, transform and load millions of records contained in various source systems into a target data warehouse. ETL testing tools handle much of this workload for DevOps, eliminating the need for costly and time-intensive development of proprietary tools.

Extensive ETL testing gives an enterprise confidence in the integrity of its big data and the business intelligence gained from that data, and lowers business risk. Talend Open Studio for Data Integration is an industry-leading open source ETL development and testing tool. With millions of downloads since 2006, it is free to use under an Apache license.

Subscription-based Talend Data Integration includes the same ETL testing functionality as well as enterprise-class continuous delivery mechanisms to facilitate teamwork and run ETL testing jobs on remote systems, plus an audit tool for qualitative and quantitative ETL metrics.

Conclusion

Data is important for businesses to make critical business decisions. ETL testing plays a significant role in validating and ensuring that business information is exact, consistent, and reliable. It also minimizes the risk of data loss in production.

I hope these tips help ensure that your ETL process is accurate and that the data warehouse built by it is a competitive advantage for your business.