Top Storm Interview Questions and Answers [ TO GET HIRED ]

Last updated on 03rd Aug 2022, Big Data, Blog, Interview Question

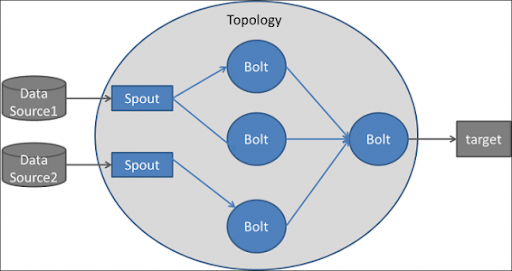

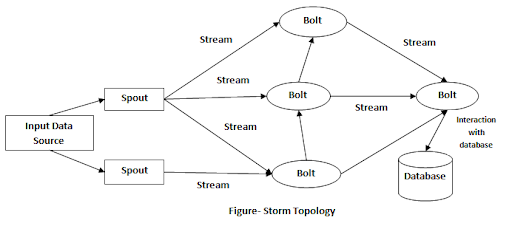

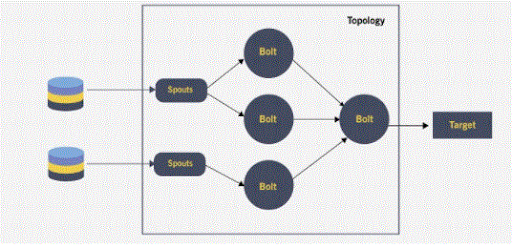

1. What elements are used for the stream flow of data?

Ans:

Three elements are used for the stream flow of data:

- Bolt

- Spout

- Tuple

2. How are bolts used in the stream flow of data?

Ans:

Bolts represent the processing logic unit in Storm. You can use bolts to do any kind of processing, such as filtering, aggregating, joining, interacting with data stores, and talking to external systems. Bolts can also emit tuples (data messages) for the next bolts to process. In addition, bolts are responsible for acknowledging the processing of tuples once they are done.
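As a rough illustration of that role, the sketch below models a bolt in plain Java rather than with the Storm API: it splits an input sentence into lowercase words, records each word as an emitted value, and then acknowledges the input by its message id. The class `SentenceSplitter` and its fields are hypothetical; a real Storm bolt would typically extend `BaseRichBolt` and emit and ack through an `OutputCollector`.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java model of a bolt (NOT the Storm API): same shape of logic only.
class SentenceSplitter {
    // Values emitted downstream, one word per emitted value
    final List<String> emitted = new ArrayList<>();
    // Message ids of input tuples this bolt has acknowledged
    final List<Long> acked = new ArrayList<>();

    // Process one input "tuple": a message id plus a sentence
    void execute(long messageId, String sentence) {
        for (String word : sentence.trim().split("\\s+")) {
            if (!word.isEmpty()) {
                // Transformation step: normalize and emit each word
                emitted.add(word.toLowerCase());
            }
        }
        // Acknowledge the input only after all processing is done
        acked.add(messageId);
    }
}
```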

3. How are spouts used in the stream flow of data?

Ans:

Spouts represent the source of data in Storm. You write spouts to read data from data sources such as databases, distributed file systems, and messaging frameworks. Spouts can broadly be classified as follows:

Reliable: These spouts can replay tuples (a unit of data in a data stream). This helps applications achieve "at-least-once message processing" semantics, because in case of failures, tuples can be replayed and processed again. Spouts that fetch data from messaging frameworks are generally reliable, because these frameworks provide a mechanism to replay messages.

Unreliable: These spouts cannot replay tuples. Once a tuple is emitted, it cannot be replayed, regardless of whether it was processed successfully. This kind of spout therefore provides only at-most-once processing semantics.
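The difference between the two kinds of spout comes down to bookkeeping. The following plain-Java sketch is not Storm's `ISpout` interface; `ReliableSpoutModel` and its methods are hypothetical. It keeps every emitted message in a pending map keyed by message id, forgets it on `ack`, and puts it back at the head of the queue on `fail` so that it is replayed.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

// Plain-Java model of a *reliable* spout (hypothetical, not the Storm API).
class ReliableSpoutModel {
    private final Deque<String> source = new ArrayDeque<>();   // stands in for a replayable queue
    private final Map<Long, String> pending = new HashMap<>(); // emitted but not yet acked
    private long nextId = 0;

    ReliableSpoutModel(Iterable<String> messages) {
        for (String m : messages) source.addLast(m);
    }

    // Emit the next message and return its message id, or -1 if nothing is available
    long nextTuple() {
        String msg = source.pollFirst();
        if (msg == null) return -1;
        long id = nextId++;
        pending.put(id, msg);
        return id;
    }

    // Downstream processing succeeded: the message can be forgotten
    void ack(long id) {
        pending.remove(id);
    }

    // Processing failed or timed out: queue the message again (at-least-once)
    void fail(long id) {
        String msg = pending.remove(id);
        if (msg != null) source.addFirst(msg);
    }

    int pendingCount() { return pending.size(); }

    String peekPending(long id) { return pending.get(id); }
}
```

An unreliable spout would simply drop the pending map: once a message is polled from the source it is gone, which is what limits it to at-most-once semantics.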

4. How are tuples used in the stream flow of data?

Ans:

The tuple is the main data structure in Storm. A tuple is a named list of values, where each value can be of any type. Tuples are dynamically typed: the types of the fields do not need to be declared. Tuples have helper methods like getInteger and getString to get field values without having to cast the result. Storm needs to know how to serialize all the values in a tuple. By default, Storm knows how to serialize the primitive types, strings, and byte arrays. If you want to use another type, you need to implement and register a serializer for that type.
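To illustrate "a named list of values" with typed helpers, here is a small plain-Java model. It is not `org.apache.storm.tuple.Tuple`, although the accessor names (`getValueByField`, `getStringByField`, `getIntegerByField`, `getString`, `getInteger`) mirror ones on Storm's tuple interface; the class `NamedTuple` itself is hypothetical, and serialization is not modeled.

```java
import java.util.List;

// Plain-Java model of a Storm-style tuple: field names plus values of any type.
class NamedTuple {
    private final List<String> fields;
    private final List<Object> values;

    NamedTuple(List<String> fields, List<Object> values) {
        if (fields.size() != values.size()) {
            throw new IllegalArgumentException("fields and values differ in length");
        }
        this.fields = fields;
        this.values = values;
    }

    // Look up a value by its field name
    Object getValueByField(String field) {
        int i = fields.indexOf(field);
        if (i < 0) throw new IllegalArgumentException("unknown field: " + field);
        return values.get(i);
    }

    // Typed helpers: the cast happens here, so callers do not have to cast
    Integer getIntegerByField(String field) { return (Integer) getValueByField(field); }

    String getStringByField(String field) { return (String) getValueByField(field); }

    // Positional variants
    Integer getInteger(int i) { return (Integer) values.get(i); }

    String getString(int i) { return (String) values.get(i); }
}
```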

5. Compare Spark & Storm?

Ans:

| Spark | Storm |
|---|---|
| Data at rest | Data in motion |
| Data parallel | Task parallel |
| Latency of a few seconds | Sub-second latency |

6. What are the key advantages of using Storm for real-time processing?

Ans:

Really fast: It can process a very large number of messages per second per node (the Storm project cites a benchmark of over a million tuples processed per second per node).

Fault tolerant: It detects faults automatically and restarts the failed components.

Reliable: It guarantees that each unit of data (tuple) will be processed at least once or exactly once.

Easy to operate: Operating Storm is quite straightforward.

7. Does Apache act as a proxy server?

Ans:

Yes. It can act as a proxy by using the mod_proxy module. This module implements a proxy, gateway, or cache for Apache. It implements proxying capability for AJP13 (Apache JServ Protocol version 1.3), FTP, CONNECT (for SSL), HTTP/0.9, HTTP/1.0, and (since Apache 1.3.23) HTTP/1.1. The module can be configured to connect to other proxy modules for these and other protocols.

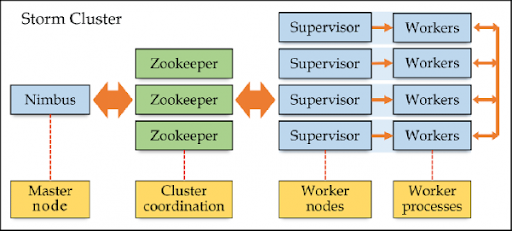

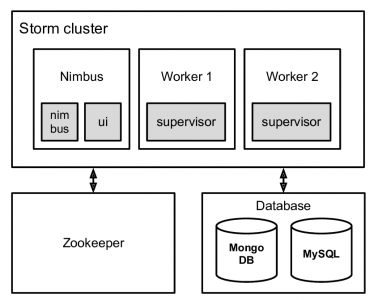

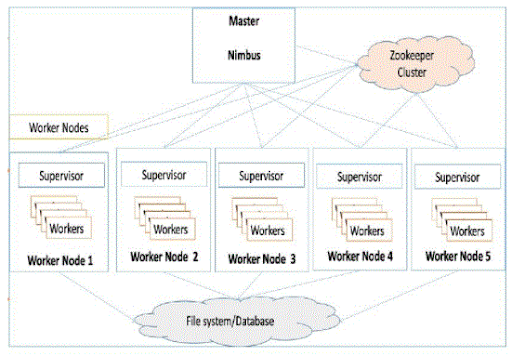

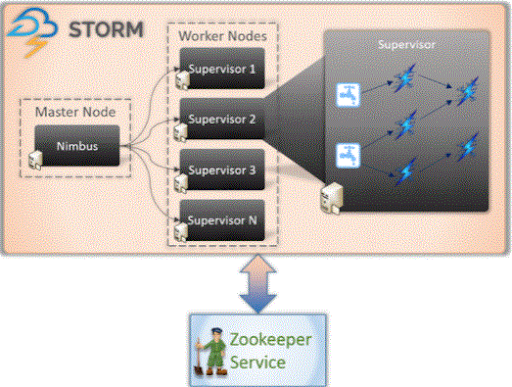

8. What is the use of Zookeeper in Storm?

Ans:

Storm uses Zookeeper for coordinating the cluster. Zookeeper is not used for message passing, so the load that Storm places on Zookeeper is quite low. Single-node Zookeeper clusters should be sufficient for most cases, but if you want failover or are deploying large Storm clusters, you may want larger Zookeeper clusters. Instructions for deploying Zookeeper are in the Zookeeper documentation. A few notes about Zookeeper deployment:

It's critical that you run Zookeeper under supervision, since Zookeeper is fail-fast and will exit the process if it encounters any error case.

It's critical that you set up a cron job to compact Zookeeper's data and transaction logs. The Zookeeper daemon does not do this on its own, and if you don't set up a cron job, Zookeeper will quickly run out of disk space.

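9. What is ZeroMQ?

Ans:

ZeroMQ is a high-performance asynchronous messaging library. Early versions of Storm relied on ZeroMQ primarily for task-to-task communication in running Storm topologies; later releases replaced it with a Netty-based transport.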

10. What’s the 3-Tier Apache Storm architecture?

Ans:

11. How many distinct layers are there in Storm's codebase?

Ans:

There are 3 distinct layers to Storm's codebase:

First: Storm was designed from the very beginning to be compatible with multiple languages. Nimbus is a Thrift service and topologies are defined as Thrift structures. The use of Thrift allows Storm to be used from any language.

Second: All of Storm's interfaces are specified as Java interfaces. So even though there is a lot of Clojure in Storm's implementation, all usage must go through the Java API. This means that every feature of Storm is always available via Java.

Third: Storm's implementation is largely in Clojure. Line-wise, Storm is about half Java code and half Clojure code. But Clojure is much more expressive, so in reality the great majority of the implementation logic is in Clojure. (This describes Storm before version 2.0, which ported the core from Clojure to Java.)

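12. What does it mean that a tuple coming off a spout can trigger thousands of tuples to be created?

Ans:

A tuple coming off a spout can trigger thousands of tuples to be created based on it. Consider, for example, this streaming word count topology:

```java
TopologyBuilder builder = new TopologyBuilder();
// Spout reading sentences from a Kestrel queue; kestrelHost is a placeholder
// variable because the host name is missing from the source
builder.setSpout("sentences", new KestrelSpout(kestrelHost,
        22133,
        "sentence_queue",
        new StringScheme()));
// 10 tasks split sentences into words; shuffle grouping spreads sentences evenly
builder.setBolt("split", new SplitSentence(), 10)
        .shuffleGrouping("sentences");
// 20 tasks count words; fields grouping sends each word to the same task
builder.setBolt("count", new WordCount(), 20)
        .fieldsGrouping("split", new Fields("word"));
```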

This topology reads sentences off a Kestrel queue, splits the sentences into their constituent words, and then emits, for each word, the number of times it has seen that word before. A tuple coming off the spout triggers many tuples being created based on it: a tuple for each word in the sentence and a tuple for the updated count for each word.

Storm considers a tuple coming off a spout "fully processed" when the tuple tree has been exhausted and every message in the tree has been processed. A tuple is considered failed when its tree of messages fails to be fully processed within a specified timeout. This timeout can be configured on a topology-specific basis using the Config.TOPOLOGY_MESSAGE_TIMEOUT_SECS configuration and defaults to 30 seconds.
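Storm's documentation describes how the acker tracks a tuple tree cheaply: each tuple gets a random 64-bit id, and the acker keeps a single value per spout tuple that is XORed with every id once when the tuple is created and again when it is acked. The value reaches zero (with very high probability) only once every tuple in the tree has been acked. The plain-Java model below illustrates that idea; `AckerModel` and its methods are hypothetical and much simpler than Storm's actual acker, which also handles timeouts and failures.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Random;

// Plain-Java model of XOR-based tuple-tree tracking (hypothetical, not Storm's acker).
class AckerModel {
    private final Map<Long, Long> ackVals = new HashMap<>(); // spout msg id -> running XOR
    private final Random random;

    AckerModel(long seed) { this.random = new Random(seed); }

    // A new tuple joins the tree rooted at spoutMsgId: XOR its random id in
    long newTuple(long spoutMsgId) {
        long id = random.nextLong();
        ackVals.merge(spoutMsgId, id, (a, b) -> a ^ b);
        return id;
    }

    // A bolt acked tuple `id` from that tree: XOR the same id in again, cancelling it
    void ack(long spoutMsgId, long id) {
        ackVals.merge(spoutMsgId, id, (a, b) -> a ^ b);
    }

    // The tree is complete when every created id has been cancelled out
    boolean isFullyProcessed(long spoutMsgId) {
        return ackVals.getOrDefault(spoutMsgId, 0L) == 0L;
    }
}
```

For the word count example, the spout's sentence tuple is the root, each word emitted by the split bolt adds a child id, and the tree only completes after the sentence and every word have been acked.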

13. When is the cleanup method called?

Ans:

The cleanup method is called when a bolt is being shut down and should clean up any resources that were opened. There's no guarantee that this method will be called on the cluster: for example, if the machine the task is running on blows up, there's no way to invoke the method. The cleanup method is intended for when you run topologies in local mode (where a Storm cluster is simulated in-process), and you want to be able to run and kill many topologies without suffering any resource leaks.

14. How do we kill a topology?

Ans:

To kill a topology, simply run:

```shell
storm kill topology-name
```

Give the same name to `storm kill` as you used when submitting the topology.

Storm won't kill the topology immediately. Instead, it deactivates all the spouts so that they don't emit any more tuples, and then Storm waits Config.TOPOLOGY_MESSAGE_TIMEOUT_SECS seconds before destroying all the workers. This gives the topology enough time to complete any tuples it was processing when it got killed.

15. What is the difference between Apache HBase and Storm?

Ans:

| Apache HBase | Storm |
|---|---|
| Stores data and offers low-latency reads of processed data for querying later. | Processes data in real time. |
| Stores data but does not process it. | Processes data but does not store it. |

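16. What is a CombinerAggregator?

Ans:

A CombinerAggregator (part of Storm's Trident API) is used to combine a set of tuples into a single field. It has the following signature:

```java
public interface CombinerAggregator<T> extends Serializable {
    T init(TridentTuple tuple);  // value for a single tuple
    T combine(T val1, T val2);   // merge two partial aggregations
    T zero();                    // result for an empty partition
}
```

Storm calls the init() method with each tuple, and then repeatedly calls the combine() method until the partition is processed. The values passed into the combine() method are partial aggregations, the result of combining the values returned by calls to init(). If the partition is empty, the aggregator emits the output of zero().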
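A plain-Java model can make the init/combine/zero contract concrete without a Storm dependency. `SimpleCombinerAggregator`, `CountAgg` and `PartitionRunner` are hypothetical stand-ins for Trident's `CombinerAggregator`, a counting aggregator (similar in spirit to Trident's built-in `Count`), and the per-partition loop that Storm runs internally.

```java
import java.util.List;

// Hypothetical stand-in for Trident's CombinerAggregator<T>; a "tuple" is just an Object here.
interface SimpleCombinerAggregator<T> {
    T init(Object tuple);      // value for a single tuple
    T combine(T val1, T val2); // merge two partial aggregations
    T zero();                  // result for an empty partition
}

// Counting aggregator: every tuple contributes 1, partial counts are summed
class CountAgg implements SimpleCombinerAggregator<Long> {
    public Long init(Object tuple) { return 1L; }

    public Long combine(Long a, Long b) { return a + b; }

    public Long zero() { return 0L; }
}

class PartitionRunner {
    // Start from zero(), call init() per tuple, and fold the results with combine();
    // an empty partition therefore yields zero()
    static <T> T aggregate(SimpleCombinerAggregator<T> agg, List<?> partition) {
        T result = agg.zero();
        for (Object tuple : partition) {
            result = agg.combine(result, agg.init(tuple));
        }
        return result;
    }
}
```

Because combine() only ever sees partial results, the same aggregator can be applied within each partition and then again across partitions, which is what makes this interface efficient for distributed aggregation.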

17. What are the common configurations in Apache Storm?

Ans:

There are a variety of configurations you can set per topology. A list of all the configurations you can set can be found in the documentation for Storm's Config class. Those prefixed with "TOPOLOGY" can be overridden on a topology-specific basis (the other ones are cluster configurations and cannot be overridden). Here are some common ones that are set for a topology:

Config.TOPOLOGY_WORKERS: This sets the number of worker processes to use to execute the topology. For example, if you set this to 25, there will be 25 Java processes across the cluster executing all the tasks. If you had a combined parallelism of 150 across all components in the topology, each worker process will have 6 tasks running within it as threads.

Config.TOPOLOGY_ACKER_EXECUTORS: This sets the number of executors that will track tuple trees and detect when a spout tuple has been fully processed. If you don't set this variable, or set it to null, Storm will set the number of acker executors to be equal to the number of workers configured for this topology. If this variable is set to 0, Storm will immediately ack tuples as soon as they come off the spout, effectively disabling reliability.

Config.TOPOLOGY_MAX_SPOUT_PENDING: This sets the maximum number of spout tuples that can be pending on a single spout task at once (pending means the tuple has not been acked or failed yet). It is highly recommended that you set this config to prevent queue explosion.

Config.TOPOLOGY_MESSAGE_TIMEOUT_SECS: This is the maximum amount of time a spout tuple has to be fully completed before it's considered failed. This value defaults to 30 seconds, which is sufficient for most topologies.

Config.TOPOLOGY_SERIALIZATIONS: You can register more serializers with Storm using this config so that you can use custom types within tuples.
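To show what `TOPOLOGY_MAX_SPOUT_PENDING` limits, the hypothetical `ThrottledSpout` below counts in-flight tuples for one spout task and refuses to emit once the cap is reached; an ack or a fail frees a slot. It is a plain-Java sketch, not Storm code. As we understand it, Storm itself gets the same effect by not calling the spout's `nextTuple()` while the cap is reached.

```java
// Plain-Java model of max-spout-pending throttling (hypothetical, not Storm code).
class ThrottledSpout {
    private final int maxPending;
    private int pending = 0;
    private int emittedTotal = 0;

    ThrottledSpout(int maxPending) { this.maxPending = maxPending; }

    // Returns true if a tuple was emitted on this call
    boolean tryEmit() {
        if (pending >= maxPending) return false; // back off: too many tuples in flight
        pending++;
        emittedTotal++;
        return true;
    }

    // Both an ack and a fail complete the tuple, freeing one slot
    void complete() {
        if (pending > 0) pending--;
    }

    int pending() { return pending; }

    int emittedTotal() { return emittedTotal; }
}
```

Without such a cap, a fast spout can keep emitting while slow bolts fall behind, so the queues between them grow without bound; that is the "queue explosion" the setting guards against.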

18. Is it necessary to kill the topology when updating a running topology?

Ans:

Yes. To update a running topology, the only option currently is to kill the current topology and resubmit a new one. A planned feature is to implement a storm swap command that swaps a running topology with a new one, ensuring minimal downtime and no chance of both topologies processing tuples at the same time.

19. How is the Storm UI used with a topology?

Ans:

The Storm UI is used to monitor the topology. It provides information about errors happening in tasks and fine-grained stats on the throughput and latency performance of every component of every running topology.

20. Outline the Apache Storm architecture.

Ans:

21. Why doesn't Apache include SSL?

Ans:

SSL (Secure Sockets Layer) data transport requires encryption, and many governments have restrictions on the import, export, and use of encryption technology. If Apache included SSL in the base package, its distribution would involve all sorts of legal and bureaucratic issues, and it would no longer be freely available. Also, some of the technology required to talk to current clients using SSL is patented by RSA Data Security, who restricts its use without a license. (This reflects the Apache 1.x era; Apache 2.x ships with mod_ssl.)

22. Does Apache include any sort of database integration?

Ans:

Apache is a web (HTTP) server, not an application server. The base package doesn't include any such functionality. The PHP project and the mod_perl project allow you to work with databases from within the Apache environment.

23. When installing, why does Apache have three config files: srm.conf, access.conf and httpd.conf?

Ans:

The first two are remnants from the NCSA days, and generally you should be fine if you delete the first two and stick with httpd.conf.

srm.conf: This is the default file for the ResourceConfig directive in httpd.conf. It is processed after httpd.conf but before access.conf.

access.conf: This is the default file for the AccessConfig directive in httpd.conf. It is processed after httpd.conf and srm.conf.

httpd.conf: The httpd.conf file is well-commented and mostly self-explanatory.

24. How do you check httpd.conf for consistency and errors?

Ans:

This command dumps out a description of how Apache parsed the configuration file. Careful examination of the IP addresses and server names may help uncover configuration mistakes.

25. What is the difference between Apache Kafka and Apache Storm?

Ans:

Apache Kafka: It is a distributed and robust messaging system that can handle huge amounts of data and allows messages to pass from one end point to another.

Apache Storm: It is a real-time message processing system, and you can edit or manipulate data in real time. Apache Storm pulls the data from Kafka and applies the required manipulation.

26. Explain when to use fields grouping in Storm. Is there any timeout or limit to known field values?

Ans:

Fields grouping in Storm uses a mod hash function to decide which task to send a tuple to, ensuring that all tuples with the same field values are processed by the same task. Use it when a bolt needs to see every tuple that shares a key, for example when counting words. Because the task is computed from the values themselves, no cache is required. So there is no timeout or limit to known field values.

The stream is partitioned by the fields specified in the grouping. For example, if the stream is grouped by the "user-id" field, tuples with the same "user-id" will always go to the same task, but tuples with different "user-id"s may go to different tasks.
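The hash-and-modulo idea can be sketched in a few lines of plain Java. The class `FieldsGroupingModel` is hypothetical and uses `List.hashCode()` with `Math.floorMod`; Storm's own fields grouping may use a different hash function, but the property that matters is the same: equal grouping values always map to the same task index.

```java
import java.util.List;

// Plain-Java model of fields grouping (hypothetical, not Storm's implementation).
class FieldsGroupingModel {
    // Pick a task index in [0, numTasks) from the grouping field values alone
    static int chooseTask(List<?> groupingValues, int numTasks) {
        // floorMod keeps the index non-negative even when the hash code is negative
        return Math.floorMod(groupingValues.hashCode(), numTasks);
    }
}
```

Since the choice depends only on the values and the task count, nothing about previously seen keys has to be remembered.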

27. What is mod_vhost_alias?

Ans:

This module creates dynamically configured virtual hosts by allowing the IP address and/or the Host: header of the HTTP request to be used as part of the path name to determine what files to serve. This allows for easy use of a huge number of virtual hosts with similar configurations.

28. Tell me, is running Apache as root a security risk?

Ans:

No. The root process opens port 80 but never listens to it, so no user will actually enter the site with root rights. If you kill the root process, you will see the other (child) processes disappear as well.

29. What is MultiViews?

Ans:

MultiViews search is enabled by the MultiViews option. It is the general name given to the Apache server's ability to provide language-specific document variants in response to a request. The server chooses the best match to the client's requirements and returns that document. This is documented quite thoroughly in the content negotiation description page, and Apache Week also carried an article on this subject.

30. What is the cluster architecture of Storm?

Ans:

31. Does Apache include a search engine?

Ans:

Yes, Apache contains a search engine. You can search for a report name in Apache by using the "Search title".

32. Explain how you can streamline log files using Apache Storm.

Ans:

To read from log files, you can configure your spout to emit one tuple per line as it reads the log. The output can then be assigned to a bolt for analysis.
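A minimal plain-Java sketch of such a log-reading spout is shown below, assuming the log is exposed as a `java.io.Reader`. `LogLineSpout` is hypothetical and only mirrors the spout lifecycle: it creates the reader once in `open()` and returns one line per `nextTuple()` call. In real Storm code, `nextTuple()` returns nothing and emits the line through a `SpoutOutputCollector` instead.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;

// Plain-Java model of a line-per-tuple log spout (hypothetical, not Storm's ISpout).
class LogLineSpout {
    private BufferedReader reader;

    // Called once: set up the reader for this spout instance
    void open(Reader source) {
        this.reader = new BufferedReader(source);
    }

    // Called repeatedly: return the next log line, or null when the log is exhausted
    String nextTuple() {
        try {
            return reader.readLine();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Release the underlying resource when the spout shuts down
    void close() throws IOException {
        reader.close();
    }
}
```

A downstream bolt could then, for example, keep only lines that contain "ERROR" and count them per host.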

33. How can Storm be useful in financial services?

Ans:

In financial services, Storm can be helpful in preventing:

Securities fraud:

- Perform real-time anomaly detection on known patterns of activities and use learned patterns from prior modeling and simulations.

- Correlate transaction data with other streams (chat, email, etc.) in a cost-efficient parallel processing environment.

- Reduce query time from hours to minutes on large volumes of data.

- Build a single platform for operational applications and analytics that reduces total cost of ownership (TCO).

Order routing:

Order routing is the process by which an order goes from the end user to an exchange. An order may go directly to the exchange from the customer, or it may go first to a broker who then routes the order to the exchange.

Pricing:

Pricing is the process whereby a business sets the price at which it will sell its products and services, and may be part of the business's marketing plan.

Compliance violations:

Compliance means conformity to a rule, such as a specification, policy, standard or law. Regulatory compliance describes the goal that organizations aspire to achieve in their efforts to ensure that they are aware of and take steps to comply with relevant laws and regulations. Any breach of compliance is a compliance violation.

34. Can we use Active Server Pages (ASP) with Apache?

Ans:

The Apache web server package doesn't include ASP support.

However, a number of projects provide ASP or ASP-like functionality for Apache. Some of these are:

Apache::ASP: Apache::ASP provides an Active Server Pages port to the Apache web server with Perl scripting only, and enables development of dynamic web applications with session management and embedded Perl code. There are also many powerful extensions, including XML taglibs, XSLT rendering, and new events not originally part of the ASP API.

mod_mono: It is an Apache 2.0/2.2/2.4.3 module that provides ASP.NET support for the web's favorite server, Apache. It is hosted inside Apache. Depending on your configuration, the Apache box can be one or a dozen separate processes; all of these processes send their ASP.NET requests to the mod-mono-server process. The mod-mono-server process in turn can host multiple independent applications. It does this by using Application Domains to isolate the applications from one another, while using a single Mono virtual machine.

35. Does Nimbus communicate with supervisors directly in Apache Storm?

Ans:

In classic Storm deployments, Nimbus and the supervisors do not communicate directly; coordination between them is done through a Zookeeper cluster. A supervisor runs multiple worker processes and governs them to complete the tasks assigned by Nimbus. A worker process executes tasks related to a specific topology.

36. What is TOPOLOGY_MESSAGE_TIMEOUT_SECS in Apache Storm?

Ans:

It is the maximum amount of time allotted to the topology to fully process a message released by a spout. If the message is not acknowledged within that time frame, Apache Storm fails the message on the spout.

37. What is the ServerType directive in Apache Server?

Ans:

It defines whether Apache should spawn itself as a child process (standalone) or keep everything in a single process (inetd). Keeping it inetd conserves resources.

The ServerType directive is included in Apache 1.3 for backward compatibility with older UNIX-based versions of Apache. By default, Apache is set to standalone, which means Apache runs as a separate application on the server. The ServerType directive isn't available in Apache 2.0.

38. In which folder are Java applications stored in Apache?

Ans:

Java applications aren't stored in Apache; Apache can only be connected to another web server that hosts Java webapps, using the mod_jk connector. mod_jk is a replacement for the older mod_jserv. It is a completely new Tomcat-Apache plug-in that handles the communication between Tomcat and Apache. There were several reasons for replacing mod_jserv:

- mod_jserv was too complex. Because it was ported from Apache/JServ, it brought with it lots of JServ-specific bits that aren't needed by Apache.

- mod_jserv supported only Apache. Tomcat supports many web servers through a compatibility layer named the jk library. Supporting two different modes of work became problematic in terms of support, documentation and bug fixes. mod_jk should fix that.

- The layered approach provided by the jk library makes it easier to support both Apache 1.3.x and Apache 2.x.

- Better support for SSL. mod_jserv couldn't reliably identify whether a request was made via HTTP or HTTPS. mod_jk can, using the newer Ajp13 protocol.

39. Explain what Apache Storm is. What are the components of Storm?

Ans:

Apache Storm is an open-source distributed real-time computation system used for processing real-time big data analytics. Unlike Hadoop's batch processing, Apache Storm does real-time processing and can be used with any programming language.

40. What is the data model of Apache Storm, and what are its elements?

Ans:

41. Explain what streams and stream groupings are in Apache Storm.

Ans:

In Apache Storm, a stream is an unbounded sequence of tuples, while a stream grouping determines how a stream should be partitioned among a bolt's tasks.
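As an example of how a grouping decides which task receives a tuple, the sketch below models shuffle grouping, which the Storm documentation describes as distributing tuples randomly so that each bolt task gets an equal number of them. The hypothetical `ShuffleGroupingModel` walks through a shuffled list of task indices and reshuffles after each full round; it is plain Java, not Storm's grouping class.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

// Plain-Java model of shuffle grouping (hypothetical, not Storm's implementation).
class ShuffleGroupingModel {
    private final List<Integer> order = new ArrayList<>();
    private final Random random;
    private int pos = 0;

    ShuffleGroupingModel(int numTasks, long seed) {
        for (int t = 0; t < numTasks; t++) order.add(t);
        random = new Random(seed);
        Collections.shuffle(order, random);
    }

    // Random order within a round, but every task is visited once per round,
    // so over many tuples the load stays even
    int chooseTask() {
        if (pos == order.size()) {
            Collections.shuffle(order, random); // round finished: reshuffle
            pos = 0;
        }
        return order.get(pos++);
    }
}
```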

42. Explain the components of Apache Storm.

Ans:

The components of Apache Storm include:

Nimbus: It works like Hadoop's JobTracker. It distributes code across the cluster, uploads computation for execution, allocates workers across the cluster, and monitors computation and reallocates workers as needed.

Zookeeper: It is used as a mediator for communication with the Storm cluster.

Supervisor: It interacts with Nimbus through Zookeeper and, depending on the signals received from Nimbus, executes the process.

43. List the different stream groupings in Apache Storm.

Ans:

- Shuffle grouping

- Fields grouping

- Global grouping

- All grouping

- None grouping

- Direct grouping

- Local grouping

44. What is TOPOLOGY_MESSAGE_TIMEOUT_SECS in Apache Storm?

Ans:

It is the maximum amount of time allotted to the topology to fully process a message released by a spout. If the message isn't acknowledged within the given time frame, Apache Storm fails the message on the spout.

45. What’s Hadoop Storm?

Ans:

Apache Storm is a free and open-source distributed real-time computation system. Apache Storm makes it easy to reliably process unbounded streams of data, doing for real-time processing what Hadoop did for batch processing. Apache Storm is simple, can be used with any programming language, and is a lot of fun to use.

46. How do you write the output into a file using Storm?

Ans:

In a spout, when you are reading a file, create a FileReader object in the open() method; at that point it initializes the reader object for the worker node. Then use that object in the nextTuple() method.

47. How is a tuple fully processed in Apache Storm?

Ans:

Storm requests a tuple from the spout by calling the nextTuple method on the spout. The spout uses the SpoutOutputCollector provided in the open method to emit a tuple to one of its output streams. While emitting a tuple, the spout provides a "message id" that will be used to identify the tuple later. Next, the tuple gets sent to consuming bolts, and Storm takes care of tracking the tree of messages that is created. If Storm detects that a tuple is fully processed, Storm will call the ack method on the originating spout task with the message id that the spout provided to Storm.

48. What do you mean by "spouts" and "bolts"?

Ans:

Apache Storm uses custom-created "spouts" and "bolts" to define data sources and manipulations, to allow batch, distributed processing of streaming data.

49. Where would you use Apache Storm?

Ans:

Storm is used for:

- Stream processing: Apache Storm is adapted to processing a stream of data in real time and updating various databases. The processing rate must keep up with that of the input data.

- Distributed RPC: Apache Storm can parallelize an intense query, enabling its computation in real time.

- Continuous computation: Data streams are continuously processed, and Storm presents the results to clients in real time. This may require processing each message as it arrives or building it in small batches over a short time. Streaming trending topics from Twitter into web browsers is an example of continuous computation.

- Real-time analytics: Apache Storm can interpret and respond to data as it arrives from multiple data sources in real time.

50. What is DZone Big Data in Apache Storm?

Ans:

51. What are the characteristics of Apache Storm?

Ans:

- It is a fast and secure processing system.

- It can manage large volumes of data at tremendous speeds.

- It is open source and part of the Apache projects.

- It aids in processing big data.

- Apache Storm is horizontally scalable and fault-tolerant.

52. How would you split a stream in Apache Storm?

Ans:

You can use multiple streams if your case requires it. That isn't really splitting, but it gives you a great deal of flexibility; for example, you can use it for content-based routing from a bolt. Declaring the streams in the bolt:

```java
@Override
public void declareOutputFields(final OutputFieldsDeclarer outputFieldsDeclarer) {
    // Declare two named output streams (field name "field1" assumed from the emit below)
    outputFieldsDeclarer.declareStream("stream1", new Fields("field1"));
    outputFieldsDeclarer.declareStream("stream2", new Fields("field1"));
}
```

Emitting from the bolt on a specific stream:

```java
collector.emit("stream1", new Values("field1 Value"));
```

Subscribing to the right stream in the topology:

```java
builder.setBolt("myBolt1", new MyBolt1()).shuffleGrouping("boltWithStreams", "stream1");
builder.setBolt("myBolt2", new MyBolt2()).shuffleGrouping("boltWithStreams", "stream2");
```

53. Is there an easy way to deploy Apache Storm on a local machine (say, Ubuntu) for evaluation?

Ans:

A topology is normally submitted to a remote cluster with:

```java
StormSubmitter.submitTopology("Topology_Name", conf, Topology_Object);
```

But if you use the code below, the topology is submitted locally on the same machine. In this case, a new local cluster is created with Nimbus, Zookeeper, and supervisors within the same machine.

```java
// Simulates a full Storm cluster in-process for local testing
LocalCluster cluster = new LocalCluster();
cluster.submitTopology("Topology_Name", conf, Topology_Object);
```

54. What is a directed acyclic graph in Storm?

Ans:

A Storm topology takes the form of a directed acyclic graph (DAG), with spouts and bolts serving as the graph vertices. Edges on the graph are called streams and forward data from one node to the next. Together, the topology operates as a data transformation pipeline.
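Storm describes a topology as a DAG; the hypothetical plain-Java `TopologyGraph` below checks that property with Kahn's algorithm, treating component ids as vertices and streams as edges. It is an illustrative sketch, not something Storm's API exposes. It returns the components in an order where every producer precedes its consumers, or `null` if a cycle exists.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java sketch: a topology as a graph of components (vertices) and streams (edges).
class TopologyGraph {
    private final Map<String, List<String>> edges = new HashMap<>();
    private final Map<String, Integer> inDegree = new HashMap<>();

    void addComponent(String id) {
        edges.putIfAbsent(id, new ArrayList<>());
        inDegree.putIfAbsent(id, 0);
    }

    // A stream flowing from component `from` to component `to`
    void addStream(String from, String to) {
        addComponent(from);
        addComponent(to);
        edges.get(from).add(to);
        inDegree.merge(to, 1, Integer::sum);
    }

    // Kahn's algorithm: repeatedly take components with no unprocessed inputs
    List<String> topologicalOrder() {
        Map<String, Integer> deg = new HashMap<>(inDegree);
        Deque<String> ready = new ArrayDeque<>();
        for (Map.Entry<String, Integer> e : deg.entrySet()) {
            if (e.getValue() == 0) ready.add(e.getKey());
        }
        List<String> order = new ArrayList<>();
        while (!ready.isEmpty()) {
            String v = ready.poll();
            order.add(v);
            for (String w : edges.get(v)) {
                if (deg.merge(w, -1, Integer::sum) == 0) ready.add(w);
            }
        }
        // If some component was never freed, it sits on a cycle
        return order.size() == deg.size() ? order : null;
    }
}
```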

55. What do you mean by nodes?

Ans:

The two categories of nodes are the master node and the worker node. The master node runs a daemon called Nimbus that assigns jobs to machines and monitors their performance. Each worker node runs a daemon called Supervisor, which listens for work assigned to its machine and starts and stops worker processes as needed.

56. What are the elements of Storm?

Ans:

Storm has three crucial elements: topology, stream, and spout. A topology is a network composed of streams and spouts. A stream is an unbounded pipeline of tuples, and a spout is the source of the data streams: it converts the data into a stream of tuples and forwards them to the bolts to be processed.

57. What are Storm topologies?

Ans:

The logic for a real-time application is packaged into a Storm topology. A Storm topology is analogous to a MapReduce job. One key difference is that a MapReduce job eventually finishes, whereas a topology runs forever (or until you kill it, of course). A topology is a graph of spouts and bolts that are connected with stream groupings.

58. What is the TopologyBuilder class?

Ans:

- java.lang.Object -> org.apache.storm.topology.TopologyBuilder

- public class TopologyBuilder extends Object

TopologyBuilder exposes the Java API for specifying a topology for Storm to execute. Topologies are Thrift structures in the end, but because the Thrift API is so verbose, TopologyBuilder greatly eases the process of creating topologies. An example of creating and submitting a topology:

```java
TopologyBuilder builder = new TopologyBuilder();

// Two word spouts with parallelism 5 and 3
builder.setSpout("1", new TestWordSpout(true), 5);
builder.setSpout("2", new TestWordSpout(true), 3);
// Word counter fed by both spouts; fields grouping on "word"
builder.setBolt("3", new TestWordCounter(), 3)
        .fieldsGrouping("1", new Fields("word"))
        .fieldsGrouping("2", new Fields("word"));
// Global grouping: all tuples from spout "1" go to a single task
builder.setBolt("4", new TestGlobalCount())
        .globalGrouping("1");

Map<String, Object> conf = new HashMap<>();
conf.put(Config.TOPOLOGY_WORKERS, 4);

StormSubmitter.submitTopology("topology", conf, builder.createTopology());
```

59. How do you kill a topology in Storm?

Ans:

```shell
storm kill topology-name [-w wait-time-secs]
```

Kills the topology with the name topology-name. Storm will first deactivate the topology's spouts for the duration of the topology's message timeout to allow all messages currently being processed to finish processing. Storm will then shut down the workers and clean up their state. You can override the length of time Storm waits between deactivation and shutdown with the -w flag.

60. What is the architecture of Apache Storm?

Ans:

61. What happens when Storm kills a topology?

Ans:

Storm doesn’t kill the topology immediately. Instead, it deactivates all the spouts so that they don’t emit any more tuples. It then waits Config.TOPOLOGY_MESSAGE_TIMEOUT_SECS seconds before destroying all the workers. This gives the topology enough time to complete any tuples it was processing when it was killed.
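The kill sequence can be modeled in a few lines of plain Java. This is a hypothetical sketch, not Storm code. The class and method names are made up, and the 30-second default mirrors Storm’s documented default for topology.message.timeout.secs. Each in-flight tuple is reduced to the number of seconds it still needs to finish.

```java
import java.util.List;
import java.util.OptionalInt;

// Hypothetical model of the "storm kill" sequence; NOT part of Storm's API.
class KillSequenceModel {
    // Same value as Storm's documented default for topology.message.timeout.secs.
    static final int DEFAULT_MESSAGE_TIMEOUT_SECS = 30;

    /** Seconds to wait between deactivating the spouts and shutting down the workers. */
    static int waitSecs(OptionalInt waitFlag, int messageTimeoutSecs) {
        // An explicit -w value overrides the topology's message timeout.
        return waitFlag.isPresent() ? waitFlag.getAsInt() : messageTimeoutSecs;
    }

    /**
     * Spouts are deactivated first, so no new tuples start. Each entry in
     * remainingSecs is how long an in-flight tuple still needs; it finishes
     * only if that fits inside the wait.
     */
    static int tuplesFinishedBeforeShutdown(List<Integer> remainingSecs, int waitSecs) {
        int finished = 0;
        for (int secs : remainingSecs) {
            if (secs <= waitSecs) {
                finished++;
            }
        }
        return finished;
    }

    public static void main(String[] args) {
        int wait = waitSecs(OptionalInt.empty(), DEFAULT_MESSAGE_TIMEOUT_SECS);
        int done = tuplesFinishedBeforeShutdown(List.of(1, 10, 40), wait);
        System.out.println("wait=" + wait + "s, finished=" + done); // wait=30s, finished=2
    }
}
```

With the default wait, a tuple that still needs 40 seconds is cut off. Passing a larger -w value would let it finish.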

62. What is the recommended approach for writing integration tests for an Apache Storm topology in Java?

Ans:

You can use LocalCluster for integration testing, and Storm’s own integration tests are a good source of inspiration. Useful tools include FeederSpout and FixedTupleSpout. A topology in which all spouts implement the CompletableSpout interface can be run until completion using the tools in the Testing class. Storm tests can also choose to “simulate time”, which means the Storm topology idles until you call LocalCluster.advanceClusterTime. This lets you make assertions in between bolt emits, for example.

63. What does the swap command do?

Ans:

A proposed feature is a storm swap command that swaps a running topology with a new one. It would ensure minimal downtime and no chance of both topologies processing tuples at the same time.

64. How do you monitor topologies?

Ans:

The best place to monitor a topology is the Storm UI. The Storm UI provides information about errors occurring in tasks. It also shows fine-grained statistics on the throughput and latency performance of every component of every running topology.

65. How do you rebalance the number of executors for a bolt in a running Apache Storm topology?

Ans:

You should always have more tasks than executors, or an equal number. The number of tasks is fixed for the lifetime of a topology. To be able to scale up the number of executors at runtime, you therefore need to define a larger initial number of tasks than executors. Think of the number of tasks as the maximum number of executors: #executors <= #tasks. The new executor count is then applied to the running topology with the rebalance command, for example: storm rebalance topology-name -e bolt-name=4
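To see why executors can never outnumber tasks, here is a hypothetical plain-Java sketch, not Storm’s scheduler code. It splits a component’s fixed task ids across executors as evenly as possible. In Storm itself, the task count is fixed when the topology is built, for example with setNumTasks on the bolt declarer. The exact even split below is an assumption used only for illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: distribute a component's fixed task ids over its executors.
class TaskAssignment {
    static List<List<Integer>> assign(int numTasks, int numExecutors) {
        if (numExecutors < 1 || numExecutors > numTasks) {
            // #executors <= #tasks: an executor without a task would have nothing to run.
            throw new IllegalArgumentException("need 1 <= executors <= tasks");
        }
        List<List<Integer>> executors = new ArrayList<>();
        int base = numTasks / numExecutors;   // minimum tasks per executor
        int extra = numTasks % numExecutors;  // the first 'extra' executors take one more
        int nextTask = 0;
        for (int e = 0; e < numExecutors; e++) {
            int count = base + (e < extra ? 1 : 0);
            List<Integer> tasks = new ArrayList<>();
            for (int i = 0; i < count; i++) {
                tasks.add(nextTask++);
            }
            executors.add(tasks);
        }
        return executors;
    }

    public static void main(String[] args) {
        // A bolt declared with 8 tasks, started on 2 executors and later rebalanced to 4.
        System.out.println(assign(8, 2)); // [[0, 1, 2, 3], [4, 5, 6, 7]]
        System.out.println(assign(8, 4)); // [[0, 1], [2, 3], [4, 5], [6, 7]]
    }
}
```

Rebalancing only changes how the same eight tasks are grouped. Asking for nine executors would fail, because there is no ninth task to give it.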

66. What are Streams?

Ans:

A stream is the core abstraction in Storm. A stream is an unbounded sequence of tuples that is processed and created in parallel in a distributed fashion. Streams are defined by a schema that names the fields in the stream’s tuples.
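A stream schema can be pictured as an ordered list of field names, with every tuple carrying its values in the same order. The sketch below is hypothetical. StreamSchema and SchemaTuple are illustrative stand-ins for Storm’s Fields and Tuple types. In a real topology, a component declares its schema in declareOutputFields, and bolts read values with getValueByField.

```java
import java.util.List;

// Hypothetical stand-in for a stream schema: the ordered field names of a stream.
class StreamSchema {
    private final List<String> fieldNames;

    StreamSchema(String... fieldNames) {
        this.fieldNames = List.of(fieldNames);
    }

    /** Creates a tuple after checking its arity; List.of also rejects null values. */
    SchemaTuple tuple(Object... values) {
        if (values.length != fieldNames.size()) {
            throw new IllegalArgumentException("expected " + fieldNames.size() + " values");
        }
        return new SchemaTuple(this, List.of(values));
    }

    int indexOf(String field) {
        int i = fieldNames.indexOf(field);
        if (i < 0) {
            throw new IllegalArgumentException("unknown field: " + field);
        }
        return i;
    }
}

// Hypothetical tuple: positional values interpreted through the schema.
class SchemaTuple {
    private final StreamSchema schema;
    private final List<Object> values;

    SchemaTuple(StreamSchema schema, List<Object> values) {
        this.schema = schema;
        this.values = values;
    }

    /** Looks a value up by field name instead of by position. */
    Object get(String field) {
        return values.get(schema.indexOf(field));
    }
}
```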

67. What can tuples hold in Storm?

Ans:

By default, tuples can contain integers, longs, shorts, bytes, strings, doubles, floats, booleans, and byte arrays. You can also define your own serializers so that custom types can be used natively.

68. How do we check httpd.conf for consistency and errors?

Ans:

We check the configuration file by using:

httpd: This command provides a description of how the server parsed the configuration file. Careful examination of the IP addresses and server names may help uncover configuration errors.

69. What’s Kryo?

Ans:

Storm uses Kryo for serialization. Kryo is a flexible and fast serialization library that produces small serializations.

70. What’s the best Storm design for apache?

Ans:

71. What are Spouts?

Ans:

A spout is the source of streams in a topology. Generally, spouts read tuples from an external source and emit them into the topology. Spouts can be reliable or unreliable. A reliable spout can replay a tuple if Storm failed to process it, whereas an unreliable spout forgets the tuple as soon as it is emitted. Spouts can emit more than one stream. To do so, declare multiple streams using the declareStream method of OutputFieldsDeclarer, and specify the stream to emit to when calling the emit method on SpoutOutputCollector. The main method on spouts is nextTuple. nextTuple either emits a new tuple into the topology or simply returns if there are no new tuples to emit. It is important that nextTuple implementations do not block, because Storm calls all the spout methods on the same thread. The other main methods on spouts are ack and fail. Storm calls them when it detects that a tuple emitted from the spout either made it through the topology successfully or failed to be completed. ack and fail are only called for reliable spouts.
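The ack/fail bookkeeping that makes a spout reliable can be sketched without Storm. In this hypothetical model, nextTuple returns a message id immediately, or null when there is nothing to emit. The spout keeps every emitted message in a pending map: ack drops it, and fail queues it again for replay. The class name and the choice to replay under a fresh id are assumptions of the sketch, not Storm’s ISpout contract.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of a reliable spout's bookkeeping; NOT Storm's ISpout interface.
class ReliableSpoutModel {
    private final Deque<String> toEmit = new ArrayDeque<>();    // messages waiting to be emitted
    private final Map<Long, String> pending = new HashMap<>();  // emitted, not yet acked or failed
    private long nextId = 0;

    ReliableSpoutModel(String... messages) {
        for (String m : messages) {
            toEmit.addLast(m);
        }
    }

    /** Like nextTuple: emit one message and return its id, or return null at once (never block). */
    Long nextTuple() {
        String msg = toEmit.pollFirst();
        if (msg == null) {
            return null;
        }
        long id = nextId++;
        pending.put(id, msg); // keep it so it can be replayed if it fails
        return id;
    }

    /** The tuple was fully processed: the message can be forgotten. */
    void ack(long id) {
        pending.remove(id);
    }

    /** The tuple failed or timed out: queue the message again (under a new id in this model). */
    void fail(long id) {
        String msg = pending.remove(id);
        if (msg != null) {
            toEmit.addFirst(msg);
        }
    }

    int pendingCount() {
        return pending.size();
    }
}
```

An unreliable spout corresponds to dropping the pending map entirely. Once a message is emitted it is gone, so a failure has nothing to replay.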

72. What are Bolts?

Ans:

In a topology, all processing is done in bolts. Bolts can perform a wide range of operations, including joins, aggregations, functions, and more. A single bolt can perform simple stream transformations. Complex stream transformations often require multiple steps and therefore multiple bolts.
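Splitting a complex transformation across bolts can be illustrated with two chained steps. A function bolt splits sentences into words, and an aggregation bolt keeps running counts. This is a hypothetical plain-Java sketch. In Storm, each step would be a separate bolt class wired together with TopologyBuilder, typically with a fields grouping on the word so that the same word always reaches the same counting task.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of two chained bolts; plain Java, not Storm's bolt interfaces.
class BoltChain {
    /** Bolt 1 (a "function"): split one sentence into word tuples. */
    static List<String> splitBolt(String sentence) {
        List<String> words = new ArrayList<>();
        for (String w : sentence.toLowerCase().split("\\s+")) {
            if (!w.isEmpty()) {
                words.add(w); // skip the empty token produced by leading whitespace
            }
        }
        return words;
    }

    /** Bolt 2 (an "aggregation"): keeps running counts across every tuple it receives. */
    static final class CountBolt {
        private final Map<String, Integer> counts = new HashMap<>();

        int execute(String word) {
            return counts.merge(word, 1, Integer::sum); // returns the updated count
        }
    }

    /** Feeds each sentence through both steps and returns the latest count per word. */
    static Map<String, Integer> run(List<String> sentences) {
        CountBolt counter = new CountBolt();
        Map<String, Integer> latest = new HashMap<>();
        for (String s : sentences) {
            for (String w : splitBolt(s)) {
                latest.put(w, counter.execute(w));
            }
        }
        return latest;
    }
}
```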

73. What are the programming languages supported to work with Apache Storm?

Ans:

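There is no single required language: Apache Storm can work with any programming language. Topologies are defined as Thrift structures. Java and other JVM languages use Storm’s native API, while non-JVM languages can implement spouts and bolts through Storm’s multilang protocol.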

74. What are the components of Apache Storm?

Ans:

The components of Apache Storm are Nimbus, ZooKeeper, and Supervisor.

75. What is Nimbus used for?

Ans:

Nimbus is also known as the master node. Nimbus monitors the jobs running on the workers. It distributes the code across the cluster and assigns the work to the available worker nodes. It also provides additional resources to any worker that needs them. Note that Nimbus is comparable to Hadoop’s

76. What’s the Storm process?

Ans:

A system for processing streaming data in real time. Apache™ Storm adds reliable real-time data processing capabilities to Enterprise Hadoop. Storm on YARN is powerful for scenarios requiring real-time analytics, machine learning, and continuous monitoring of operations.

77. What’s Storm used for?

Ans:

Apache Storm is a distributed, fault-tolerant, open-source computation system. You can use Storm to process streams of data in real time with Apache Hadoop. Storm solutions can also provide guaranteed processing of data, with the ability to replay data that wasn’t successfully processed the first time.

78. What’s a Storm tool?

Ans:

Storm, or the Software Tool for the Organization of Requirements Modeling, is a tool designed to streamline the process of specifying a software system by automating processes that help reduce errors.

79. What is a spout in Storm?

Ans:

Spouts represent the source of data in Storm. You can write spouts to read data from data sources such as databases, distributed file systems, and messaging frameworks. Spouts can broadly be classified as follows. Reliable spouts can replay tuples (a tuple is a unit of data in the data stream). Unreliable spouts forget a tuple as soon as it is emitted.

80. Define a real-time big data analysis engine for Storm.

Ans:

81. What are some of the scenarios in which you would want to use Apache Storm?

Ans:

The following use cases can be accomplished with Storm:

Stream processing:

Apache Storm is used to process streams of data in real time and update databases accordingly. The processing must happen in real time, with processing speed matching the speed of the input data.

Continuous computation:

Apache Storm can process data streams continuously and deliver the results to clients in real time. This can be done either by processing each message as it arrives or by processing small batches in quick succession. Streaming trending topics from Twitter into web browsers is an example of continuous computation.

Distributed RPC:

Apache Storm can parallelize a complex query so that it can be computed in real time.

Real-time analytics:

Apache Storm can analyze data as it arrives and respond to it in real time.
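Continuous computation such as trending topics usually means keeping a rolling count over a time window and updating the ranking as each tuple arrives. The sketch below is a hypothetical single-threaded model in plain Java. The window length, the event timestamps, and the TrendingTopics name are assumptions. A real topology would spread this counting across bolt tasks.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical rolling "trending topics" counter; a single-threaded model, not a Storm bolt.
class TrendingTopics {
    private static final class Event {
        final long timeSecs;
        final String topic;

        Event(long timeSecs, String topic) {
            this.timeSecs = timeSecs;
            this.topic = topic;
        }
    }

    private final long windowSecs;
    private final Deque<Event> window = new ArrayDeque<>();      // events in the window, oldest first
    private final Map<String, Integer> counts = new HashMap<>(); // topic -> count in the window

    TrendingTopics(long windowSecs) {
        this.windowSecs = windowSecs;
    }

    /** Counts one event, then evicts events that fell out of the window (timestamps must not decrease). */
    void onEvent(long timeSecs, String topic) {
        window.addLast(new Event(timeSecs, topic));
        counts.merge(topic, 1, Integer::sum);
        while (!window.isEmpty() && window.peekFirst().timeSecs <= timeSecs - windowSecs) {
            Event old = window.pollFirst();
            // Returning null from the remapping function removes the topic entirely.
            counts.computeIfPresent(old.topic, (k, v) -> v == 1 ? null : v - 1);
        }
    }

    /** The n most frequent topics in the current window; ties are broken alphabetically. */
    List<String> top(int n) {
        List<Map.Entry<String, Integer>> entries = new ArrayList<>(counts.entrySet());
        entries.sort((a, b) -> !a.getValue().equals(b.getValue())
                ? Integer.compare(b.getValue(), a.getValue())
                : a.getKey().compareTo(b.getKey()));
        List<String> result = new ArrayList<>();
        for (int i = 0; i < Math.min(n, entries.size()); i++) {
            result.add(entries.get(i).getKey());
        }
        return result;
    }
}
```

Each call to onEvent updates the ranking immediately. That is the "continuous" part: no batch job has to be re-run to refresh the results.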

82. What is Storm topology?

Ans:

A topology is a graph of stream transformations in which each node is a spout or a bolt. Each node in a Storm topology executes in parallel. In your topology, you can specify how much parallelism you want for each node, and Storm will spawn that many threads across the cluster to do the execution.

83. What is ZooKeeper?

Ans:

ZooKeeper lets the nodes of a Storm cluster coordinate with each other. The load on ZooKeeper is light, because it handles only coordination, not message passing.

84. What is the use of a Supervisor?

Ans:

The Supervisor receives instructions from Nimbus through ZooKeeper and runs the worker processes that execute them. The nodes that run Supervisors are also known as worker nodes.

85. What are the features of Apache Storm?

Ans:

Reliable: Storm guarantees that all data will be processed.

Scalable: Storm scales out across a cluster of machines.

Robust: Storm restarts a worker when it fails, while the remaining workers on the node continue to run without interruption.

Simple to use: Standard configurations make it easy to deploy and use.

Rapid: In benchmarks, each node processed over one million 100-byte messages per second.

86. How can log files be streamed?

Ans:

First, configure a spout to read the log files and emit one line at a time. Then analyze each line by passing it to a bolt.
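The spout-then-bolt approach for logs can be sketched in plain Java. This is hypothetical. LogLineSpout mimics a spout that emits one line per nextTuple call, and levelOf plays the role of the analyzing bolt. The sketch assumes a made-up log format of date, time, level, and message separated by spaces.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.util.Optional;

// Hypothetical spout/bolt pair for streaming log files, modeled without Storm classes.
class LogStreaming {
    /** Spout side: emits one log line per nextTuple() call, or null when the input is exhausted. */
    static final class LogLineSpout {
        private final BufferedReader reader;

        LogLineSpout(Reader source) {
            this.reader = new BufferedReader(source);
        }

        String nextTuple() {
            try {
                return reader.readLine(); // one line per call; null at end of input
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            }
        }
    }

    /** Bolt side: extracts the level from lines like "2024-01-01 12:00:00 ERROR disk full". */
    static Optional<String> levelOf(String line) {
        String[] parts = line.trim().split("\\s+", 4);
        if (parts.length < 3) {
            return Optional.empty(); // not in the assumed format
        }
        String level = parts[2];
        return level.matches("TRACE|DEBUG|INFO|WARN|ERROR") ? Optional.of(level) : Optional.empty();
    }
}
```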

87. What are the types of stream groupings in Apache Storm?

Ans:

All, none, local-or-shuffle, global, fields, shuffle, direct, and partial key groupings are available in Apache Storm.
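How a grouping chooses the receiving task is easiest to see in code. This hypothetical sketch covers three groupings from the list. Fields grouping hashes the grouping values so that equal values always land on the same task. Shuffle grouping picks a task at random. Global grouping always picks the lowest task (index 0 here). The hash function and the plain random choice are assumptions; Storm’s own implementation differs in detail.

```java
import java.util.List;
import java.util.concurrent.ThreadLocalRandom;

// Hypothetical illustration of how three stream groupings pick a target task index.
class GroupingSketch {
    /** Fields grouping: equal values for the grouping fields always map to the same task. */
    static int fieldsGrouping(List<?> groupingValues, int numTasks) {
        // List.hashCode depends only on the elements, so equal lists hash equally;
        // floorMod keeps the index non-negative even for negative hash codes.
        return Math.floorMod(groupingValues.hashCode(), numTasks);
    }

    /** Shuffle grouping: any task may be chosen; plain random choice in this sketch. */
    static int shuffleGrouping(int numTasks) {
        return ThreadLocalRandom.current().nextInt(numTasks);
    }

    /** Global grouping: the whole stream goes to a single task (the lowest, index 0). */
    static int globalGrouping(int numTasks) {
        if (numTasks < 1) {
            throw new IllegalArgumentException("no tasks");
        }
        return 0;
    }
}
```

For example, fieldsGrouping(List.of("storm"), 4) returns the same index on every call. This is what lets a counting bolt keep a single total per word.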

88. What is TOPOLOGY_MESSAGE_TIMEOUT_SECS used for?

Ans:

It is the maximum time allowed for a tuple emitted by a spout to be fully processed (30 seconds by default). If the tuple is not fully processed within this time, it is considered failed.
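The timeout rule can be sketched as a map from message id to emit time that is scanned for expired entries. This is a hypothetical model that takes explicit timestamps so it stays deterministic. Storm tracks pending tuples with its own internal structures, so the exact moment a tuple is failed may differ from this sketch.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

// Hypothetical model of the message-timeout rule; NOT Storm's internal tracking code.
class MessageTimeoutTracker {
    private final long timeoutSecs;
    private final Map<Long, Long> emittedAt = new HashMap<>(); // message id -> emit time (secs)

    MessageTimeoutTracker(long timeoutSecs) {
        this.timeoutSecs = timeoutSecs;
    }

    void emitted(long msgId, long nowSecs) {
        emittedAt.put(msgId, nowSecs);
    }

    /** The tuple was fully processed in time, so stop tracking it. */
    void acked(long msgId) {
        emittedAt.remove(msgId);
    }

    /** Removes and returns the ids pending for at least timeoutSecs; a spout would fail() each one. */
    List<Long> expire(long nowSecs) {
        List<Long> failed = new ArrayList<>();
        Iterator<Map.Entry<Long, Long>> it = emittedAt.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<Long, Long> entry = it.next();
            if (nowSecs - entry.getValue() >= timeoutSecs) {
                failed.add(entry.getKey());
                it.remove();
            }
        }
        return failed;
    }
}
```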

89. How do you use Apache Storm as a proxy server?

Ans:

Storm itself is not a proxy server. This answer applies to the Apache HTTP Server, which can be used as a proxy server through its mod_proxy module.

90. What is the Storm architecture, and what is an example of a topology?

Ans:

91. What is the command to kill a Storm topology?

Ans:

storm kill ACTE_topology, where ACTE_topology is the name of the topology.

92. Why is Apache Storm not supplied with SSL?

Ans:

Apache Storm avoids bundling SSL in order to avoid legal or governmental problems.

93. Which Java components does Apache Storm support?

Ans:

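Java is Storm’s primary API. Spouts, bolts, and topologies are written against Java classes such as TopologyBuilder, and Storm itself runs on the JVM.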