Edge Computing Tutorial

Last updated on 25th Sep 2020, Blog, Tutorials

Edge computing

Edge computing is a distributed information technology (IT) architecture in which client data is processed at the periphery of the network, as close to the originating source as possible. The move toward edge computing is driven by mobile computing, the decreasing cost of computer components and the sheer number of networked devices in the internet of things (IoT).

Depending on the implementation, time-sensitive data in an edge computing architecture may be processed at the point of origin by an intelligent device or sent to an intermediary server located in close geographical proximity to the client. Data that is less time-sensitive is sent to the cloud for historical analysis, big data analytics and long-term storage.

How does edge computing work?

One simple way to understand the basic concept of edge computing is to compare it with cloud computing. In cloud computing, data from a variety of disparate sources is sent to a large, centralized data center that is often geographically far from the source of the data. The name “cloud” suits this model: data is uploaded to a monolithic entity far away from its source, like evaporating water rising to a cloud in the sky.

Unlike cloud computing, edge computing allows data to be processed closer to its sources through a network of edge devices.

Edge computing is sometimes also called fog computing. The word “fog” conveys the idea that the advantages of cloud computing should be brought closer to the data source. (In meteorology, fog is simply a cloud that is close to the ground.) The benefits of the cloud are brought closer to the ground (the data source) and are spread out instead of centralized.

The name “edge” in edge computing comes from network diagrams, where the edge typically marks the point at which traffic enters or exits the network. The edge is also the point at which the underlying protocol for transporting data may change. For example, a smart sensor might use a lightweight protocol such as MQTT to send data to a message broker located at the network edge, and the broker would then use the Hypertext Transfer Protocol (HTTP) to forward the valuable data from the sensor to a remote server over the internet.
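To make the protocol change at the edge concrete, here is a minimal Python sketch of the broker-side step. It assumes the broker has already received an MQTT message (no MQTT client library is shown) and that the remote server accepts JSON over an HTTP POST. The endpoint URL, topic name and payload format are hypothetical.

```python
import json
import urllib.request

# Hypothetical cloud endpoint; substitute your own server's URL.
CLOUD_URL = "https://example.com/api/readings"

def mqtt_to_http(topic: str, payload: bytes, url: str = CLOUD_URL) -> urllib.request.Request:
    """Wrap one MQTT-style message (topic + raw payload) in an HTTP POST request.

    The broker speaks MQTT to sensors and HTTP to the remote server, so this
    function is where the transport protocol changes at the edge.
    """
    reading = json.loads(payload.decode("utf-8"))  # assumed: sensors publish JSON
    body = json.dumps({"topic": topic, "reading": reading}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

req = mqtt_to_http("plant1/sensor42/temperature", b'{"celsius": 21.5}')
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
# Actually sending it would be: urllib.request.urlopen(req)
```

Building the `Request` separately from sending it keeps the translation step easy to test without a network connection.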

Edge infrastructure places computing power closer to the source of data, so data has less distance to travel and more places to travel to than in a typical cloud infrastructure. Edge technology, often referred to as edge nodes, is established at the periphery of a network and may take the form of edge servers, edge data centers or other networked devices with compute power. Instead of sending massive amounts of data from disparate locations to a centralized data center, smaller amounts are sent to the edge nodes to be processed and returned, and are forwarded to a larger remote data center only when necessary.

Why does edge computing matter?

Edge computing is important because the amount of data being generated keeps growing, and so does the array of devices that generate it. The rise of IoT and mobile computing contributes to both trends.

Transmitting massive amounts of raw data over a network puts a tremendous load on network resources. It can also be difficult to process and maintain that much data when it is gathered from disparate sources. The quality and types of data may vary significantly from source to source, and many resources are used to funnel it all to one centralized location. An edge network architecture can ease this strain by decentralizing data processing and performing it closer to the source.

In some cases, it is much more efficient to process data near its source and send only the data that has value over the network to a remote data center. Instead of continually broadcasting data about the oil level in a car’s engine, for example, an automotive sensor might simply send summary data to a remote server periodically. A smart thermostat might transmit data only if the temperature rises or falls outside acceptable limits. Or an intelligent Wi-Fi security camera aimed at an elevator door might use edge analytics and transmit data only when a certain percentage of pixels change significantly between two consecutive images, indicating motion.
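The three filtering strategies in the paragraph above can each be expressed in a few lines. This is a minimal sketch, not production firmware. The temperature band (18 to 26 °C), the 30-level pixel threshold and the 5% changed-pixel fraction are illustrative assumptions. Frames are modeled as flat lists of grayscale values, so no camera or imaging library is involved.

```python
from statistics import mean

def thermostat_should_send(temp_c, low=18.0, high=26.0):
    """Smart thermostat: transmit only when the reading leaves the acceptable band."""
    return temp_c < low or temp_c > high

def summarize(readings):
    """Automotive sensor: collapse a window of raw readings into one periodic summary."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }

def motion_detected(prev_frame, curr_frame, pixel_delta=30, changed_fraction=0.05):
    """Security camera: compare two consecutive grayscale frames (0-255 values).

    Motion is reported when more than `changed_fraction` of the pixels differ
    by more than `pixel_delta` intensity levels.
    """
    changed = sum(1 for a, b in zip(prev_frame, curr_frame) if abs(a - b) > pixel_delta)
    return changed / len(curr_frame) > changed_fraction

# Only out-of-band temperatures leave the device.
temps = [21.0, 22.5, 27.1, 17.2, 20.0]
print([t for t in temps if thermostat_should_send(t)])  # [27.1, 17.2]

# One summary record replaces a stream of raw oil-level readings.
print(summarize([40, 41, 39, 40]))

still = [10] * 100
moved = [10] * 90 + [200] * 10  # 10% of the pixels changed sharply
print(motion_detected(still, still), motion_detected(still, moved))  # False True
```

In every case the decision is made on the device, and only the filtered or summarized result needs to cross the network.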

Benefits of edge computing

A major benefit of edge computing is that it improves time to action and can reduce response times to milliseconds, while also conserving network resources. Some specific benefits of edge computing are:

- Increases capacity for low-latency applications and reduces bottlenecks.

- Enables more efficient use of IoT and mobile computing.

- Supports 5G networks, which rely on many distributed nodes.

- Enables real-time analytics and improved business intelligence (BI) insights by using machine learning and smart devices at the edge.

- Enables quick response times and more accurate processing of time-sensitive data.

- Centralizes management of devices by giving end-users more access to data processes, allowing for more specific network insight and control.

- Increases availability of devices and decreases strain on centralized network resources.

- Enables data caching closer to end users through content delivery networks (CDNs).

Challenges of edge computing

Despite its benefits, edge computing is not expected to completely replace cloud computing. And although it can reduce latency and network bottlenecks, edge computing can pose significant security, licensing and configuration challenges.

- Security challenges: Edge computing’s distributed architecture increases the number of attack vectors. In particular, the more intelligence an edge client has, the more vulnerable it becomes to malware infections and security exploits. Edge devices are also likely to have weaker physical security than a traditional data center.

- Licensing challenges: Smart clients can have hidden licensing costs. While the base version of an edge client might have a low initial price, additional functionality may be licensed separately and drive the price up.

- Configuration challenges: Unless device management is centralized and thorough, administrators may inadvertently create security holes by failing to change the default password on each edge device or by updating firmware inconsistently, which causes configuration drift.
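Centralized device management often starts with a fleet-wide audit. The sketch below shows one possible shape for such a check. The inventory format, the field names, the device IDs and the version strings are all hypothetical. The inventory records only whether the default password was changed, never the password itself.

```python
from dataclasses import dataclass

@dataclass
class EdgeDevice:
    device_id: str
    default_password_changed: bool  # hypothetical flag set during provisioning
    firmware_version: str

def audit_fleet(devices, baseline_firmware):
    """Return (device_id, problem) findings for every device that deviates from policy."""
    findings = []
    for device in devices:
        if not device.default_password_changed:
            findings.append((device.device_id, "default password still in use"))
        if device.firmware_version != baseline_firmware:
            # Any device not on the baseline version is a sign of configuration drift.
            findings.append(
                (device.device_id,
                 f"firmware drift: {device.firmware_version} != {baseline_firmware}")
            )
    return findings

fleet = [
    EdgeDevice("cam-01", True, "2.4.1"),
    EdgeDevice("cam-02", False, "2.4.1"),
    EdgeDevice("gw-07", True, "2.3.9"),
]
for finding in audit_fleet(fleet, baseline_firmware="2.4.1"):
    print(finding)
```

Running an audit like this on a schedule lets administrators spot the two failure modes described above before they become security holes.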

Examples of edge computing

Edge computing offers a range of value propositions for smart IoT applications and use cases across a variety of industries. Some of the most popular use cases that will depend on edge computing to deliver improved performance, security and productivity for enterprises include:

Autonomous vehicles

For autonomous driving technologies to replace human drivers, cars must be capable of reacting to road incidents in real time. On average, data transmission between vehicle sensors and backend cloud data centers may take 100 milliseconds. In driving decisions, this delay can significantly affect how quickly a self-driving vehicle reacts.
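A quick calculation shows why 100 milliseconds matters. The 100 ms figure comes from the paragraph above. The 100 km/h speed and the 10 ms edge round trip are illustrative assumptions, not measurements.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Metres a vehicle travels before a response arrives."""
    speed_m_per_s = speed_kmh * 1000 / 3600  # km/h -> m/s
    return speed_m_per_s * latency_ms / 1000  # ms -> s

for latency_ms in (100, 10):  # cloud round trip vs. an assumed edge round trip
    d = distance_during_latency(100, latency_ms)
    print(f"{latency_ms} ms at 100 km/h: {d:.2f} m")
# 100 ms at 100 km/h: 2.78 m
# 10 ms at 100 km/h: 0.28 m
```

At highway speed, a 100 ms round trip means the car covers nearly three metres before it can act on a cloud-computed decision.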

Toyota predicts that the amount of data transmitted between vehicles and the cloud could reach 10 exabytes per month by the year 2025. If network capacity fails to accommodate the necessary network traffic, vendors of autonomous vehicle technologies may be forced to limit self-driving capabilities of the cars.

In addition to data growth and existing network limitations, technologies such as 5G connectivity and artificial intelligence are paving the way for edge computing. 5G will help deploy computing capabilities closer to the logical edge of the network in the form of distributed cellular towers. The technology will be capable of greater data aggregation and processing while maintaining high-speed data transmission between vehicles and communication towers.

AI will further facilitate intelligent decision-making in real time, allowing cars to react faster than humans to abrupt changes in traffic flow.

Fleet management

Logistics service providers leverage IoT telematics data to run effective fleet management operations. Drivers rely on vehicle-to-vehicle communication as well as information from backend control towers to make better decisions. In locations with low connectivity and weak signal strength, the speed and volume of data that can be transmitted between vehicles and backend cloud networks are limited.

With the advent of autonomous vehicle technologies that rely on real-time computation and data analysis, fleet vendors will seek efficient means of network transmission to maximize the value of fleet telematics data for vehicles traveling to distant locations.

By bringing computation capabilities close to fleet vehicles, vendors can reduce the impact of communication dead zones, because the data does not need to be sent all the way back to centralized cloud data centers. Effective vehicle-to-vehicle communication will enable coordinated traffic flows between fleet platoons, as AI-enabled sensor systems deployed at the network edge share analytics insights, rather than raw data, as needed.

Predictive maintenance

The manufacturing industry relies heavily on the performance and uptime of automated machines. In 2006, the cost of manufacturing downtime in the automotive industry was estimated at $1.3 million per hour. A decade later, rising investment in vehicle technologies and growing market profitability make unexpected service interruptions considerably more expensive.

With edge computing, IoT sensors can monitor machine health and identify signs of time-sensitive maintenance issues in real time. The data is analyzed on the manufacturing premises, and only the analytics results are uploaded to centralized cloud data centers for reporting or further analysis.

- Analyzing anomalies can allow the workforce to perform corrective measures or predictive maintenance earlier, before the issue escalates and impacts the production line.

- Analyzing the most impactful machine health metrics can allow organizations to prolong the useful life of manufacturing machines.

As a result, manufacturing organizations can lower the cost of maintenance, improve operational effectiveness of the machines, and realize higher return on assets.
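One simple way to flag anomalies on the premises is a rolling z-score: compare each new sensor reading with the mean and standard deviation of the recent readings. This is a minimal sketch of that technique, not a description of any particular vendor's analytics. The window size, the 3.0 threshold, the five-reading warm-up and the `VibrationMonitor` name are all assumptions.

```python
from collections import deque
from statistics import mean, pstdev

class VibrationMonitor:
    """Hypothetical on-premises monitor that flags readings far from a rolling baseline."""

    def __init__(self, window=20, z_threshold=3.0, min_history=5):
        self.history = deque(maxlen=window)  # only the most recent readings are kept
        self.z_threshold = z_threshold
        self.min_history = min_history

    def update(self, value):
        """Return True if `value` is anomalous relative to the recent window."""
        anomalous = False
        if len(self.history) >= self.min_history:  # wait for a minimal baseline
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma == 0:
                anomalous = value != mu
            else:
                anomalous = abs(value - mu) / sigma > self.z_threshold
        self.history.append(value)
        return anomalous

def anomalies_to_upload(readings, monitor):
    """Analyze locally; return only (index, value) pairs worth sending to the cloud."""
    return [(i, v) for i, v in enumerate(readings) if monitor.update(v)]

readings = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 5.0, 1.0]
print(anomalies_to_upload(readings, VibrationMonitor()))
```

Of nine raw readings, only the spike at index 7 would be uploaded, which is the "analyze locally, report results centrally" pattern described above.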

Voice assistance

Voice assistance technologies such as Amazon Echo, Google Home, and Apple Siri, among others, are pushing the boundaries of AI. An estimated 56.3 million smart voice assistant devices were expected to ship globally in 2018, and Gartner predicted that 30 percent of consumer interactions with technology would take place via voice by 2020. This fast-growing consumer technology segment requires advanced AI processing and low-latency responses to deliver effective interactions with end users.

Particularly for use cases that involve AI voice assistant capabilities, the technology’s needs go beyond computational power and data transmission speed. The long-term success of voice assistance depends on the technology’s consumer privacy and data security capabilities. Sensitive personal information is a treasure trove for underground cybercrime rings, and potential network vulnerabilities in voice assistance systems could pose unprecedented security and privacy risks to end users.

To address this challenge, vendors such as Amazon are enhancing their AI capabilities and deploying the technology closer to the edge, so that voice data doesn’t need to move across the network. Amazon is reportedly working to develop its own AI chip for the Amazon Echo devices.

Edge computing in the voice assistance segment will matter just as much to enterprise users: employees working in the field or on the manufacturing line will be able to access and analyze useful information without interrupting their manual work.

Edge computing, IoT and 5G possibilities

5G requires mobile edge computing, largely because 5G relies on many more network nodes than 4G, which relies on larger, centralized cell towers. 5G’s higher-frequency bands generally travel shorter distances and are weaker than 4G signals, so more nodes are required to pass the signal between them and mobile service users. More nodes means a higher likelihood that one may be compromised, so centralized management of these nodes is crucial. The nodes must also be able to collect and process real-time data that enables them to better serve users.

Edge computing is being driven by the proliferation and expansion of IoT. With so many networked devices, from smart speakers to autonomous vehicles to the industrial internet of things (IIoT), there needs to be processing power close to or even on those devices to handle the massive amounts of data they constantly generate.

IoT provides the devices and the data they collect, 5G provides a faster, more powerful network for that data to travel on, and edge computing provides the processing power to handle and make use of that data in real time. The combination of these three makes for a tightly networked world that could make newer technologies like autonomous vehicles more feasible for public use. Together, the proliferation of 5G, IoT and edge computing could also make smart cities feasible in places where they are not today.

Future of edge computing

According to the Gartner Hype Cycle 2017, edge computing is drawing closer to the Peak of Inflated Expectations and will likely reach the Plateau of Productivity in two to five years. Considering the ongoing research and development in AI and 5G connectivity, and the rising demands of smart industrial IoT applications, edge computing may reach maturity faster than expected.

Forms of Edge Computing

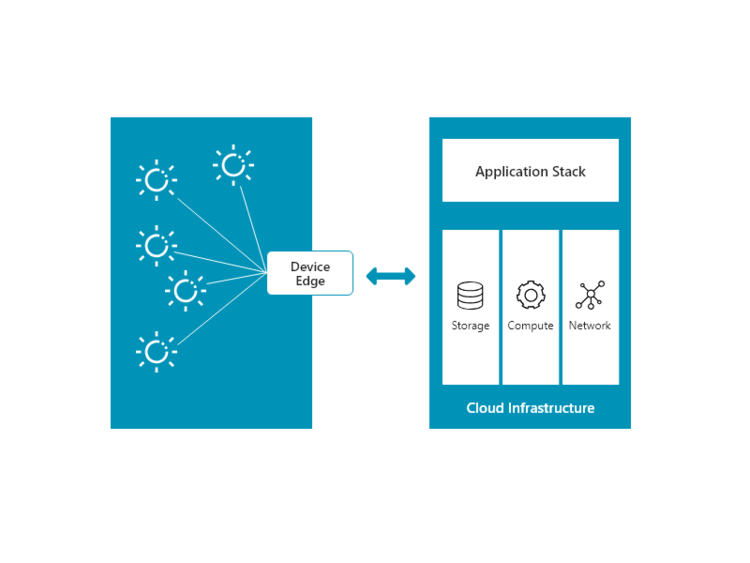

Device Edge

In this model, edge computing is brought to customers in their existing environments. Examples include AWS Greengrass and Microsoft Azure IoT Edge.

Cloud Edge

This model of edge computing is essentially an extension of the public cloud. Content delivery networks are classic examples of this topology: static content is cached and delivered through geographically distributed edge locations.
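The caching behavior of a CDN edge location can be illustrated with a toy time-to-live (TTL) cache. This sketch assumes a single fixed TTL and a hypothetical `fetch_from_origin` function. Real CDNs also honor HTTP caching headers, invalidation requests and other policies that are not modeled here.

```python
import time

class EdgeCache:
    """Toy edge location: serve cached static content, go to the origin only on a miss."""

    def __init__(self, fetch_from_origin, ttl_seconds=60.0, clock=time.monotonic):
        self.fetch_from_origin = fetch_from_origin  # hypothetical origin-server call
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without waiting
        self.store = {}  # path -> (content, expiry time)
        self.origin_fetches = 0

    def get(self, path):
        now = self.clock()
        entry = self.store.get(path)
        if entry is not None and entry[1] > now:
            return entry[0]  # cache hit: served from the edge location
        content = self.fetch_from_origin(path)  # miss or expired: go back to the origin
        self.origin_fetches += 1
        self.store[path] = (content, now + self.ttl)
        return content

fake_now = [0.0]
cache = EdgeCache(lambda p: f"<contents of {p}>", ttl_seconds=60, clock=lambda: fake_now[0])
cache.get("/logo.png")
cache.get("/logo.png")      # served from the edge, no origin trip
fake_now[0] = 61.0
cache.get("/logo.png")      # entry expired, fetched from the origin again
print(cache.origin_fetches)
```

Injecting the clock is a design choice for testability; in production the default `time.monotonic` would be used.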

Vapor IO is an emerging player in this category that is building infrastructure for the cloud edge. Its products include the Vapor Chamber, which is self-monitoring: embedded sensors allow it to be continuously monitored and evaluated by Vapor software, the Vapor Edge Controller (VEC). Vapor IO has also built OpenDCRE, which we will see later in this blog.

The fundamental difference between device edge and cloud edge lies in their deployment and pricing models. Each model suits different use cases, and sometimes it may be advantageous to deploy both.

Edges around you

Edge Computing examples can be increasingly found around us:

- Smart street lights

- Automated industrial machines

- Mobile devices

- Smart homes

- Automated vehicles (cars, drones, etc.)

Data transmission is expensive. Bringing compute closer to the origin of data reduces latency and gives end users a better experience. Some of the evolving use cases of edge computing are augmented reality (AR), virtual reality (VR) and the Internet of Things. For example, the rush people felt while playing an AR-based Pokémon game would not have been possible without real-time responsiveness, and that responsiveness came from the smartphone itself, not the central servers, doing the AR processing. Machine learning (ML) can also benefit greatly from edge computing: the heavy-duty training of ML algorithms can be done in the cloud, and the trained model can be deployed at the edge for near-real-time or even real-time predictions. In today’s data-driven world, edge computing is becoming a necessary component.
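The "train in the cloud, predict at the edge" split can be sketched with a deliberately tiny model. Here a least-squares straight-line fit stands in for heavy-duty ML training, and exporting parameters as JSON is an assumption made for illustration. Real deployments typically use dedicated model formats such as TensorFlow Lite or ONNX. The function and class names are hypothetical.

```python
import json

def train_in_cloud(xs, ys):
    """'Heavy' step, done centrally: fit y = slope * x + intercept by least squares."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    # Export only the learned parameters; the training data stays in the cloud.
    return json.dumps({"slope": slope, "intercept": my - slope * mx})

class EdgeModel:
    """'Light' step, done on the device: load exported parameters and predict locally."""

    def __init__(self, exported: str):
        params = json.loads(exported)
        self.slope = params["slope"]
        self.intercept = params["intercept"]

    def predict(self, x):
        return self.slope * x + self.intercept  # no network round trip needed

exported = train_in_cloud([0, 1, 2, 3], [1, 3, 5, 7])
model = EdgeModel(exported)
print(model.predict(10))
```

Only the small exported parameter set travels to the device, and every prediction afterwards runs locally.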

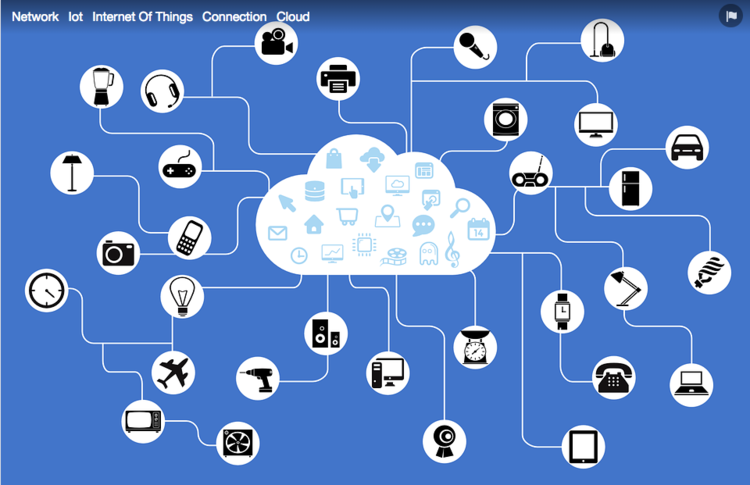

There is a lot of confusion between edge computing and IoT. Stated simply, edge computing is, in a way, an intelligent Internet of Things (IoT) that complements traditional IoT. In the traditional IoT model, all devices, such as sensors, mobiles and laptops, are connected to a central server. Imagine you tell your lamp to switch off: even for such a simple task, the data must be transmitted to the cloud and analyzed there, and only then does the lamp receive the command to switch off. Edge computing brings computing closer to your home, so that either the fog layer between the lamp and the cloud servers, or the lamp itself, is smart enough to process the data.

The image below shows a standard IoT implementation in which everything is centralized, whereas the edge computing philosophy calls for decentralizing the architecture.

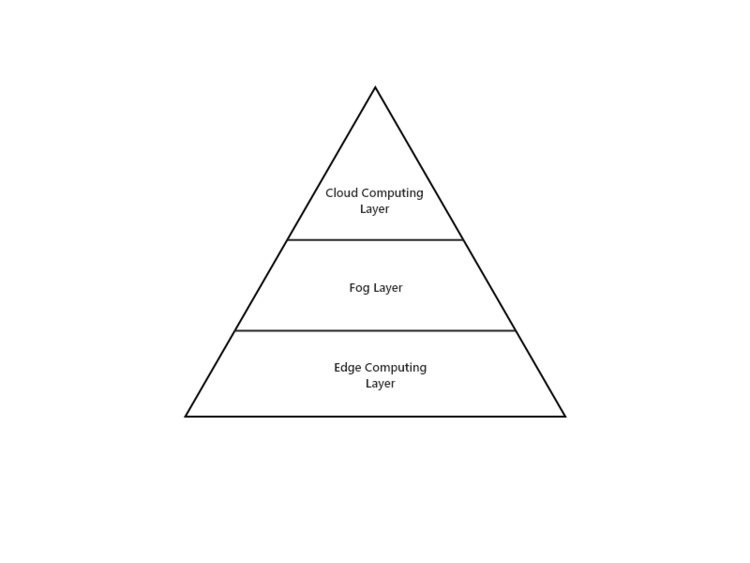
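The lamp scenario can be sketched as a local hub that handles simple commands itself and contacts the cloud only for requests it cannot interpret. The `LocalHub` class, the command strings and the `send_to_cloud` callback are hypothetical; no real smart-home API is implied.

```python
# Commands the local hub (fog layer) can handle without the cloud.
LOCAL_COMMANDS = {"lamp off": ("lamp", "off"), "lamp on": ("lamp", "on")}

class LocalHub:
    """Hypothetical home hub sitting between the lamp and the cloud."""

    def __init__(self, send_to_cloud):
        self.send_to_cloud = send_to_cloud  # hypothetical uplink, used only as a fallback
        self.state = {"lamp": "on"}

    def handle(self, command: str) -> str:
        key = command.strip().lower()
        if key in LOCAL_COMMANDS:
            device, value = LOCAL_COMMANDS[key]
            self.state[device] = value  # acted on locally: no round trip to the cloud
            return "handled locally"
        return self.send_to_cloud(command)  # anything the hub cannot interpret goes upstream

hub = LocalHub(send_to_cloud=lambda cmd: "forwarded to cloud")
print(hub.handle("Lamp off"), hub.state["lamp"])
print(hub.handle("play some jazz"))
```

The same logic could run on the lamp itself; what matters is that the common, simple case never leaves the home network.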

The Fog

Sandwiched between the edge layer and cloud layer, there is the Fog Layer. It bridges the connection between the other two layers.

The difference between fog and edge computing can be summarized as follows:

- Fog computing pushes intelligence down to the local area network level of the network architecture, processing data in a fog node or IoT gateway.

- Edge computing pushes the intelligence, processing power and communication capabilities of an edge gateway or appliance directly into devices such as programmable automation controllers (PACs).

How do we manage Edge Computing?

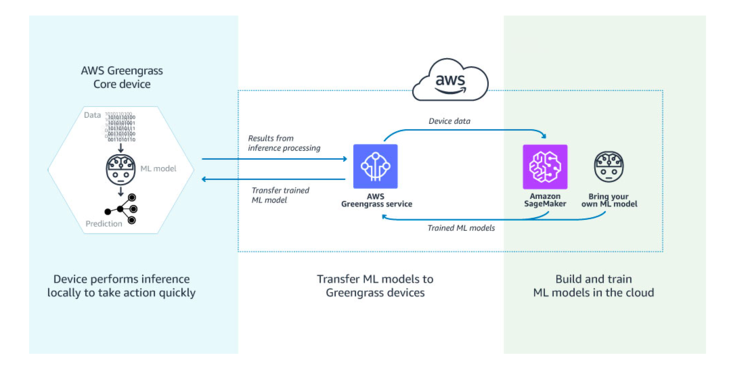

Device Relationship Management (DRM) refers to managing and monitoring interconnected components over the internet. Tools in this space include AWS IoT Core and AWS Greengrass; Fog Node and Fog OS, developed by Nebbiolo Technologies; and Vapor IO’s OpenDCRE, which can be used to control and monitor data centers.

The following image (source: AWS) shows how to manage ML at the edge using AWS infrastructure.

AWS Greengrass lets users build IoT devices and application logic with Lambda functions. Specifically, AWS Greengrass provides cloud-based management of applications that can be deployed for local execution. Locally deployed Lambda functions are triggered by local events, messages from the cloud or other sources.

This GitHub repo demonstrates a traffic light example using two Greengrass devices, a light controller, and a traffic light.
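The event-driven pattern behind the traffic light example can be sketched without any AWS dependency. This is not the Greengrass SDK and not the code from the repository mentioned above. The `LocalEventBus` class, the topic name and the green/yellow/red cycle are hypothetical. The handler uses the Lambda-style `(event, context)` signature only to mirror how locally deployed functions are invoked.

```python
from collections import defaultdict

class LocalEventBus:
    """Hypothetical stand-in for a local message router on an edge core device."""

    def __init__(self):
        self.subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self.subscribers[topic].append(handler)

    def publish(self, topic, event):
        for handler in self.subscribers[topic]:
            handler(event, {"topic": topic})  # Lambda-style (event, context) call

NEXT_COLOR = {"G": "Y", "Y": "R", "R": "G"}  # assumed light cycle
TOPIC = "traffic/light/update"  # hypothetical topic name

class TrafficLight:
    def __init__(self):
        self.color = "G"

    def function_handler(self, event, context):
        """Triggered by a local event; no cloud round trip involved."""
        self.color = event["color"]

def light_controller(bus, current_color):
    """Decide the next color locally and publish it to the light."""
    next_color = NEXT_COLOR[current_color]
    bus.publish(TOPIC, {"color": next_color})
    return next_color

bus = LocalEventBus()
light = TrafficLight()
bus.subscribe(TOPIC, light.function_handler)
for _ in range(3):
    light_controller(bus, light.color)
    print(light.color)
```

Because both devices talk through the local bus, the light keeps cycling even if the connection to the cloud drops.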

Conclusion

We believe that next-generation computing will be strongly influenced by edge computing, and we will continue to explore the new use cases that the edge makes possible.
