- File formats in Hadoop Tutorial | A Concise Tutorial Just An Hour

- Controlling Hadoop Jobs Using Oozie Tutorial | The Complete Guide

- Apache Spark Streaming Tutorial | Best Guide For Beginners

- What is Elasticsearch | Tutorial for Beginners

- Amazon Kinesis : Process & Analyze Streaming Data | The Ultimate Student Guide

- Apache Camel Tutorial – EIP, Routes, Components | Ultimate Guide to Learn [BEST & NEW]

- Apache NiFi (Cloudera DataFlow) | Become an expert with Free Online Tutorial

- Kafka Tutorial : Learn Kafka Configuration

- Apache Sqoop Tutorial

- Spark And RDD Cheat Sheet Tutorial

- Apache Pig Tutorial

- Talend

- Cassandra Tutorial

- Kafka Tutorial

- HBase Tutorial

- Spark Java Tutorial

- ELK Stack Tutorial

- Netbeans Tutorial

- PySpark MLlib Tutorial

- Spark RDD Optimization Techniques Tutorial

- Apache Spark & Scala Tutorial

- Apache Impala Tutorial

- Apache Oozie: A Concise Tutorial Just An Hour | LearnoVita

- Apache Storm Advanced Concepts Tutorial

- Apache Storm Tutorial

- Hadoop Mapreduce tutorial

- Hive cheat sheet

- Spark Algorithm Tutorial

- Apache Spark Tutorial

- Apache Cassandra Data Model Tutorial

- Big Data Applications Tutorial

- Advanced Hive Concepts and Data File Partitioning Tutorial

- Hadoop Architecture Tutorial

- Big Data and Hadoop Ecosystem Tutorial

- Apache Mahout Tutorial

- Hadoop Tutorial

- BIG DATA Tutorial

- File formats in Hadoop Tutorial | A Concise Tutorial Just An Hour

- Controlling Hadoop Jobs Using Oozie Tutorial | The Complete Guide

- Apache Spark Streaming Tutorial | Best Guide For Beginners

- What is Elasticsearch | Tutorial for Beginners

- Amazon Kinesis : Process & Analyze Streaming Data | The Ultimate Student Guide

- Apache Camel Tutorial – EIP, Routes, Components | Ultimate Guide to Learn [BEST & NEW]

- Apache NiFi (Cloudera DataFlow) | Become an expert with Free Online Tutorial

- Kafka Tutorial : Learn Kafka Configuration

- Apache Sqoop Tutorial

- Spark And RDD Cheat Sheet Tutorial

- Apache Pig Tutorial

- Talend

- Cassandra Tutorial

- Kafka Tutorial

- HBase Tutorial

- Spark Java Tutorial

- ELK Stack Tutorial

- Netbeans Tutorial

- PySpark MLlib Tutorial

- Spark RDD Optimization Techniques Tutorial

- Apache Spark & Scala Tutorial

- Apache Impala Tutorial

- Apache Oozie: A Concise Tutorial Just An Hour | LearnoVita

- Apache Storm Advanced Concepts Tutorial

- Apache Storm Tutorial

- Hadoop Mapreduce tutorial

- Hive cheat sheet

- Spark Algorithm Tutorial

- Apache Spark Tutorial

- Apache Cassandra Data Model Tutorial

- Big Data Applications Tutorial

- Advanced Hive Concepts and Data File Partitioning Tutorial

- Hadoop Architecture Tutorial

- Big Data and Hadoop Ecosystem Tutorial

- Apache Mahout Tutorial

- Hadoop Tutorial

- BIG DATA Tutorial

Hive cheat sheet

Last updated on 08th Oct 2020, Big Data, Blog, Tutorials

All the industries deal with the Big data that is large amount of data and Hive is a tool that is used for analysis of this Big Data. Apache Hive is a tool where the data is stored for analysis and querying. This cheat sheet guides you through the basic concepts and commands required to start with it.

This Apache Hive cheat sheet will guide you to the basics of Hive which will be helpful for the beginners and also for those who want to take a quick look at the important topics of Hive

Further, if you want to learn Apache Hive in depth, you can refer to the tutorial blog on Hive.Apache Hive is a data warehouse infrastructure based on Hadoop framework that is perfectly suitable for Data summarization, Data analysis, and Data querying. The platform is largely helpful to manage voluminous datasets that reside inside distributed storage system.

You will be surprised to know that before becoming an integral part of open source Hadoop framework, Hive was originally initiated by the Facebook. Hive framework was designed with a concept to structure large datasets and query the structured data with a SQL-like language that is named as HQL (Hive query language) in Hive.

Subscribe For Free Demo

Error: Contact form not found.

Apache Hive:

It is a data warehouse infrastructure based on Hadoop framework which is perfectly suitable for data summarization, analysis and querying. It uses an SQL like language called HQL (Hive query Language)

HQL:

It is a query language used to write the custom map reduce framework in Hive to perform more sophisticated analysis of the data

Table: Table in hive is a table which contains logically stored data

Components of Hive:

- Meta store: Meta store is where the schemas of the Hive tables are stored, it stores the information about the tables and partitions that are in the warehouse.

- SerDe: Serializer, Deserializer which gives instructions to hive on how to process records

Hive interfaces:

- Hive interfaces includes WEB UI

- Hive command line

- HD insight (windows server)

Hive Function Meta commands:

- Show functions: Lists Hive functions and operators

- Describe function [function name]: Displays short description of the particular function

- Describe function extended [function name]: Displays extended description of the particular function

Types of Hive Functions:

- UDF (User defined Functions): It is a function that fetches one or more columns from a row as arguments and returns a single value

- UDTF (User defined Tabular Functions): This function is used to produce multiple columns or rows of output by taking zero or more inputs

- Macros: It is a function that uses other Hive functions

User defined aggregate functions: A user defined function that takes multiple rows or columns and returns the aggregation of the data

User defined table generating functions: A function which takes a column from single record and splitting it into multiple rows

Indexes: Indexes are created to the speedy access to columns in the database

- Thrift: A thrift service is used to provide remote access from other processors

- Meta store: This is a service which stores the metadata information such as table schemas

- Hcatalog: It is a metadata and table management system for Hadoop platform which enables storage of data in any format.

Hive SELECT statement syntax using HQL:

- Select: Select is a projection operator in HiveQL, which scans the table specified by the FROM clause

- Where: Where is a condition which specifies what to filter

- Group by: It uses the list of columns, which specifies how to aggregate the records

- Cluster by, distribute by, Sort by: Specifies the algorithm to sort, distribute and create cluster, and the order for sorting

- Limit: This specifies how many records to be retrieved

Partitioner:

Partitioner controls the partitioning of keys of the intermediate map outputs, typically by a hash function which is same as the number of reduce tasks for a job

Partitioning: It is used for distributing load horizontally. It is a way of dividing the tables into related parts based on values such as date, city, departments etc.

Bucketing: It is a technique to decompose the datasets into more manageable parts

Hive commands in HQL:

Data Definition Language (DDL) : It is used to build or modify tables and objects stored in a database

- To create database in Hive: create database<data base name>

- To list out the databases created in a Hive warehouse: show databases

- To use the database created: USE <data base name>

- To describe the associated database in metadata: describe<data base name>

- To alter the database created: alter<data base name>

Data Manipulation Language (DML):

These statements are used to retrieve, store, modify Hive data types:

1.Integral data types:

- Tinyint

- Smallint

- Int

- Bigint

2.String types:

- VARCHAR-Length(1 to 65355)

- CHAR-Length(255)

3.Timestamp: It supports the traditional Unix timestamp with optional nanosecond precision

- Dates

- Decimals

General rule :

- interchangeable constructs

- hive is case sensitive

- secmicolon to terminate statements

Hive Data Types :

Primitive Data Types :

1.Numeric :

- TINYINT, SMALLINT, INT, BIGINT

- FLOAT

- DOUBLE

- DECIMAL

2.Date/Time :

- TIMESTAMP

- Strings must be in format “YYYY-MM-DD HH:MM:SS.”

- Interger types as UNIX timestamp in seconds from UNIX epoch (1-JAN-1970 00:00:00)

- Floating point types same as Integer with decimal precision

- DATE

3.String Data Types :

- STRING

- VARCHAR

- CHAR

4.Misc. :

- BOOLEAN

- BINARY is an array of Bytes and similar to VARBINARY in many RDBMSs. BINARY columns are stored within the record, not separately like BLOBs . We can include arbitrary bytes in BINARY column and these bytes are not parsed by Hive as numbers or strings.

Partitioning in Hive :

- Advantages

Partitioning is used for distributing execution load horizontally. As the data is stored as slices/parts, query response time is faster to process the small part of the data instead of looking for a search in the entire data set. For example, In a large user table where the table is partitioned by country, then selecting users of country ‘IN’ will just scan one directory ‘country=IN’ instead of all the directories. - Limitations

Having too many partitions in table creates large number of files and directories in HDFS, which is an overhead to NameNode since it must keep all metadata for the file system in memory only.

Partitions may optimize some queries based on Where clauses, but may be less responsive for other important queries on grouping clauses.

In Mapreduce processing, Huge number of partitions will lead to huge no of tasks (which will run in separate JVM) in each mapreduce job, thus creates lot of overhead in maintaining JVM start up and tear down. For small files, a separate task will be used for each file. In worst scenarios, the overhead of JVM start up and tear down can exceed the actual processing time.

Hive is a data warehousing infrastructure based on Apache Hadoop. Hadoop provides massive scale out and fault tolerance capabilities for data storage and processing on commodity hardware. Hive is designed to enable easy data summation, ad-hoc querying and analysis of large volumes of data. It provides SQL which enables users to do ad-hoc querying, summarization and data analysis easily. At the same time, Hive’s SQL gives users multiple places to integrate their own functionality to do custom analysis, such as User Defined Functions (UDFs).

Hive cheat sheet :

Apache Hive: It is a data warehouse infrastructure based on Hadoop framework which is perfectly suitable for data summarization, analysis and querying. User defined aggregate functions: A user defined function that takes multiple rows or columns and returns the aggregation of the data.

Hive Options :

- Set execution engine : set hive.execution.engine=mr/spark/tez;

- Set queuename : set mapreduce.job.queuename=default

Hive Procedures :

Tranfert data between clusters : If it’s an external table, you just need to execute a “show create table” from the source cluster and execute it on the new cluster. You just need to copy paste the content of the table folder from a ccuster to the other in the path specified in the create statement.

Hive Function Meta Commands L

- SHOW FUNCTIONS : lists Hive functions and operators

- DESCRIBE FUNCTION [function name] : displays short description of the function

- DESCRIBE FUNCTION EXTENDED [function name]: access extended description of the function

Types Of Hive Functions :

- UDF : is a function that takes one or more columns from a row as argument and returns a single value or object. Eg: concat(col1, col2)

- UDTF : takes zero or more inputs and and produces multiple columns or rows of output. Eg: explode()

- Macros : a function that users other Hive functions.

How To Develop UDFs, GenericUDFs, UDAFs, And UDTFs :

- public class YourUDFName extends UDF

- public class YourGenericUDFName extends GenericUDF {..}

- public class YourGenericUDAFName extends AbstractGenericUDAFResolver {..}

- public class YourGenericUDTFName extends GenericUDTF {..}semicolon to terminate statemets

TIMESTAMP :

- Strings must be in format “YYYY-MM-DD HH:MM:SS”

- Integer types as UNIX timestamp in seconds from UNIX epoch (1-JAN-1970 00:00:00)

Floating point types same as Integer with decimal precision.

- DATE

String Data Types :

- STRING

- VARCHAR

- CHAR

- Misc.

- BOOLEAN

- BINARY : BINARY is an array of Bytes and similar to VARBINARY in many RDBMSs. BINARY columns are stored within the record, not separately like BLOBs . We can include arbitrary bytes in BINARY column and these bytes are not parsed by Hive as numbers or strings.

Create External table in hive :

Note:

- The difference between external and internal table is that.

- Drop table for internal table drops the table and metadata.

- Drop table for external table only drops the metadata and data is not touched.

External table : When there is data already in HDFS, an external Hive table can be created to describe the data.It is called External (Data is in the external table and is specified in the Location properties instead of the default warehouse directory.

- Table name would be created as directory

- Schema would be created as meta store

Query data : Now we can use select * from table or specify the partition by condition. When we use partition condition internally the fetch data would be faster. Select * from product_ratings where ProductRating=0

Partitioning of table

- Hive creates tables in partitions.

- They are used to divide the table into related parts.

- Makes data querying more efficient.

Data masking :

Insert masked phone number into accounts : insert overwrite table secured accounts select id,name,MASK(phone) from accounts;

Insert masked phone and email into contacts : insert overwrite table secured contacts select id, accountid,firstname, lastname,MASK(phone),

MASK(email) from contacts; Move tables (secured contacts,secured accounts) from hive to my SQL

- sqoop export –connect jdbc:mysql://localhost:3306/securemask

- username root –password cloudera

- table contacts

- export-dir /user/hive/warehouse/secured contacts.

Hive or Pig – Which framework is better?

Hive is a data warehouse infrastructure and a declarative language like SQL suitable to manage all type of data sets while Pig is data-flow language suitable to explore extremely large datasets only. This is the reason why Hive is always given more preference over pig framework.

Hive Commands in HQL with Examples

Till the time, we have discussed on Hive basics and why it is so popular among organizations. Now, we will focus on Hive commands on HQL with examples. These are frequently used commands that are necessary to know for every Hive programmer wither he is beginner or experiences. So, let us go through each of the commands deeply so that you can quickly start your work as required.

Data Definition Language (DDL)

DDL is used to build or modify tables and objects stored in the database.Some of the examples of DDL statements are – CREATE, DROP, SHOW, TRUNCATE, DESCRIBE, ALTER statements etc.

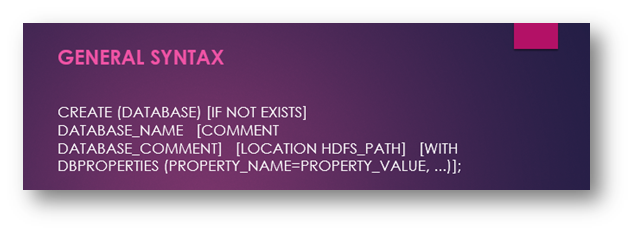

1.Create Database in Hive

The first step when start working with databases is to create a new database. If you are not sure how to create a new database in Hive, let us help you. Open the HIVE shell and enter the command “create <database name>” to start a new database in Hive. Let us give you a deep understanding of the concept through general syntax and example given in the screenshot below –

Here is the actual usage of command for HIVE –

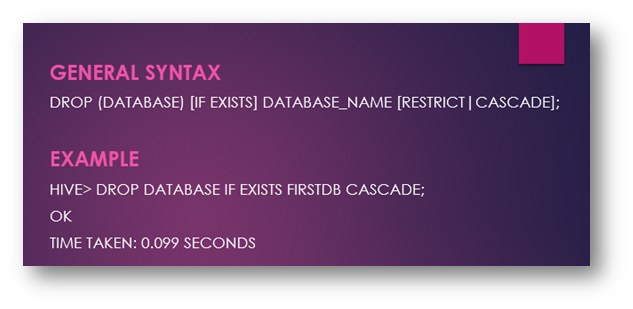

2.DROP Database in Hive

As the name suggest, DROP command is used to delete a database that has already been created earlier. In Hadoop, the database is kept at ‘restrict’ mode by default and it cannot be deleted permissions are not set by the administrator or it is empty. If you are a new user then you should change the ‘RESTRICT’ mode to ‘CASCADE’ before you delete a database.

When using DROP command then Hive may show the error ‘If exists’ that appears when the user tries to delete a database that is not available actually. Let us give you a deep understanding of the concept through general syntax and example given in the screenshot below-

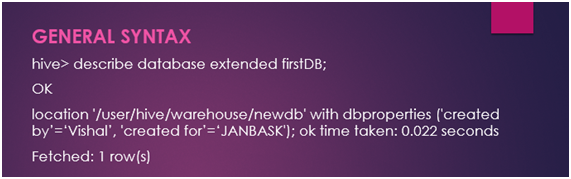

3.DESCRIBE database in Hive

The DESCRIBE command is used to check the associated metadata with the database. The command is useful when you wanted to check data volume and information on large datasets. Let us see how it works actually –

4.ALTER database in Hive

If you wanted to change the metadata associated with the databases then ALTER is just the perfect choice to get your job done within seconds. You can also use the ALTER command to modify the OWNER property and change the role of the OWNER. Here is the general syntax that you should use when working with Hive –

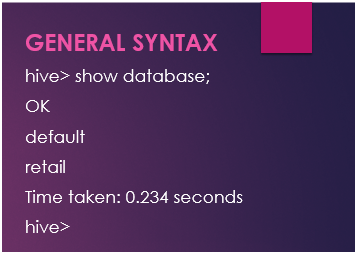

5.SHOW database in Hive

Well, you wanted to check there are how many databases stored in the current schema. The good news is that you can check the same within seconds by using the SHOW command. It will give a list of databases currently exist.

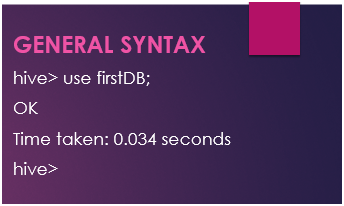

6.USE database in Hive

The command is suitable to select a specific portion of the database or it may be termed as the particular database session where a set of queries can be executed. Here is the example of general syntax

DDL command for Tables in Hive

Till the time, we have discussed DDL command for the database as you have seen earlier like how to create a database, how to delete a database, how to check the number of databases in the current schema, how to use the database and how to alter a database. I hope you must be familiar with all the basic commands well and this is time to start working with tables in Hive by using DDL commands. They are easy and simple when used in the same way as discussed in the blog, let us see how it work actually.

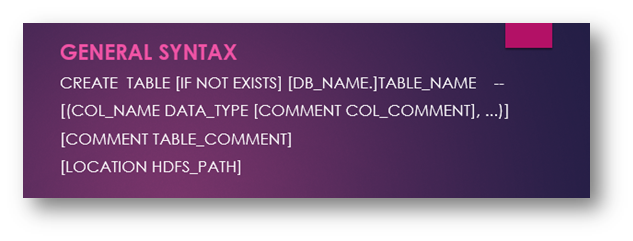

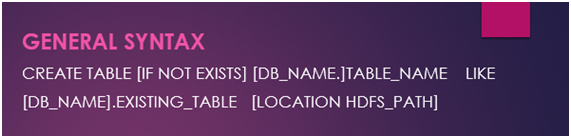

1.How to create a table in Hive?

Create table command is used to create a table in the already existing databaseto store data in the form of rows or columns. For example,if you wanted to create a table with the name “Employee” then important fields could be the name, address, phone number, email id, occupation etc. Also, you need to add a location to the table so that you can mention where particular table needs to store within HDFS.

In Hive, you also have the flexibility to copy the schema of an existing table, not the data. In other words, only structure will be copied to the new table and data can be added as per your convenience. It not only speeds up the table creation but improves the overall efficiency of a programmer too.

2.DROP table command in Hive

With DROP command, you have the flexibility to delete the data associated with the table. This command deletes the metadata and data only, not the structure. Data is sent to Trash and it can be recovered back in case of emergency. If you wanted to delete data permanently then add a ‘PURGE’ option along with the DROP command so that data should be shifted to the Trash anyhow.

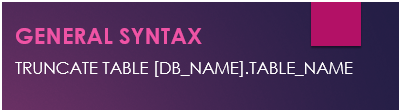

3.Truncate table command in Hive

The truncate command is used to delete all the rows and columns stored in the table permanently. When you are using truncate command then make it clear in your mind that data cannot be recovered after this anyhow. Here is the general syntax for truncate table command in Hive table command in Hive –

4.Alter table commands in Hive

With the ALTER command, the structure, metadata or data of the table can be modified quickly with a simple command as shown below in the screenshot. Further, there is DESCRIBE and the SHOW table command to check the metadata associated with table and number of tables available in the particular database.

conclusion:

That’s all for the day! We have discussed the basic DDL commands in the blog that help you to create a database and table perfectly. You can also perform relevant operations too as required.